Your Next Samsung Could Learn To Love Your Smile

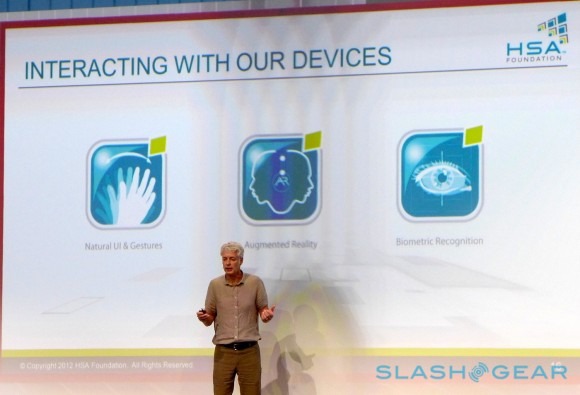

Heterogeneous System Architecture might not be a phrase that trips off your tongue right now, but if AMD, TI and – in a quiet addition – Samsung have their way, you could be taking advantage of it to interact with the computers of tomorrow. AMD VP Phil Rogers, president of the HSA Foundation, filled his IFA keynote with futurology, waxing lyrical about how PCs, tablets and other gadgets will react not only to touch and gestures, but also to body language and eye contact, among other things. Check out the concept demo after the cut.

Heterogeneous System Architecture is a catch-all for combining scalar CPU processing and parallel GPU processing, along with high-bandwidth memory access, to boost app performance while minimizing power consumption. In short, it's what AMD has been pushing for with its APUs (and, elsewhere – though not involved with HSA – NVIDIA has with its CUDA cores), with the HSA Foundation envisioning smartphones, desktops, laptops, consumer entertainment, cloud computing, and enterprise hardware all taking advantage of such a system.

While there were six new public additions to the Foundation, Samsung Electronics' presence came as a surprise. The HSA Foundation was initially formed by AMD, ARM, Imagination Technologies, MediaTek, and Texas Instruments, but today's presentation saw Samsung added to the slides and referred to as a founding member.

Samsung is no stranger to heterogeneous computing tech. Back in October 2011, the company created the Hybrid Memory Cube Consortium (along with Micron) to push a new, ultra-dense memory system that – running at 15x the speed of DDR3 and requiring 70-percent less energy per bit – would be capable of keeping up with multicore technologies. The Cubes would be built as a 3D stack of silicon layers, with a logic layer at the base and memory layers densely stacked on top.

As for the concept, Rogers described a system which could not only learn from a user's routine, but also react to whether they were smiling, where on the display they were looking, and to more mundane cues such as voice and gesture. Such a system could offer up a search result and then, if the user was seen to be smiling at it, learn from that reaction to better shape future suggestions.
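To make that feedback loop a little more concrete, here is a minimal Python sketch of how a smile could nudge future suggestions. It is purely illustrative and not anything AMD or the HSA Foundation showed: the `ReactionRanker` class, its `learning_rate`, and the topic names are all hypothetical, and the smile signal is assumed to come from some separate detector that isn't shown.

```python
from collections import defaultdict


class ReactionRanker:
    """Toy ranker that nudges per-topic scores using an observed reaction."""

    def __init__(self, learning_rate=0.5):
        self.learning_rate = learning_rate
        self.scores = defaultdict(float)  # topic -> learned preference, starts at 0.0

    def suggest(self, topics):
        # Order topics from most to least preferred; ties keep their input order.
        return sorted(topics, key=lambda t: self.scores[t], reverse=True)

    def record_reaction(self, topic, smiled):
        # Hypothetical signal: True if an external detector saw a smile.
        target = 1.0 if smiled else -1.0
        # Move the stored score part of the way toward the observed reaction.
        self.scores[topic] += self.learning_rate * (target - self.scores[topic])


ranker = ReactionRanker()
ranker.record_reaction("cat videos", smiled=True)
ranker.record_reaction("tax forms", smiled=False)
print(ranker.suggest(["tax forms", "news", "cat videos"]))
```

After one smile and one non-smile, the topic that drew the smile moves to the front of the list, the unseen topic stays in the middle, and the one that fell flat drops to the back. A real system would presumably weigh far more signals than a single yes-or-no reaction.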

Exactly when we can expect such technology to show up on our desktops (or, indeed, in laptops, phones and tablets) isn't clear. However, Samsung has already been experimenting with devices that react to the user in basic ways; the Galaxy S III, for instance, uses eye-recognition to keep the screen active even if it's not being touched, while its camera app includes face- and smile-recognition.