I've Got One Big Question After Waymo's Autonomous Car Crash

Waymo's computers may not have been at the wheel during the Arizona car crash last Friday, but eventually autonomous car developers will have to figure out just how human they dare let their AI drivers behave. Plenty of attention has been given to the technological barriers to self-driving cars, and a reasonable amount to the insurance and regulatory implications. Yet there's a big black hole around one other key element: driverless car ethics.

Despite initial suggestions by the Chandler Police Department, Waymo says that its car was in manual mode at the point of the collision. That is, the safety operator at the wheel was physically driving the car, rather than the systems the Alphabet company has developed. It certainly appears that the operator, who sustained minor injuries in the crash, made little if any emergency maneuver to avoid the oncoming vehicle.

The question that stands out to me is how the Waymo car might have handled the situation had it been in autonomous rather than manual mode. Indeed, I suspect it's the first aspect of a broader question that will become the most pressing issue around driverless vehicles as the technology matures. Effective driving isn't just about following the rules of the road, after all. It's about the decisions we human drivers make almost without consciously thinking about them.

Waymo has released video from the front-facing cameras in its car, with the brief clip showing the silver Honda coming across the central median and approaching the autonomous vehicle almost head-on. Without footage from the side or rear of the car, however, it's hard to say what the broader road conditions were. That would likely have had a huge impact on how a self-driving system might have reacted.

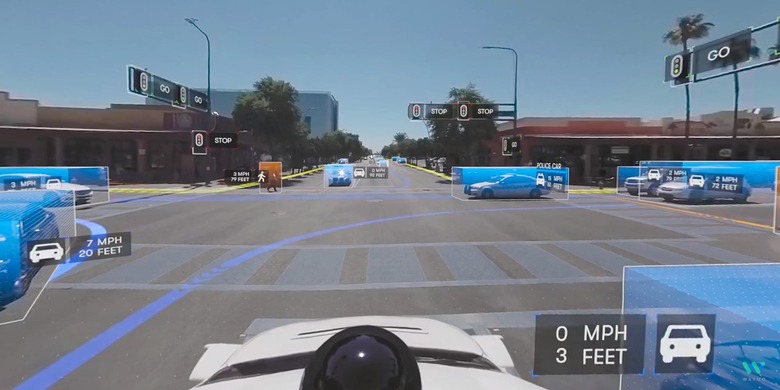

Had the AI been at the controls, I wonder, would Waymo's car have swerved to the side to avoid that oncoming Honda? That would, I suspect, be the reaction many human drivers would have. An autonomous car, though, has 360-degree vision: it would know if there was another vehicle in the next lane.

Does the autonomous vehicle swerve if it knows that doing so could put the adjacent car in greater danger? Or does it stay in its lane and do as much as possible there to minimize the impact of a crash without imperiling the vehicles around it? It could brake hard, for instance, or make more cautious swerves within the limits of its lane.

The question, after all, is who the car is responsible to. The person inside it? The other road users around it? Whoever the insurance policy says is liable?

Once you start to run through the mental exercise that is autonomous car crash ethics, it's easy to end up with a serious headache. My go-to thought exercise involves a self-driving car and a nun walking out into the road. Does the car swerve, putting its riders in jeopardy but potentially saving the nun? Or does it prioritize the passengers and risk the pedestrian?

Perhaps, as a human driver, you'd weigh it in that split second and figure that the nun is old, your kid in the back is young, and the contents of your car are therefore more valuable. To turn that headache into a full-blown migraine, though, add more nuns. Or the schoolchildren they're shepherding. Or somebody walking a cute little dog.

Is a middle-aged man being ferried by a driverless car more or less worth saving than a pair of schoolchildren? What are the relative values of a group of teenagers inside an autonomous taxi, versus a group of middle-aged women stepping out in front of the car? How about if the women are carrying kids, or pushing baby carriages?

According to J. Christian Gerdes, Professor of Mechanical Engineering at Stanford University and Director of the Center for Automotive Research at Stanford (CARS), a self-driving vehicle has three objectives: safety, legality, and mobility. In an ideal world it would satisfy all three equally, but life on the public road is seldom ideal. Instead, at some point the car, or rather the ethics programming its AI uses, needs to decide which takes priority.

Autonomous vehicle ethics won't just matter when it comes to figuring out who to prioritize in a crash. How "human" a robot driver should be will affect every aspect of that car's behavior on the road. With too strict a reading of the letter of the law, for instance, a self-driving car that encounters an obstacle in its lane will simply halt and stay there. Only when you dial in a little moral looseness, a willingness to bend the rules to some extent, will it consider driving around that obstacle, even if that means momentarily entering the opposing lane or driving onto the shoulder.

There will not, Gerdes has argued, be one single "ethical module box" that automakers will be able to buy from a supplier and plug into their self-driving cars. Indeed, in a chapter he co-authored with Sarah M. Thornton in the 2015 book "Autonomes Fahren," the suggestion is that different types of autonomous vehicles could end up prioritizing different levels of "good" driving.

"An automated taxi may place a higher weight on the comfort of the passengers to better display its virtues as a chauffeur," they theorize. "An automated ambulance may want to place a wider margin on how close it comes to pedestrians or other vehicles in order to exemplify the Hippocratic Oath of doing no harm."

Waymo has not released data on what its autonomous systems might have done had they been in control during last week's incident. My guess is that, as with other driverless car projects, the AI keeps watching as a data-collection exercise even when the human operator is in control. From that data, it should presumably be possible to work out how the computer would have reacted had the minivan not been in manual mode.

Predictability is one of the big advantages of a self-driving car over a human-operated one. A computer never gets tired, and never gets distracted by the radio or a phone. Its sensors see everything around it, all at once.

Yet the data those sensors gather, and the way it's interpreted, has to be viewed through an ethical lens, and I'm not sure we've given that lens as much thought as we should have. If driverless cars are to be deployed at scale on public roads, then we need to figure out what moral code we can accept them operating under. Waymo's car may not have swerved out of the way of the crash on Friday, but one day it may have to, and the implications of that could be a whole lot bigger than a fender-bender.