Toyota's New Driverless Prototype Has A Surprise Inside

Most driverless car designs aim to do away with physical controls, which makes it all the more unexpected that Toyota's latest autonomous vehicle has two sets. The Toyota Research Institute (TRI)'s Platform 2.1 research vehicle builds on March's major 2.0 update with upgraded sensors, including a new LIDAR laser scanning system. Arguably more important, however, is how the prototype blends different levels of driver assistance.

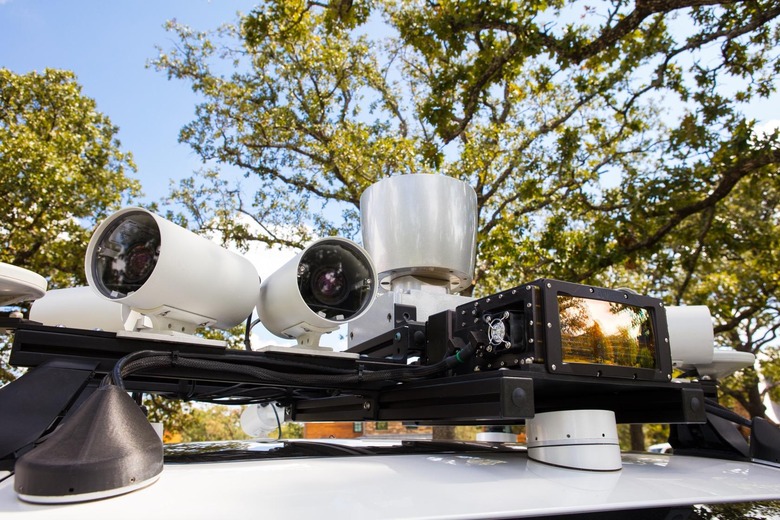

The car is based on a Lexus sedan, heavily modified for TRI's purposes. On the outside, there's a new Luminar LIDAR, which promises a longer sensing range and a dynamically configurable field of view. That means the point cloud the LIDAR creates is not only denser to begin with – making it easier to pick out objects like other vehicles and pedestrians – but can also be focused on a specific area of interest.

It's combined with the v2.0 car's existing sensor system, giving the Platform 2.1 vehicle 360-degree coverage around itself. Under the hood, all that data feeds into a new set of deep learning perception models that Toyota says are more accurate, more efficient, and faster. Importantly, these models not only interpret the car's surroundings – such as other road users and the best route along the road ahead – but also feed information on road signs and lane markings back into map development.

Meanwhile, what's happening inside is particularly curious. Where most autonomous car concepts do away with physical controls altogether, TRI's Platform 2.1 actually has two sets. A second "vehicle control cockpit" on the front passenger side has a complete set of pedals and a steering wheel, using drive-by-wire technology.

At first glance, you might assume it's a safety system, offering redundant control for when the driverless system struggles. In fact, it's intended to let TRI explore how an autonomous car can best transfer control from a human driver and back again, particularly in challenging or potentially dangerous situations. "It also helps with development of machine learning algorithms that can learn from expert human drivers and provide coaching to novice drivers," TRI says.

For the first time, this new prototype combines both of TRI's approaches to autonomous driving. Dubbed Guardian and Chauffeur, they represent different implementations of driver-assistance technology.

Guardian acts as a protective angel, watching over the human at the wheel. While the driver keeps control, Guardian's automated systems run in parallel in the background. That way, if a potential crash is predicted, it can step in to assist with evasive maneuvers, harder braking, or other interventions.

Guardian can also watch for distracted or drowsy drivers. While production Toyota and Lexus cars often have driver alertness prompts, these tend to be fairly rudimentary, judging attentiveness by metrics such as how often the car accidentally drifts over the lane markings. TRI's system could use computer vision to track the driver's skeletal pose, head position, gaze, and even emotional cues. It could then suggest that the driver take a break or, if circumstances demand it, step in with an override.

Chauffeur, by contrast, is what we'd probably consider a more traditional implementation of autonomous driving. It's a Level 4/5 system that takes over driving duties completely, using the same sensor suite and processing as Guardian.

How those systems coexist with human control, and hand over to and from it, is a big part of Toyota's current research. TRI is exploring how giving the occupants of a driverless or driver-assisted car more data can improve their situational awareness. For instance, the Platform 2.1 vehicle can show a point-cloud representation of what its sensors "see" on its dashboard displays. It also uses colored lights to indicate its status.