The First Apple AR Headset Could Be A Much Bigger Deal Than We Expected

Apple's augmented reality headset could pack a surprise, according to the latest analyst predictions, with earlier suggestions of a gradual move into smart glasses upended by the idea of a more ambitious first product. Long the topic of rumor – one which Apple seems happy to periodically stoke – the AR wearable would arguably be the biggest product announcement for the Cupertino firm since the original iPhone.

As was the case with the iPhone, Apple wouldn't be first to the smart glasses category. However, far more important than being number one on the calendar is being top of the pile for usability, functionality, and practicality. The dearth of competitive Android tablets in the face of the iPad is a fine example of that strategy in play.

For Apple's smart glasses, then, the expectation – among numerous leaks and rumors – was that the company would play a relatively conservative game with its launch. The planned first-generation AR wearable, for example, was said to be a relatively basic headset. Tipped to debut in 2022, it would resemble a virtual reality headset like an Oculus Quest or HTC VIVE, and use cameras and other exterior sensors to show pass-through video of the world beyond.

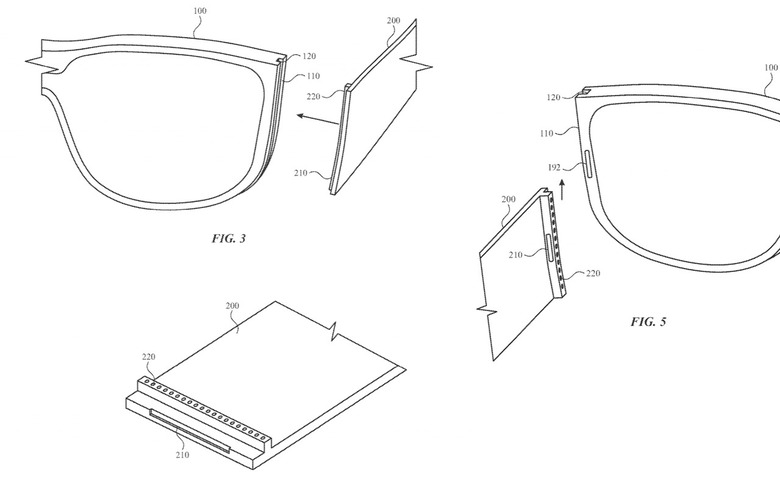

Come 2025, meanwhile, the suggestion is of a transparent-display wearable. By then, the glasses will be more streamlined and more flexible; they'll no longer need the pass-through cameras, for example, because the clear lenses will take care of that instead. Overshadowing all of this has been the question of power, though, and whether Apple will rely on standalone processing within the wearables or a tethered device such as an iPhone.

According to a new analyst note by Ming-Chi Kuo, MacRumors reports, the current suggestion is not one but two chipsets within Apple's first-generation AR wearable. Rather than two identical chips, Apple is predicted to pair one similar to the Apple Silicon within the latest MacBook Air and Mac mini with a second that is less potent overall, but more frugal at a targeted set of tasks.

"We predict that Apple's AR headset to be launched in 4Q22 will be equipped with two processors," Kuo writes. "The higher-end processor will have similar computing power as the M1 for Mac, whereas the lower-end processor will be in charge of sensor-related computing. The power management unit (PMU) design of the high-end processor is similar to that of M1 because it has the same level of computing power as M1."

The result, the analyst predicts, is a standalone headset that won't need to be tethered – by wire or wirelessly – to a nearby iPhone or Mac. Apple's goal, it's predicted, is to shift mobile computing from iPhone to such a wearable within ten years.

Exact specifications for the chipsets or the Apple headset more broadly have not been detailed. However, Kuo predicts that Apple will use a pair of Micro OLED panels from Sony for its first model, each capable of running at 4K resolution. It's the sheer grunt required for not only driving those screens, but also combining the data from external sensors – including up to eight optical sensors – into a smooth pass-through video experience, that demands the M1-scale chip.

As with other chip designs, we've seen Apple combine different types of CPU core on the same SoC before. The iPhone 13's A15 Bionic, launched earlier this year, mixes two high-performance cores known as Avalanche with four energy-efficient cores known as Blizzard. The result is the ability to selectively activate the more potent cores when processing needs demand, and then scale back the iPhone's power requirements for more humdrum tasks, thus maximizing battery life overall.

This reported design for the first Apple AR headset, though, would seemingly expand that to having multiple processors on the same device. Again, that's not entirely a new approach – Apple has relied on co-processors in Mac products before, typically for specific tasks such as hardware-based security and encryption – though here it's a further example of how bringing together the underlying architecture of iOS and macOS devices is helping Apple balance requirements of power and portability. We've seen that pay dividends for MacBook Pro battery life most recently; now, it could help the Apple smart glasses succeed in breaking into the mainstream where other headsets have struggled.