Smart Assistants May Have Made Us More Lax About Privacy

There's probably little debate that AI-powered smart assistants have become very useful. They started out as good fun without much utility, and they sometimes even became the butt of jokes thanks to misheard commands. These days, however, the likes of Amazon Alexa, Google Assistant, and Apple Siri have become a normal part of modern tech life, with or without smart speakers involved. But for all their usefulness, these services may have made people less vigilant about what they say and do, and, it turns out, those private utterances might not be so private after all.

The truth about smart speakers

They may seem like magical devices that give off a Star Trek vibe, but at first smart speakers were the laughing stock of the tech world. Few knew what to make of the Amazon Echo and its odd cylindrical body. Before we knew it, thanks to aggressive marketing, the likes of the Echo, the Google Home, and the HomePod had become commonplace among techies.

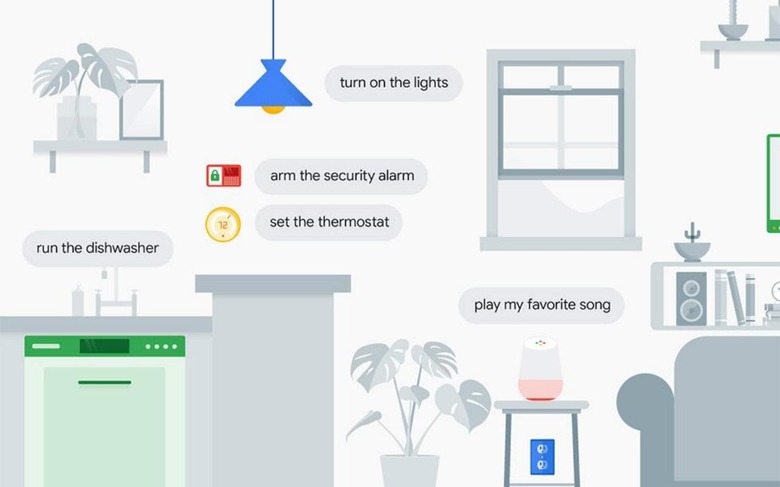

More and more appliances and accessories have started integrating these smart assistants, most of them offering a world of knowledge and control at the push of a button or the mere utterance of a trigger phrase.

The latter is the ultimate convenience: you only have to say the word and, like a genie, the assistant delivers the answer, if one exists, to your ears. But that convenience doesn't happen by magic. Technically, it requires these devices, especially smart speakers, to be always listening.

Smart speaker makers will, of course, insist that the speakers aren't actually recording all the time and that they only start analyzing or recording speech after hearing the trigger phrase. Unfortunately, that's exactly where the problem starts.

Say that again?

Despite decades of work on voice and speech recognition, smart assistants are far from infallible, even at recognizing their own names. It may be a running gag that AI assistants mistake certain phrases for their trigger words, but that quirk has serious implications.

If a smart assistant starts recording as soon as it believes it has heard its trigger, then it also records everything said after an accidental wake-up, whether or not the user is aware of it.
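To make the false-trigger problem concrete, here is a toy sketch in Python. It is not how any real assistant works: actual devices run an acoustic wake-word model on raw audio, whereas this stand-in compares already-transcribed words against an assumed wake word ("alexa") using fuzzy string similarity from the standard library's difflib. The threshold, the capture window, and the sample sentence are all hypothetical values chosen for illustration.

```python
from difflib import SequenceMatcher

WAKE_WORD = "alexa"   # assumed wake word, for illustration only
THRESHOLD = 0.7       # hypothetical similarity cutoff for "heard the wake word"
CAPTURE_WINDOW = 3    # hypothetical number of words "recorded" after a trigger


def sounds_like_wake_word(word: str) -> bool:
    """Crude stand-in for a wake-word detector: fuzzy string similarity."""
    return SequenceMatcher(None, word.lower(), WAKE_WORD).ratio() >= THRESHOLD


def capture_after_triggers(words: list[str]) -> list[list[str]]:
    """Return every clip that would be recorded after a (possibly false) trigger."""
    clips = []
    i = 0
    while i < len(words):
        if sounds_like_wake_word(words[i]):
            # Everything right after the trigger is captured, wanted or not.
            clips.append(words[i + 1 : i + 1 + CAPTURE_WINDOW])
            i += 1 + CAPTURE_WINDOW  # skip past the captured words
        else:
            i += 1
    return clips


# Hypothetical conversation in which nobody addresses the assistant.
conversation = "I told Alexis the test results came back fine".split()
print(capture_after_triggers(conversation))
```

Here the name "Alexis" scores about 0.73 against "alexa", clearing the assumed 0.7 threshold, so the words "the test results" are captured even though no one spoke to the assistant. Raising the threshold would cut such false wake-ups but also make the assistant miss more genuine ones, a trade-off any wake-word detector has to balance.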

That has, in fact, led to at least one embarrassing instance in which private conversations were recorded and sent to a random contact. Such accidental triggers are also used to justify feeding data to AI assistants, that is, submitting sample recordings to companies so they can improve their service. What those companies didn't tell users is what that process actually involves.

Human-assisted machine learning

Given how AI and machine learning are portrayed by the media, many people see them as a magic sauce that makes every piece of software smart. Some are at least aware of the massive, complex data crunching required to pull off those impressive feats of computational wizardry. Even so, few probably imagine how much direct human intervention goes into training these AI assistants.

Unlike other machine learning applications, smart assistants can't simply be trained on arbitrary sets of data. To be truly useful and relevant, they have to work on actual spoken words and phrases. Sometimes they get things wrong, and that is where the human element comes in.

In those cases, an AI-powered assistant has to be told that it got things wrong so that it can improve, and it takes human ears to do that. Unfortunately, that is the dirty secret the industry kept until it was recently exposed.

The walls have ears

It might sound like a case for immediately boycotting these technologies, but, let's face it, that's never going to happen. Not only are they already too ingrained in modern life and consciousness, but these smart assistants and the devices they come in have also proven to be truly useful.

Smart speakers are only the most recent focus of scrutiny. Smartphones have actually been doing the same, to some extent, for years. Pretty soon, anything and everything will be connected to smart assistants or even have them built in.

Rather than abandoning all modern advancements, users, developers, and privacy advocates should take the opportunity to steer this nascent industry before it is too late. Companies should be held accountable and should disclose any practice that endangers users' privacy in any way whatsoever.

Users should also be made aware of the risks they are taking so that they can make informed decisions about whether to embrace those conveniences. As always, vigilance is the price of protecting freedom and privacy. Unfortunately, while privacy and privacy violations are always hot topics, few people ever take them seriously.