Samsung NPU Promises On-Device AI With Lower Power Consumption

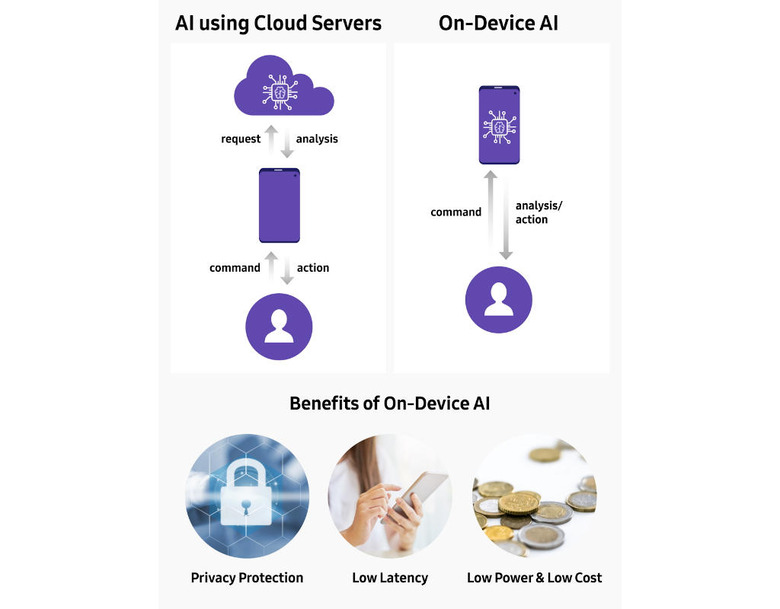

AI is the big thing in tech these days, permeating everything from the Web to homes to mobile devices. Much of that commercial AI, however, lives in the cloud, which has security, privacy, and performance implications. In the mobile industry, there is a movement to move some of that AI processing onto smartphones themselves, and Samsung is one of the companies pushing that trend. Its latest efforts focus on delivering on-device AI processing with a smaller power footprint by shrinking the size of the data groups used in conventional deep learning models.

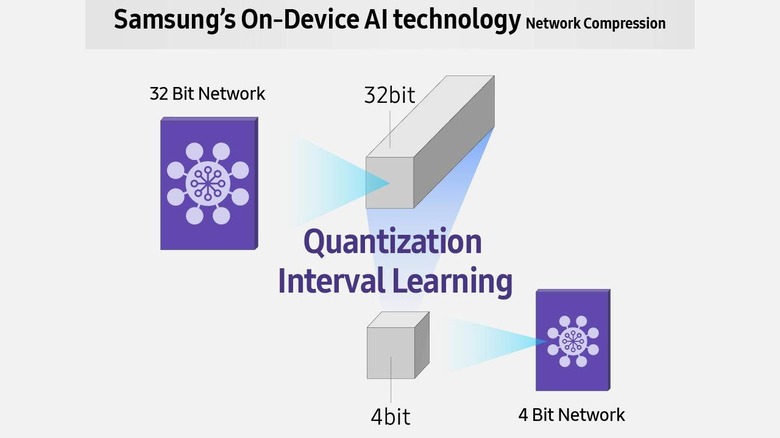

Mirroring traditional computing models, Samsung says that deep learning data is grouped in 32 bits. This naturally requires more hardware in the form of transistors, which, in turn, draws more power. That is no problem for beefy phones with huge batteries, but it leaves out other phones and spends power that could otherwise go to more important activities.

Samsung's research led it to conclude that by grouping data into 4 bits while retaining data accuracy, it can reduce the hardware and power requirements for deep learning. According to Samsung, this "Quantization Interval Learning" may sometimes even be more accurate than having to transfer the data from servers, and it needs only 1/40 to 1/120 the number of transistors.
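To give a rough feel for what squeezing data into 4 bits means, here is a minimal Python sketch of plain uniform quantization. It is not Samsung's Quantization Interval Learning: the interval bounds `lo` and `hi` are fixed by hand here, whereas Samsung's approach, as the name suggests, learns the intervals. The function names and sample weights are hypothetical and only for illustration.

```python
def quantize_4bit(values, lo, hi):
    """Map each float to one of 16 integer codes (0..15) over [lo, hi]."""
    levels = 2 ** 4 - 1           # 15 steps separate the 16 available codes
    step = (hi - lo) / levels     # width of one quantization step
    codes = []
    for v in values:
        clipped = min(max(v, lo), hi)   # values outside the interval saturate
        codes.append(round((clipped - lo) / step))
    return codes, step


def dequantize_4bit(codes, lo, step):
    """Turn 4-bit codes back into approximate float values."""
    return [lo + c * step for c in codes]


# Hypothetical weights; the interval [-1.0, 1.0] is an assumption, not a learned value.
weights = [-0.9, -0.31, 0.0, 0.27, 0.88, 1.5]
codes, step = quantize_4bit(weights, lo=-1.0, hi=1.0)
restored = dequantize_4bit(codes, lo=-1.0, step=step)

for w, c, r in zip(weights, codes, restored):
    print(f"{w:+.2f} -> code {c:2d} -> {r:+.3f}")
```

Each code fits in 4 bits instead of 32, so the same values take one-eighth of the storage. The cost is rounding error of at most half a step for values inside the interval, and clipping for values outside it, which is why the choice of interval matters so much.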

This means that it is even more feasible to have AI processing on the phone or device itself rather than offload it to a remote server. Not only does it reduce the latency of responses since everything is right on the device, it also reduces the risk of eavesdropping.

At the moment, Samsung has only announced the technology behind the NPU but not the NPU itself. That will most likely be embedded in the next Exynos chip, which the company is expected to announce later this year.