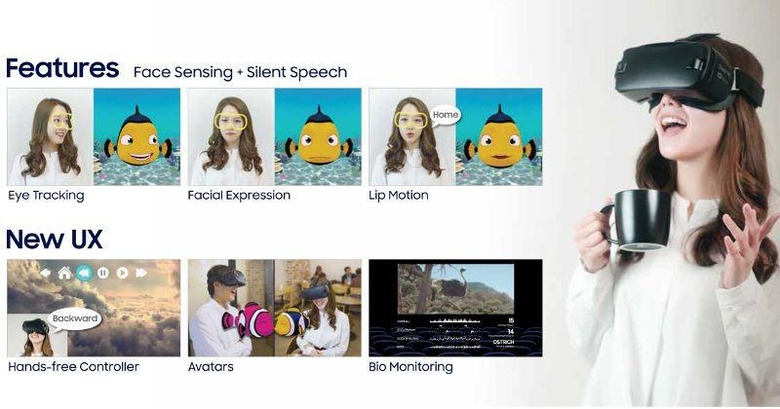

Samsung C-Lab's FaceSense Uses Your Face To Navigate VR

One of the biggest hurdles to virtual and augmented reality today isn't really the display. We've pretty much gotten that down. What breaks the illusion of the made-up worlds is, in fact, our input methods. Our eyes and ears may be deceived into believing the immersion, but our hands aren't. While the likes of Oculus and Vive are grappling with new handheld controllers, Samsung's Creative Lab, a.k.a. C-Lab, is looking at another possible way to control and navigate VR experiences: your face.

Our faces are regarded as the most expressive part of our bodies. A simple change in the curve of our lips, a wrinkling of the forehead, or a raising of an eyebrow can mean the difference between a grin and a grimace, or between an innocent smile and a loaded smirk. Those subtle changes, however, carry more than just expressions. They also bring along electrical signals, signals that can be interpreted as commands for a computer or a program.

This is the theory on which C-Lab's FaceSense lays its foundations. The electrical signals that travel across our bodies have formed the basis for a wide variety of things, from medical tests to "mind-reading" contraptions. FaceSense's purpose is less eccentric but no less ambitious: using your face as a VR controller.

By lining a Gear VR headset with electrodes, the FaceSense prototype is able to detect changes in facial expressions, whether they come from looking at something, reacting to some visual stimulus, or speaking a command. Yes, FaceSense is experimenting with what it calls "Silent Speech", where the device is able to understand what you're saying not by hearing it but by reading your facial expressions, so to speak.

It all sounds too good to be true, and that's mostly because FaceSense is still at an early stage of research. If it manages to prove its theories solid and turn them into a working product, FaceSense could change the face of VR navigation and control forever. Pun totally intended.

SOURCE: Samsung