Ray Tracing For Everyone: Made Simple Today

The video games you play use rasterization, while the computer-generated imagery in the movies you watch uses ray tracing. At least, that's the way it's been until now. Ray tracing works pixel by pixel: for each pixel, it traces rays back into the scene to work out how the scene's light produced that pixel's color. Rasterization works object by object: it takes the objects in the frame, projects them onto the screen, and then decides how light affects their color and brightness. Rasterization can run on fairly modest hardware, while ray tracing, for the moment, requires a great deal of computing power.
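To make the pixel-by-pixel idea concrete, here is a minimal sketch in Python. It is an illustration under our own assumptions, not how NVIDIA or any particular renderer does it: the scene (a single sphere), the camera at the origin, and the light direction are all made up for the example. It casts one ray per pixel, checks whether that ray hits the sphere, and shades each hit by the angle between the surface and the light. Real ray tracers go much further, firing extra rays for shadows, reflections, and bounced light, which multiplies the work per pixel.

```python
import math

# Minimal per-pixel ray casting sketch. The scene, camera, and light
# are hypothetical choices for illustration only.

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def hit_sphere(origin, direction, center, radius):
    """Distance t along a unit-length ray to the nearest sphere hit, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    t = -b - math.sqrt(disc)
    return t if t > 0 else None

def render(width, height, center=(0.0, 0.0, -3.0), radius=1.0,
           light=(1.0, 1.0, 1.0)):
    """Return a height x width grid of brightness values in [0, 1]."""
    light_dir = normalize(light)
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            # Map pixel (i, j) to a point on a virtual screen at z = -1.
            x = 2 * (i + 0.5) / width - 1
            y = 1 - 2 * (j + 0.5) / height
            direction = normalize((x, y, -1.0))
            t = hit_sphere((0.0, 0.0, 0.0), direction, center, radius)
            if t is None:
                row.append(0.0)  # nothing hit: black background
            else:
                point = tuple(t * d for d in direction)
                normal = normalize(tuple(p - c for p, c in zip(point, center)))
                # Lambertian shading: brighter where the surface faces the light.
                row.append(max(0.0, dot(normal, light_dir)))
        image.append(row)
    return image

# Print a small ASCII-art rendering of the sphere.
for row in render(24, 12):
    print("".join(" .:-=+*#"[min(7, int(v * 8))] for v in row))
```

A rasterizer would approach the same sphere from the other direction: it would project the sphere's triangles onto the screen and fill in the pixels they cover. The ray tracer above starts from the pixels and asks what each one sees.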

What's going on here? Why now?

Ray tracing isn't new; it's just newly available to the average person. Until now, it was used to render 3D graphics in movies and in 3D-rendering programs, generally by people making products that are rendered once, recorded, and then played back: movies and still images.

Until now, ray tracing took a lot of computing power and, often, a lot of time. Because ray tracing even a single frame of a scene took so long, computers could not render enough frames per second to make a ray-traced video game playable.

In 2018, NVIDIA revealed its first consumer graphics cards capable of ray tracing in video games. With this new hardware, computers will be able to play ray-traced games at 30 frames per second or higher.
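Some quick arithmetic shows why a playable frame rate was out of reach for so long. The sketch below assumes a 1920x1080 screen (our assumption; no resolution is given here) and one ray per pixel, then a hypothetical four rays per pixel as a rough stand-in for shadows and reflections. These are illustrative numbers, not NVIDIA's figures. At 30 frames per second, every frame has to be finished in about 33 milliseconds.

```python
# Back-of-the-envelope budget for real-time ray tracing.
# Resolution and rays-per-pixel values are illustrative assumptions.

def frame_budget_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps

def rays_per_second(width, height, fps, rays_per_pixel=1):
    """Rays that must be traced each second to keep up with the frame rate."""
    return width * height * fps * rays_per_pixel

print(f"{frame_budget_ms(30):.1f} ms per frame at 30 fps")   # 33.3 ms
print(rays_per_second(1920, 1080, 30))                       # 62208000
print(rays_per_second(1920, 1080, 30, rays_per_pixel=4))     # 248832000
```

Even the simplest case means tracing tens of millions of rays every second, and each ray has to be tested against the scene's geometry. Film studios can spend minutes or hours on a single frame; a game gets about 33 milliseconds.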

Not only games

Games are the primary focus of NVIDIA's keynote at Gamescom 2018 this week, which introduced the first real-time ray tracing GPUs: the NVIDIA GeForce RTX series. NVIDIA makes most of its money in the gaming industry, so it makes sense for the company to focus on games for its first ray tracing GPUs.

But the arrival of real-time ray tracing means we've entered a new era for computer graphics in general. Realism that was previously practical only for major motion pictures is now available in real time. Imagine the possibilities.

Imagine exploring completely real-looking environments in real time, breaking through the barriers that once existed between what we could watch and what we could experience. Imagine sitting on the battlefield in The Lord of the Rings: The Return of the King.

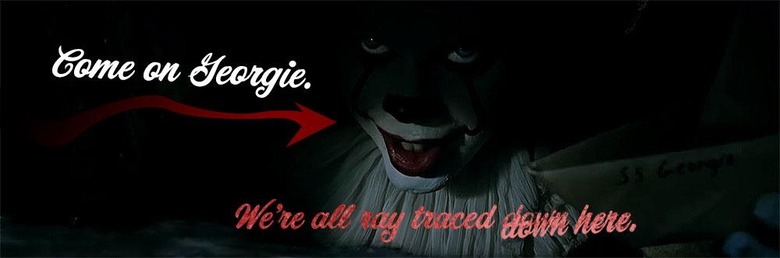

Imagine standing on the street while it rains, looking down into a storm drain and seeing Pennywise the Dancing Clown. Think of how terrifying that would be. Or think of interacting with realistic-looking historical figures, or walking across the surface of alien planets, all of them reacting to what you do and all looking as real as the graphics in multi-million-dollar motion pictures.

This is the beginning

Granted, this is only the beginning. Rolling with one of the first NVIDIA RTX graphics cards, rendering some sweet rays in the first games to support ray tracing, will look amazing. But it's still early days, and I'm not sure I'd be ready to toss many hundreds of dollars at any of this hardware just yet.

Stick around until we've done some testing here in the lab, and wait until game developers, VR developers, and other experience developers have released their first- and second-generation products. Then I'd recommend you reassess the situation. For now, revel in the possibilities.