Pixel 4 Soli Radar Gesture Recognition Isn't As Simple As We Thought

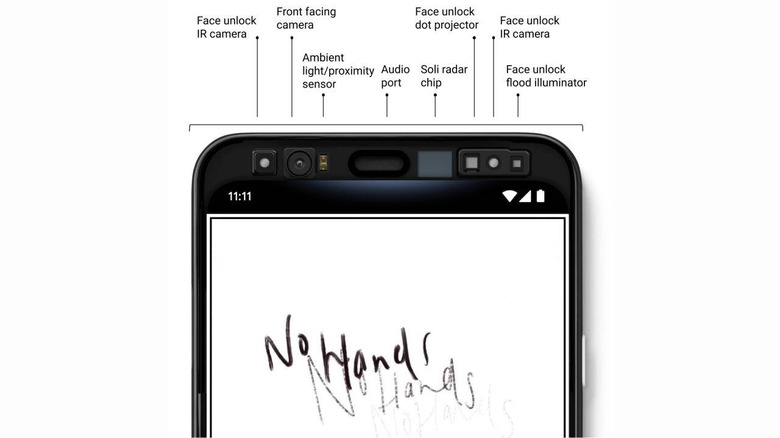

If you ever wondered why Google went from a thick notch on the Pixel 3 XL back to a thick forehead on the Pixel 4, Motion Sense is largely to blame, along with face recognition. The gesture feature is powered by Soli, the radar technology Google has been developing mostly in silence for years. Gesture controls aren't novel, however, and the Pixel 4's limited implementation may have led many to downplay the feature. Google now explains that it's far more complicated than you might think.

Radar technology isn't exactly new and even Google admits it's pretty much a century old. It has even experienced a resurgence thanks to its use in self-driving cars and autonomous robots. Traditional radars, however, only detect large shapes and objects at long distances, which is obviously not a good fit for hand movements on smartphones.

Google's accomplishment was to create a short-range radar that can detect even subtle movements. Contrary to popular belief, the radar doesn't actually "see" the shape of your hand or your face. Instead, the signals it receives are practically blobs that change shape or intensity depending on the object's distance from the sensor and how it moves. With no image of you to capture, there is little here that could be used to spy on you.
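To give a feel for why motion, rather than shape, is what a radar like this picks up, here is a minimal sketch of the textbook Doppler relationship between how fast something moves toward the sensor and how much the reflected signal's frequency shifts. It is not Google's processing pipeline, which the article does not describe. The 60 GHz carrier is an assumption based on the band Soli is publicly described as using, and the function names are made up for illustration.

```python
# Toy illustration of the Doppler effect for a short-range radar.
# Not Soli's actual signal processing; names and the carrier value are assumptions.

SPEED_OF_LIGHT_M_S = 299_792_458.0
ASSUMED_CARRIER_HZ = 60e9  # assumption: a radar operating around 60 GHz


def doppler_shift_hz(radial_velocity_m_s, carrier_hz=ASSUMED_CARRIER_HZ):
    """Frequency shift of the echo from an object moving toward the sensor.

    Positive velocity means the object is approaching. The factor of 2 is
    there because the wave travels to the object and back.
    """
    return 2.0 * radial_velocity_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def radial_velocity_m_s(shift_hz, carrier_hz=ASSUMED_CARRIER_HZ):
    """Inverse of doppler_shift_hz: recover speed from a measured shift."""
    return shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    # A hand swiping toward, away from, and holding still in front of the phone.
    for velocity in (0.5, -0.5, 0.0):
        shift = doppler_shift_hz(velocity)
        print(f"velocity {velocity:+.1f} m/s -> shift {shift:+.1f} Hz")
```

Under these assumptions, a hand moving at half a meter per second shifts the echo by roughly 200 Hz, and the sign of the shift tells the sensor whether the hand is approaching or retreating. A motionless hand produces no shift at all, which hints at why the radar registers movement so much more readily than outlines.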

That solved only one part of the problem, though. The other part is getting Soli's machine learning model to distinguish between gestures that different people might perform in a hundred different ways. For that, Google trained its AI on millions of gestures recorded from thousands of Google volunteers, mixed with hundreds of hours of background radar recordings.

Impressive as the technology behind Soli may be, all of that may be lost on users who have little use for it. Worse, not all Pixel 4 owners even have access to it, because the radar has to be certified separately in each country. It's a major step forward for radars in general, but the technology is still a long way from becoming a commodity.