Pixel 3 Portrait Mode Is Magic With Frankenphone

The rig goes by the name Frankenphone, and the results are incredible. This week Google described how it made the magic in the Pixel 3 camera's Portrait Mode, and it did so with a strange device indeed: five Pixel 3 phones connected together into one monster machine capable of collecting visual information to train the Pixel's camera software.

Today Google explained how it turned a complex set of variables into a camera system that can predict, with reasonable accuracy, how far any object is from a Pixel 3's camera. The key was machine learning: computers crunching numbers given to them by other computers to create a smart final product that can improve all Pixel phones. Google started with a set of "depth cues."

Depth Cues Google Uses:

• Traditional Stereo Algorithms – aka "Depth from Stereo"

• Focus – aka the Defocus cue

• Size of everyday objects – aka a Semantic cue

Google combined the three sorts of depth cues above with the already-massive number of objects learned from sources like Google Photos and Google Image Search. Those are the "everyday objects" the machine recognizes. Recognition of these objects, depth from stereo (using the phase-detection autofocus, or PDAF, pixels), defocus depth, and semantic cues were used to create a system with which photos could be analyzed, in turn teaching Google's camera algorithm to be smarter.
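The "depth from stereo" cue in the list above rests on a standard geometric relation: the farther away an object is, the less it shifts between two viewpoints. Here is a minimal sketch of that classical relation, not Google's learned pipeline. The function name and the focal length and baseline values are hypothetical and chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Classical pinhole stereo relation: depth = focal_length * baseline / disparity.

    disparity_px    -- how far a point shifts between the two views, in pixels
    focal_length_px -- focal length expressed in pixels (assumed value below)
    baseline_m      -- distance between the two viewpoints, in meters (assumed value below)
    """
    if disparity_px <= 0:
        # No measurable shift: treat the point as infinitely far away.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only: a tiny baseline means tiny disparities,
# so distant objects quickly become hard to tell apart.
for disparity in (4.0, 2.0, 1.0, 0.5):
    depth = depth_from_disparity(disparity, focal_length_px=3000.0, baseline_m=0.001)
    print(f"disparity {disparity} px -> depth {depth:.2f} m")
```

Halving the disparity doubles the estimated depth, which is why a very small baseline gives noisy results for faraway objects and why extra cues such as defocus and object size help.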

To teach its system, Google created the Frankenphone, a rig that took photos from five Pixel 3 devices at once. Synchronized together, the cameras on this mega-phone captured the same scene from five viewpoints, giving Google's system far more detailed depth information on the objects in each photo than a single phone could ever provide.

With this information, Google's system becomes better at predicting the size of everyday objects and their relative distance from the camera. Multiple angles on a single subject, like a human being, also give the system more information on the many different positions in which a human might be sitting or standing.

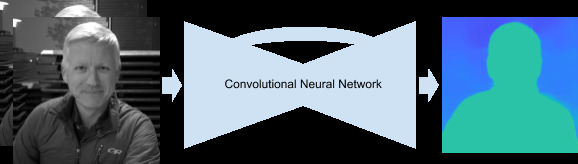

Using the resulting information, Google is able to run PDAF photos through a Convolutional Neural Network to output an accurate depth map. With that depth map, the final photo can be rendered, complete with blur around the main subject, creating a "professional looking portrait" of sorts. Everything learned from the rig is turned around to make the Pixel better at predicting a depth map in photos captured with the Pixel you've got in your hand right now.

This results in nice Portrait Mode photos, but it also enables the app Google Photos to re-adjust photos after capture. Open up a Portrait Mode photo in the newest version of Google Photos and you should have the ability to change the amount of blur and change the focus point, too – which is awesome! For more information on this subject from Google, go and see more on the Google AI blog.
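To get a feel for how a depth map makes the blur amount and focus point adjustable after capture, here is a rough sketch in plain Python. It is a simplification under stated assumptions, not how Google Photos does it: the function names, the `strength` and `max_radius` parameters, and the use of a square box blur are all hypothetical choices for illustration.

```python
def blur_radius(depth, focus_depth, strength, max_radius=3):
    """Pixels farther from the chosen focus depth get a larger blur radius (in pixels)."""
    return min(max_radius, int(round(strength * abs(depth - focus_depth))))


def synthetic_defocus(image, depth_map, focus_depth, strength):
    """Blur each pixel of a grayscale image by an amount chosen from its depth.

    image and depth_map are same-sized lists of rows. A box average stands in
    for a real lens-shaped blur (assumption made to keep the sketch short).
    """
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            r = blur_radius(depth_map[y][x], focus_depth, strength)
            total, count = 0.0, 0
            # Average over the (2r+1) x (2r+1) window, clipped at the image edges.
            for yy in range(max(0, y - r), min(height, y + r + 1)):
                for xx in range(max(0, x - r), min(width, x + r + 1)):
                    total += image[yy][xx]
                    count += 1
            output[y][x] = total / count
    return output


# Tiny example: one bright pixel in the middle of a dark 3x3 image.
image = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
depth = [[1.0, 1.0, 1.0], [1.0, 3.0, 1.0], [1.0, 1.0, 1.0]]

in_focus = synthetic_defocus(image, depth, focus_depth=3.0, strength=1.0)
out_of_focus = synthetic_defocus(image, depth, focus_depth=1.0, strength=1.0)
```

Changing `focus_depth` moves the sharp plane, and changing `strength` scales how quickly things blur away from it. Both work on the saved depth map, with no need to retake the photo.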

See that data right now

If you've got a Google Pixel 3, take a few Portrait Mode photos and run them through a depth extractor such as Depth Data Extractor. You'll quickly see a super awesome bit of tech at work. Or, if you have a few minutes, head over to the Depth Data Viewer. That'll fully blow your mind to chunks, without a doubt.

Code for the first project comes from Jaume Sanchez Elias. Code for the second project is also by Jaume Sanchez Elias, using three.js code and Kinect code from @kcimc and @mrdoob. You can also see all the GitHub code if you so choose.