Perfect Photo Portrait Research Mimics The Masters

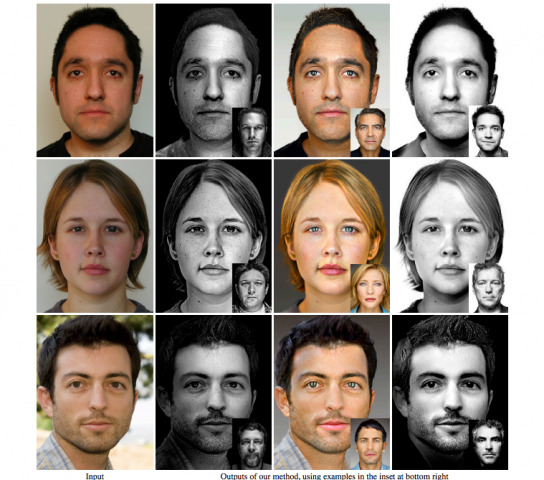

Striking headshot portraits in the style of famous photographers could one day be created from the sort of snaps you take with your phone, thanks to a retouching system cooked up by one computer vision research team. The handiwork of a group led by computational photography researcher YiChang Shih, the system, dubbed "Style Transfer for Headshot Portraits", takes a regular source picture and another showing an example of the style you're trying to achieve, then blends the two automatically. It can even do the same for video.

Although many phones and cameras offer stylized modes that automatically adjust the scene you're shooting, the researchers argue that these don't work so well on portraits. That's because they manipulate the whole scene at once, rather than delivering the sort of precision retouching a professional photographer would apply.

In contrast, this new system takes a more complicated – but apparently effective – approach, breaking down the different aspects of the source and style images, and mapping the properties of the latter onto the former. For instance, contrast and lighting direction can be translated across, effectively mimicking professional lighting setups from relatively mundane source files.

It's crying out to be developed into a smartphone app, though it's unclear what sort of processing power was required to deliver the results, particularly the video manipulation. A photo conversion takes around 12 seconds, the research paper suggests, while video requires some breaking down in order to avoid flickering (but still leads to impressive results, as you can see in the video demo below).

Computational photography is expected by many to be the next big thing in cameras, as smartphones get more powerful and standalone cameras strive to keep up. For instance, the Lytro ILLUM packages light-field photography into a prosumer model, while HTC's One M8 offers post-capture processing with depth sensor data; both are powered by Qualcomm processors, with the chip firm planning a reference design to make advanced cameras more readily adopted by manufacturers.

The style-mimicking system is due to be presented at SIGGRAPH 2014 in August. No word on whether it might spawn a commercial app any time soon.

Update: Project lead YiChang Shih tells us that he believes that running the style transfer system on an iPad will eventually be possible, though he's "not sure" about whether a phone could handle it. That's down to the two-part nature of the algorithm, he explained.

First is the "SIFT flow" step, which demands very high processing power and is "almost impossible" on mobile devices, Shih says; it's the current bottleneck, though the team is now looking at alternatives. The second part involves Laplacian pyramids and image filtering, which can already be done in real time on megapixel images on an iPad. Meanwhile, the source images being analyzed for style are around 1300 x 900 pixels, a size Shih suggests should be fine on an iPad but could be a struggle to process on an iPhone.
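
For readers curious what a Laplacian pyramid is, here is a minimal Python sketch of the general idea: an image is split into a stack of detail bands at progressively coarser scales, plus one small low-frequency residual, and can be rebuilt by adding the bands back together. This is not the researchers' code. It assumes a grayscale image held in a NumPy array, and it swaps in simple 2x2 box averaging where a real implementation would normally use Gaussian filtering. All function names are made up for illustration.

```python
import numpy as np

def downsample(img):
    """Halve each dimension by averaging 2x2 blocks (a box filter, an assumption;
    Gaussian smoothing is the more usual choice)."""
    h, w = img.shape
    # Pad odd-sized images by repeating the last row/column so blocks divide evenly.
    padded = np.pad(img, ((0, h % 2), (0, w % 2)), mode="edge")
    ph, pw = padded.shape
    return padded.reshape(ph // 2, 2, pw // 2, 2).mean(axis=(1, 3))

def upsample(img, shape):
    """Double each dimension by pixel repetition, then crop to the target shape."""
    up = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)
    return up[:shape[0], :shape[1]]

def build_laplacian_pyramid(img, levels):
    """Return `levels` detail bands followed by one low-frequency residual."""
    pyramid = []
    current = img.astype(np.float64)
    for _ in range(levels):
        smaller = downsample(current)
        # Each band holds the detail lost when going to the coarser scale.
        pyramid.append(current - upsample(smaller, current.shape))
        current = smaller
    pyramid.append(current)
    return pyramid

def reconstruct(pyramid):
    """Invert the decomposition by adding bands back from coarse to fine."""
    current = pyramid[-1]
    for band in reversed(pyramid[:-1]):
        current = upsample(current, band.shape) + band
    return current

if __name__ == "__main__":
    # A random stand-in for a roughly 1300 x 900 photo (900 rows, 1300 columns).
    image = np.random.default_rng(0).random((900, 1300))
    bands = build_laplacian_pyramid(image, levels=4)
    print([b.shape for b in bands])
    print(np.allclose(reconstruct(bands), image))
```

Because every band is just a subtraction and every step works on a shrinking image, the decomposition is cheap, which fits Shih's comment that this half of the pipeline is not the bottleneck.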

SOURCE YiChang Shih