NVIDIA's New AI Eats Words, Spits Out Photos And Feels Borderline Magical

NVIDIA has a generative art system that uses AI to turn words into visually spectacular works of art. This isn't the first time this sort of concept has been proposed, or even produced. It is, however, the first time we've seen such a system work with such incredible speed and precision.

You can take a peek at OpenAI to see a project called DALL·E. That's an image-generating project based on GPT-3, which you can learn about in a paper hosted on arXiv, over at Cornell University. You can start to make wild interpretations of styles with Deep Dream Generator, or learn about some of the source for the NVIDIA Research project we're looking at today: see the paper Generative Adversarial Networks to learn about GANs!

NVIDIA's Project GauGAN2 builds on what the company's researchers created with NVIDIA Canvas. That application, in beta at the moment, works with the first GauGAN model. With artificial intelligence at their disposal, anyone can generate a relatively realistic-looking work of art from input no more elaborate than a finger painting.
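That finger-painting input is, in effect, a label map: each region of the canvas is tagged with a material such as sky or water, and the model fills in the detail. Here's a minimal sketch in Python of what such a map might look like. The label ids, the grid size, and the `blank_map`, `paint_rect`, and `label_counts` helpers are all made up for illustration; none of this is NVIDIA's actual format or API.

```python
# Hypothetical label ids, chosen for illustration only.
LABELS = {"sky": 0, "mountain": 1, "water": 2}


def blank_map(width, height, label):
    """Return a height x width grid filled with one label id."""
    return [[LABELS[label]] * width for _ in range(height)]


def paint_rect(seg_map, label, top, left, bottom, right):
    """Fill rows top..bottom-1 and columns left..right-1 with a label id."""
    for row in range(top, bottom):
        for col in range(left, right):
            seg_map[row][col] = LABELS[label]
    return seg_map


def label_counts(seg_map):
    """Count how many cells carry each label name."""
    names = {label_id: name for name, label_id in LABELS.items()}
    counts = {}
    for row in seg_map:
        for cell in row:
            counts[names[cell]] = counts.get(names[cell], 0) + 1
    return counts


# An 8x6 "finger painting": sky on top, a mountain block, water along the bottom.
seg = blank_map(8, 6, "sky")
paint_rect(seg, "mountain", 2, 2, 4, 6)
paint_rect(seg, "water", 4, 0, 6, 8)
counts = label_counts(seg)

for row in seg:
    print("".join(str(cell) for cell in row))
```

Each digit in the printed grid stands for one material. A real model would take a far larger map and turn every labeled region into photo-like texture.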

With GauGAN2, NVIDIA researchers expanded what's possible with simple input and artificial intelligence interpretation of said input. The model was trained on approximately 10 million high-quality landscape images, which serve as its bank of visual knowledge, and it draws upon that bank to decide what your words could mean in a work of art.

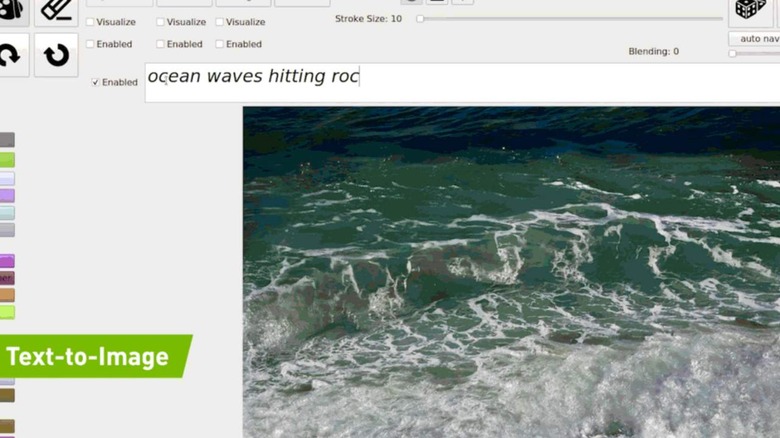

A single GAN framework in GauGAN2 handles several modalities. NVIDIA points to text, semantic segmentation, sketch, and style. Below you'll see a demonstration of this new text input element in an interface that's essentially an extension of NVIDIA Canvas.

The demonstration is far less important than what it represents. A smartphone can now magically erase elements in a photo. If you're using a system like Google Photos, artificial intelligence is already in your life, growing smarter as you feed it more images captured by your phone.

The next wave is here. NVIDIA's demonstration shows us that the machine doesn't just know how to identify elements in photos; it knows how to generate imagery based on its knowledge of the images it has been fed. NVIDIA has a model here that's effectively showing us that graphics processing power and the right code can generate shockingly reliable representations of what we humans interpret as reality.