NVIDIA A100, The First Ampere GPU, Is An Absolute Monster

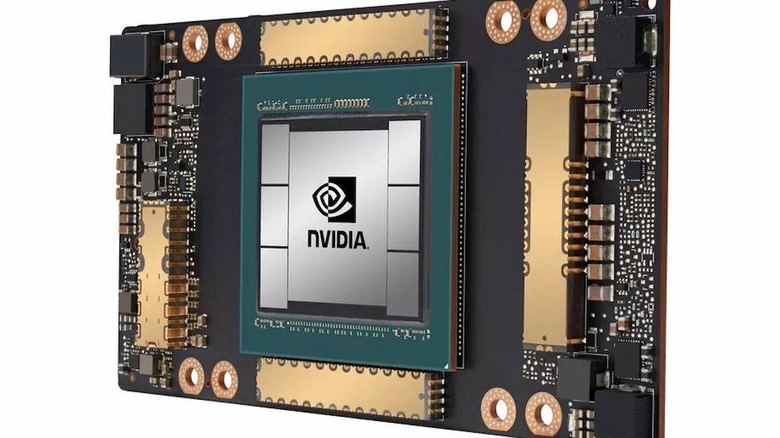

NVIDIA made a lot of announcements during its GTC 2020 keynote today, but many of them center on the company's new Ampere GPU architecture. Ampere has been rumored for quite some time, and the first GPU to use the architecture, the NVIDIA A100, is quite the capable piece of hardware. Before you get too excited, though, you should know that the A100 definitely isn't a consumer card for gaming PCs or workstations.

Instead, the A100 is an enterprise card for data centers and heavily GPU-intensive tasks, whether that's data analytics or deep learning. In that regard, the A100 follows in the footsteps of the Volta- and Pascal-based data center cards that came before it, particularly when it comes to its beefy specifications.

The A100 is built on TSMC's 7nm process and crams in an insane 54 billion transistors. It serves up 6,912 CUDA cores, and NVIDIA says the GPU's third-generation Tensor cores deliver up to a 20-fold increase in deep learning training performance over the previous generation. The A100 also packs a whopping 40GB of HBM2 memory, with bandwidth that tops out at 1.6TB/sec. Finally, the A100 supports Multi-Instance GPU (MIG) technology, which lets users partition a single card into as many as seven instances to better tailor performance to the workload.

So, looking at that spec sheet, it's clear that even if someone wanted to put this in a gaming rig or workstation, they'd have a difficult time paying for it. We don't know how much a standalone A100 will cost, but NVIDIA is offering corporations DGX A100 systems, each packing eight A100s, at a starting price of $199,000.
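For a rough sense of scale, dividing the DGX A100's $199,000 starting price by its eight GPUs gives a per-GPU share of the system cost. This is only a back-of-the-envelope figure, not the price of a standalone A100: the system price also covers everything else in the box, such as CPUs, networking, and storage.

```python
# Back-of-the-envelope: each GPU's share of the DGX A100 starting price.
# NOT the standalone A100 price; the system price also covers the
# rest of the machine (CPUs, networking, storage, etc.).
DGX_A100_START_PRICE_USD = 199_000
GPUS_PER_DGX = 8

per_gpu_share = DGX_A100_START_PRICE_USD / GPUS_PER_DGX
print(f"${per_gpu_share:,.0f} per GPU")  # prints "$24,875 per GPU"
```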

That's a ton of money, but NVIDIA says it already has some big customers lined up to use these GPUs, including Google, Microsoft, and Amazon. Presumably, we'll eventually see the Ampere architecture make its way to consumer RTX cards, but for now, it seems to be the domain of ultra-high-end supercomputers and cloud computing companies.