Mr Zuckerberg, Tear Down This Wall

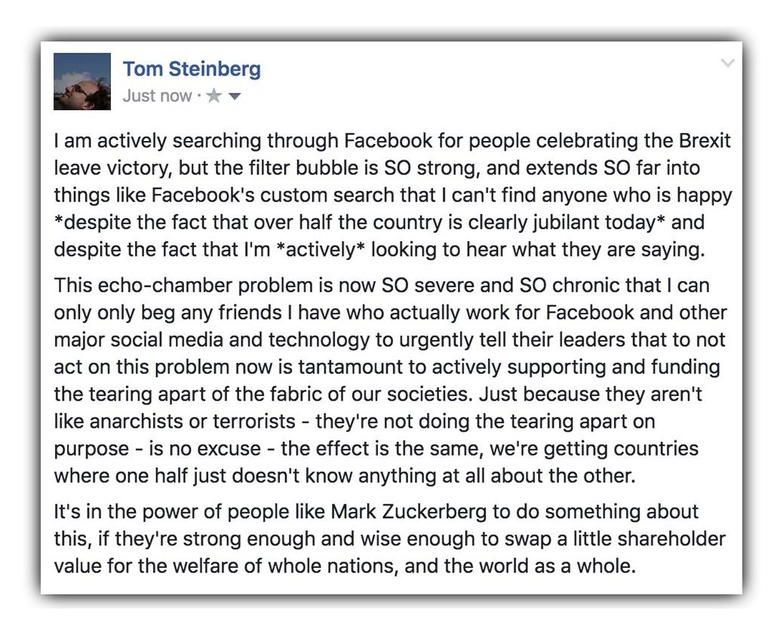

Today we need to cut the crap. This nonsense has gone too far. I'm speaking of the Echo Chamber: the search engine optimization and the filtering of the content I see on the internet. Not only what I see, but what my family and my friends see as well. This point was driven home to me this morning by a fellow named Tom Steinberg who, earlier today, tried to find anyone on Facebook expressing happiness with the results of the "Brexit" vote, which came in overnight. He couldn't find any. That's not real. At least, it's not realistic. At most, it's extremely dangerous.

I don't want to see opinions that match with mine exclusively. That's not the kind of world I want to live in. That's a safe space. If I want to go to a safe space, I'll take a nap.

Dear search engine and social network: stop automatically choosing and tuning the content I see based on what I've searched for in the past.

You don't need to remind me what I like.

That's my job, not yours.

ABOVE: Echo Chamber by Christophe Vorlet, 2016. BELOW: Echo Chamber by Hugh McLeod, 2010.

I know there are options, in some cases, in web browsers and on social networks where I can block the tracking that goes on. I know ways to get around these artificial limits of what I'm able to see – but most people don't.

In Facebook's case, I don't know how to get around this limit.

Even if I did find a way to search Facebook without basic search modifiers applied according to my past searches, I honestly don't believe I'd be getting a set of unfiltered results.

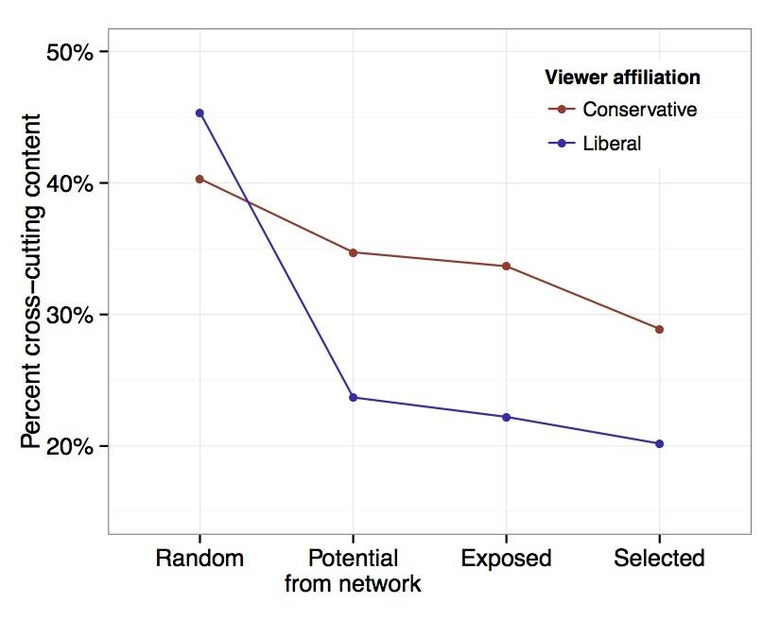

A research paper from Facebook titled "Exposure to ideologically diverse news and opinion on Facebook" was published in 2015. This paper can be found in full at CNStudioDev via Science.

In this paper, you'll find out why Facebook's "Echo Chamber" is your fault.

ABOVE: The diversity of content (1) shared by random others (random), (2) shared by friends (potential from network), (3) actually appearing in people's News Feeds (exposed), and (4) clicked on (selected), via the Facebook research paper.

In this paper, the authors assert that "we do not pass judgment on the normative value of cross-cutting exposure—though normative scholars often argue that exposure to a diverse 'marketplace of ideas' is key to a healthy democracy." This point references the text Political Decision Making, Deliberation and Participation, Volume 6, pages 151-193 by T. Mendelberg.

They go on, however, to suggest that "a number of studies find that exposure to cross-cutting viewpoints is associated with lower levels of political participation."

Do I not want to see opposing viewpoints? Will this discourage me from speaking or thinking about political discourse at all?

ABOVE: Fig. 1. Distribution of ideological alignment of content shared on Facebook as measured by the average affiliation of sharers, weighted by the total number of shares. Content was delineated as liberal, conservative, or neutral based on the distribution of alignment scores.

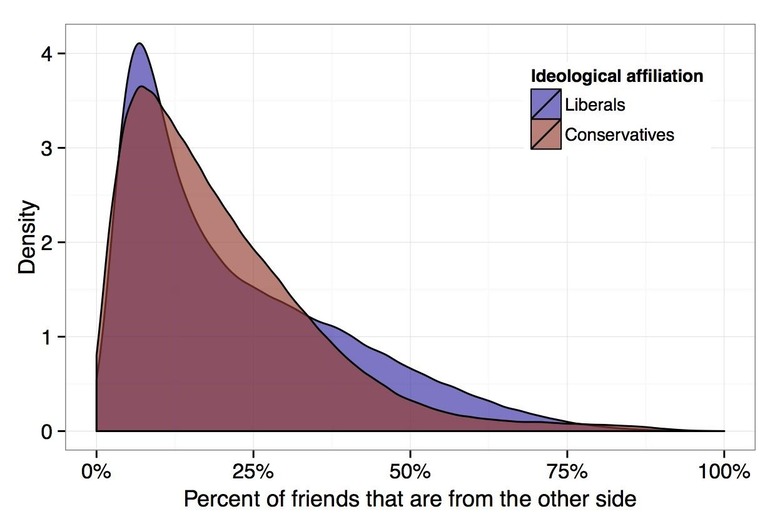

Facebook's study suggested that while its content-display algorithms do play SOME part in this, the users – you and I – play a far greater part in what we see.

"Regardless," said the study, "our work suggests that the power to expose oneself to perspectives from the other side in social media lies first and foremost with individuals."

You don't say?

Why not axe the algorithms altogether and do away with your contribution to the problem, then, Facebook?

I don't necessarily believe anyone has real access to this mythical idea of unfiltered search results, or to an unedited feed of content from one's peers.

But I want it.

I want to see what's really happening. I want to see popular opinions as well as dissenting opinions. I want to be able to search the internet without seeing results from webpages I've visited before smothering the rest.

I want the mix – the real mix of everyone's thoughts and views, that is – that I was promised at the dawn of the social network. I don't want to have to bypass built-in filters in the networks I use most just to find someone who disagrees with me.

I propose a "Grown Up" version of every one of our social networks and search engines. Even if all we're able to get is a version with everything but the illegal content, that'd certainly be a start.