MIT Scientists Improve Sensory Perception For Soft Robotic Grippers

Scientists at MIT are researching soft robotics, a field that builds robots from flexible materials rather than traditional rigid ones. The capabilities of soft robots have been limited by a lack of good sensing. A robotic gripper needs to feel what it is touching and sense the position of its fingers, a capability that has been missing from most soft robots. MIT researchers have published a pair of new papers outlining tools that let robots better perceive what they're interacting with. Specifically, the papers describe new methods that allow the robots to see and classify items while handling them with a softer, more delicate touch. The team says that the soft robotic hands have sensor-laden skins that allow them to pick up objects ranging from fragile items, such as potato chips, to heavy items, such as milk bottles.

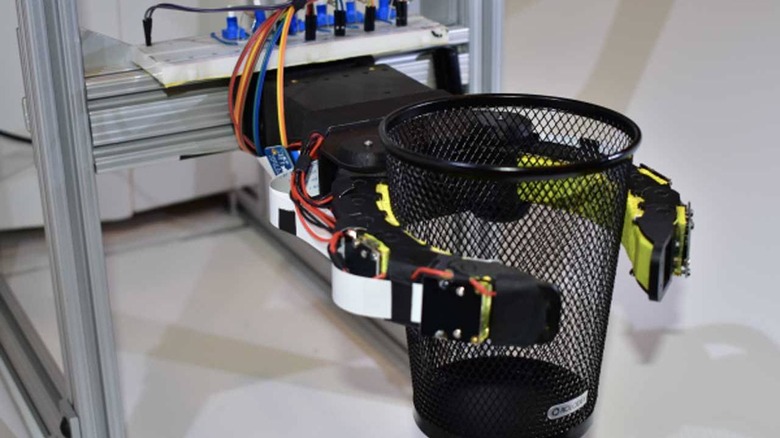

One of the papers builds on research conducted last year by MIT and Harvard University. In that research, the team developed a soft robotic gripper built as a cone-shaped origami structure. It was able to collapse on objects to pick up items as much as 100 times heavier than its own weight. The team has now added tactile sensors, made from latex bladders connected to pressure transducers, to that origami structure.

The new sensors allow the gripper to pick up objects and classify them, letting the robot better understand what it's picking up. The sensors correctly identified ten objects with over 90% accuracy, even when an object slipped out of the gripper's grasp. The second paper describes a soft robotic finger called "GelFlex" that uses embedded cameras and deep learning to enable high-resolution tactile sensing and awareness of the positions and movements of the robot's body.

The gripper looks like a two-finger cup gripper you might see at a soft drink station. It uses a tendon-driven mechanism to actuate the fingers. When tested on metal items of various shapes, the system accurately recognized over 96% of the objects.