Misty's Robotics Master Plan Is More Android Than R2-D2

Misty II doesn't look too pleased to see me when I sit down in front of the new platform robot, and even with only a pair of cartoon eyes to express itself, there's no doubting the glare. A stroke across the touch-sensors on the back of its head is enough to make it coo with pleasure, however. I feel like I may have begun what could be an interesting friendship, though for the moment I'm not the person Misty Robotics is trying to convince.

While it may be available to purchase from today, Misty II doesn't have to win me over, not yet anyhow: first it has developers in its sights. After all, nobody said that making robots was easy, and in a segment punctuated by startups with big dreams that went on to fizzle out, Misty Robotics has a challenge ahead of it.

"We're not trying to figure out the killer app for Misty out of the gate," Ian Bernstein, founder and head of product at Misty Robotics, explained to me ahead of today's launch. The startup, based in Boulder, CO., has watched other attempts to break robotics into the consumer space, and Bernstein believes they've charted a safe course that learns from others' mistakes.

That started with a recognition that, for many projects, "people are just building robots over and over," Bernstein suggests. "Outside of industrial, nobody has really progressed robotics. You buy a bunch of components, you build a platform – just like a couple of motors with wheels, there's no intelligence in it."

That ends up taking so much time, investment, and effort, he argues, that projects never get around to actually figuring out what the robots should do, and how they'll do it. Misty, in contrast, focuses on being relatively affordable and prioritizes flexibility. "How can we give people a capable platform," Bernstein asks, "that has enough sensors, that can do enough?"

The result is a robot that looks like the cutesy, friendly companion you might expect from a Pixar movie, but that's readily programmable and modular. Misty II isn't a toy, and anybody ordering one for $2,899 between now and the end of the year hoping it will be the best holiday gift for their kids may be disappointed when it doesn't behave like Wall-E out of the box. What it does, however, is give a significant head-start on creating robotic skills.

Out of the box, there's a display for showing different eye graphics, various cameras and other sensors for object avoidance, tracks on the bottom so that Misty II can roam around, and two flipper-like arms that can spin about. On the back, a magnetic "backpack" can add a breakout board, compatible with Arduino so that any shield could be slotted on. Bernstein expects Misty II buyers to develop their own custom backpacks.

To make that as painless as possible, Misty Robotics is offering up all the CAD models for the external shell pieces. It's also offering the specific limb joints onto which different parts could be grafted, to take into account the potential for tolerance issues on 3D printing. Misty II even has a trailer hitch on the back, so that the robot could tow something around.

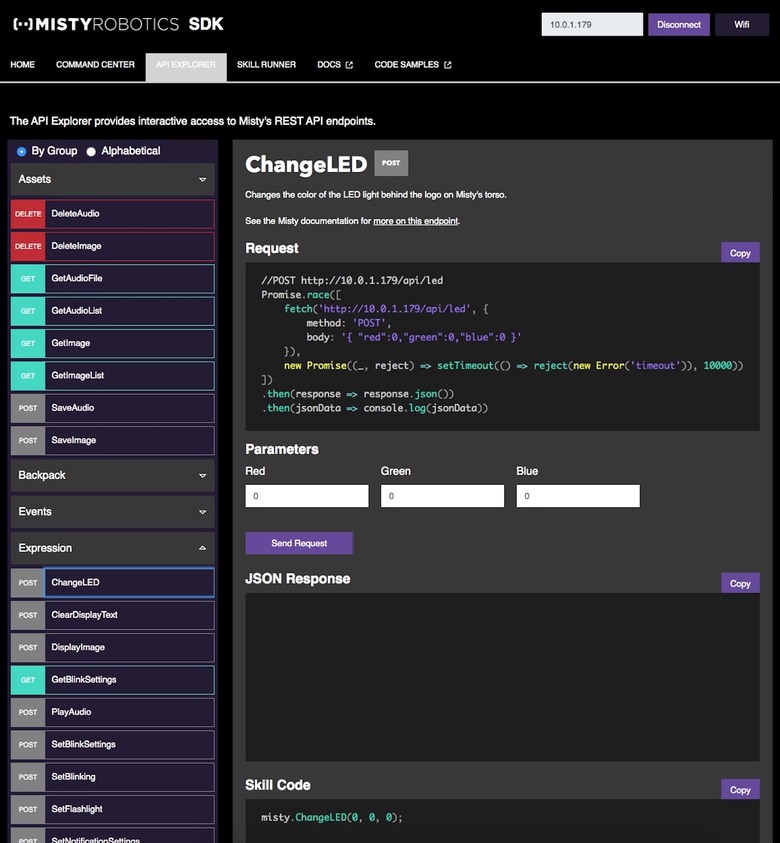

"We're just supporting developers in different spaces," Bernstein explains, and trying to do that as broadly as possible. Misty skills are programmed with Javascript, so that the barrier to entry is low for web and app developers, then loaded into the robot via a web interface. A web-based controller allows you to remotely pilot Misty II, but also generates the necessary code to interface with its sensors, microphones, speakers, and other parts. That can then simply be dropped into your own robot skill program.

A key difference from other robot startups is that Misty is intentionally not trying to do everything itself. "We're super agnostic," Bernstein says, whereas "a lot of other robot companies out there tried to build their own systems."

If you want to use Microsoft Cognitive Services for its computer vision APIs, therefore, you can: it's easy to make a skill that shares with them what Misty's cameras see, for example, and then gets back an estimate of age, gender, and facial expression. If you want your Misty skill to speak, you could use Amazon's text-to-speech engine; if you'd rather tap Google for natural language processing, that's straightforward too.
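To make that "agnostic" approach concrete, here is a minimal sketch of how such a skill might be structured. It is not Misty's actual API, nor any cloud vendor's: the `camera`, `vision`, and `speech` objects and their `takePicture`, `analyzeFace`, and `say` methods are hypothetical names invented for illustration, and stub providers stand in for the robot and the cloud services so the example runs on its own.

```javascript
// Hypothetical sketch: none of these names come from Misty's SDK
// or from any cloud provider's real API.

// Turn a face estimate into a sentence the robot could say.
function describeFace(estimate) {
  if (!estimate) {
    return "I can't see anyone right now.";
  }
  const { age, expression } = estimate;
  return `You look about ${Math.round(age)} and you seem ${expression}.`;
}

// The skill depends only on three small interfaces, so the camera,
// vision service, and speech engine can each be swapped independently.
async function greetVisitor({ camera, vision, speech }) {
  const image = await camera.takePicture();
  const estimate = await vision.analyzeFace(image);
  const sentence = describeFace(estimate);
  await speech.say(sentence);
  return sentence;
}

// Stub providers (assumptions) replace the robot and cloud services.
const stubCamera = { takePicture: async () => "fake-image-bytes" };
const stubVision = {
  analyzeFace: async () => ({ age: 34.2, gender: "female", expression: "happy" }),
};
const spoken = [];
const stubSpeech = { say: async (text) => { spoken.push(text); } };

greetVisitor({ camera: stubCamera, vision: stubVision, speech: stubSpeech })
  .then((sentence) => console.log(sentence));
```

In a real skill, each stub would be replaced by a thin wrapper around whichever service the developer prefers; the skill logic in `greetVisitor` would not need to change.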

The goal is to build up a cache of third-party Misty skills that could either act as a standalone reason for someone to buy a robot, or together persuade a consumer that the time is right to pick one up themselves. Misty Robotics' role in that would be some blend of hardware specialist and white-label supplier, providing the robot so that skill-makers wouldn't need to wade into manufacturing. Already, even with just some early backers with Misty II on their workbenches, some companies are talking about how they could deploy robots running their skills in the hundreds.

There'll be times, of course, when Misty II as it exists now isn't the right form factor. Maybe an elder care facility doesn't need the robot to have its own mobility, or a store needs a robot that's raised to eye-level with customers as they come in. "Depending on quantities," Bernstein says, "we could easily depopulate certain things if they weren't needed," like the tracks, for example. The company also has manufacturing partners who could scale up production to meet specific hardware requirements.

Down the line, Misty Robotics is open to a model similar to the one Google has adopted with Android: licensing out its software to other manufacturers, while also developing robots of its own, much like Google does with its Pixel range of phones and laptops. For now, Bernstein says, unlike ill-fated efforts like Mayfield Robotics' Kuri and Jibo, Misty is 100-percent focused on developers and companies wanting to make apps. "At some point we need to go to consumers," he agrees, "but we need to have enough skills first."

For now, early adopters are in areas like elder care and education, though Misty has also proved to be an unexpected hit in libraries and as a potential "virtual concierge" in venues like stadiums. Bernstein expects it to be three to six months before the first Misty skills start to break cover, after developers have a chance to get to grips with the robot's capabilities.