Microsoft Project Artemis Will Scan Chats For Signs Of Child Exploitation

Just as the Internet empowered people by connecting them to knowledge and to one another, it has sadly also empowered less conscientious individuals to carry out illegal activities anonymously. Sexual exploitation, especially of minors, is a long-standing problem for both authorities and Internet companies, and its complexity defies conventional solutions. Microsoft is rising to the challenge with a new tool called Project Artemis, which will look for signs of online grooming that could lead to child exploitation.

You might associate the word "grooming" with cleaning up, but online grooming is something far darker. It uses deceptive or indirect techniques to lull children into a false sense of security, only to abuse them later online.

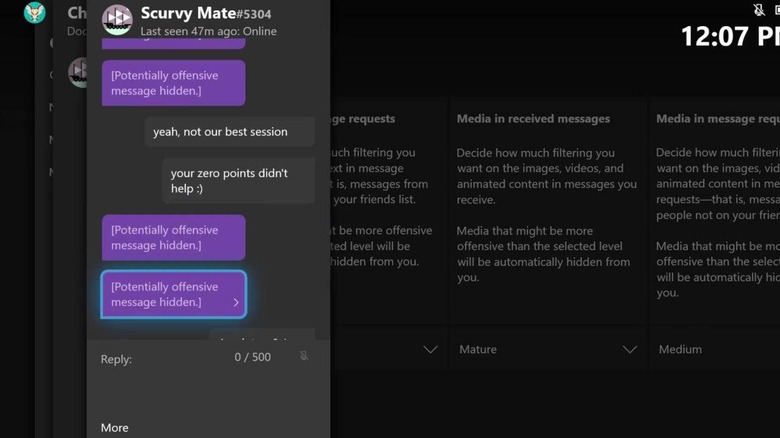

Much of this grooming involves chatting with the victim, and those conversations turn out to be a treasure trove for Microsoft to let loose its AI hounds on. Microsoft doesn't go into Artemis' technical details beyond saying that it is applied to historical chat text, a.k.a. chat logs, and rates each conversation according to its probability of containing child grooming. Chat companies using the technology could then look at a flagged conversation, decide whether it should be sent for human review and, if necessary, alert authorities.

Microsoft says it has implemented the technology on Xbox for years and might soon apply it to Skype. It is also licensing the technology via Thorn, a nonprofit that aims to build technology to combat the sexual abuse of minors.

There may be some concerns that Project Artemis could be used to violate user privacy, though the balance between privacy and policing illegal online activity has always been a thorny subject. The tool should at least give potential offenders pause for thought, but it should not be used as a cover to compromise innocent people's privacy. As always, a careful balance is needed when putting such tools into companies' hands.