Lookout App Now On Pixel Phones To Help The Blind See With AI

When people hear about AI, they usually think either of disembodied personal assistants or, worse, Skynet. AI, however, has far more varied applications, including the computer vision technology that powers the likes of Google Lens. Google is now putting that same AI technology at the service of the people who may need it the most. With the Lookout app, those with impaired vision will no longer have to play a guessing game or ask someone to help read crucial and maybe sensitive documents.

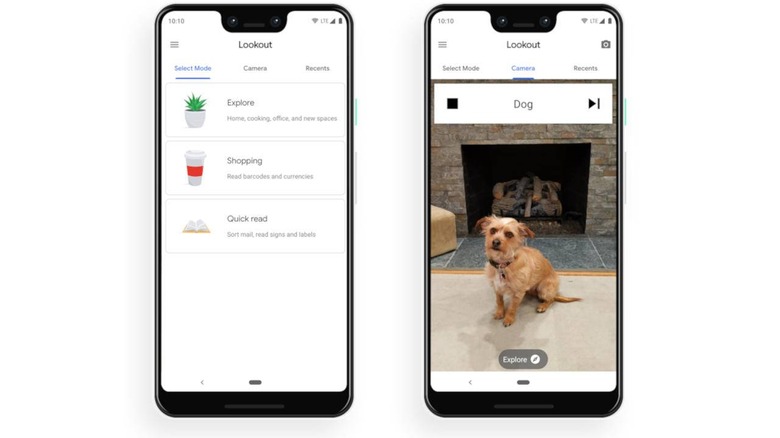

Think of it as a specialized application of Google Lens, one that not only identifies real-world objects but also announces them out loud for the user to hear. More than just augmenting what you're already seeing, Lookout is designed to be the eyes some people may not have at all.

The app was designed to be simple to use, since those who will be using it might not be able to comfortably navigate through menus and options. Simply launch the app and keep it pointed forward, and it will identify objects seen by the camera and speak them out loud. Google recommends hanging the phone from a lanyard or placing it in a front shirt pocket with the camera facing out. Earphones might be a necessity, too, when using it outdoors.

The app does have other features for those who still have some vision. There's the default Explore mode for identifying objects, a Shopping mode for scanning barcodes and money, and Quick Read for mail, labels, and other text-heavy objects.

Announced back at Google I/O last year, Lookout is now available on Google Pixel phones in the US. Google doesn't mention any plans for expanding it to other Android phones. And as helpful as the app may be, Google warns that Lookout isn't 100% perfect but, like any AI-based technology, will improve over time as it learns, even from its mistakes.