iOS 12 Multi-User ARKit 2.0: Here's What's Special

Apple's multi-player support for augmented reality will go beyond the two-player games the company showed off during the WWDC 2018 keynote. ARKit 2.0 in iOS 12 brings unexpectedly comprehensive, and private, ways to use AR. Arriving on the iPhone and iPad later this year, iOS 12's updated augmented reality system relies on new, persistent scans of the real world that Apple will let devices share securely.

ARKit, Apple's take on augmented reality, launched last year as part of iOS 11. Although only a first-generation release, it shook up the mobile AR category. Google, for example, moved away from its Tango strategy of smartphones and tablets with complex AR sensor arrays, and shifted instead to a more software-heavy approach, ARCore, which runs on regular phones with more typical cameras.

Now, Apple is readying developers for ARKit 2.0, most notably with the addition of multi-player support and persistent scans of physical spaces. Saving and loading maps is part of ARKit's world tracking, the system that works out the device's position and the relative location of 3D objects. The same system controls the physical scale of virtual objects, so that they match the 3D feature points of the scene.

iOS 11.3 added relocalization to ARKit. That allowed an interrupted AR session to relocalize itself: if you had switched to another app, for instance, and then wanted to resume the augmented reality experience. Relocalization relied on a map of the 3D environment, but that map was kept only as long as the AR session was alive.

In ARKit 2, that virtual map of physical 3D space is preserved. Dubbed the World Map, it also keeps named anchors (fixed points in the real world) along with raw feature points, which help match the virtual map to the real space. Each World Map can now be saved independently, too.

This means Apple can now support persistence in augmented reality experiences. If you left a virtual cup on a physical table, for example, ARKit can load the same World Map when you return to that location and find the cup still there. Previously, an app built on ARKit had no way to recall that specific location.
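On a device, an app would capture the map with ARSession's `getCurrentWorldMap(completionHandler:)`, archive the resulting `ARWorldMap` with `NSKeyedArchiver` (the class supports secure coding), and later restore it by setting `initialWorldMap` on an `ARWorldTrackingConfiguration` before running the session. Because that needs ARKit hardware, the minimal sketch below models the save-and-reload idea in plain Swift instead. `WorldMapSnapshot`, `NamedAnchor`, `Point3` and the JSON file format are hypothetical stand-ins, not ARKit types.

```swift
import Foundation

// Hypothetical stand-in for a 3D point, in meters.
struct Point3: Codable, Equatable {
    var x: Double, y: Double, z: Double
}

// Hypothetical stand-in for a named anchor: a fixed point in the real world.
struct NamedAnchor: Codable, Equatable {
    var name: String
    var position: Point3
}

// Hypothetical stand-in for ARKit's ARWorldMap: named anchors plus raw feature points.
// A real app would archive the ARWorldMap itself with NSKeyedArchiver.
struct WorldMapSnapshot: Codable, Equatable {
    var anchors: [NamedAnchor]
    var rawFeaturePoints: [Point3]
}

// Persist a snapshot so a later session can restore it.
func save(_ map: WorldMapSnapshot, to url: URL) throws {
    let data = try JSONEncoder().encode(map)
    try data.write(to: url, options: .atomic)
}

// Read a previously saved snapshot back from disk.
func load(from url: URL) throws -> WorldMapSnapshot {
    let data = try Data(contentsOf: url)
    return try JSONDecoder().decode(WorldMapSnapshot.self, from: data)
}

// Usage: leave a virtual cup on a table, save the map, then "return" and reload it.
let table = WorldMapSnapshot(
    anchors: [NamedAnchor(name: "cup", position: Point3(x: 0.2, y: 0.75, z: -1.0))],
    rawFeaturePoints: [Point3(x: 0.0, y: 0.74, z: -1.0), Point3(x: 0.4, y: 0.74, z: -1.0)]
)
let url = FileManager.default.temporaryDirectory
    .appendingPathComponent("table.worldmap.json")
try save(table, to: url)
let restored = try load(from: url)
print(restored.anchors.first?.name ?? "none")  // prints: cup
```

The anchors travel with the feature points in one saved object, which is what lets the cup reappear in the same spot once the map is reloaded.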

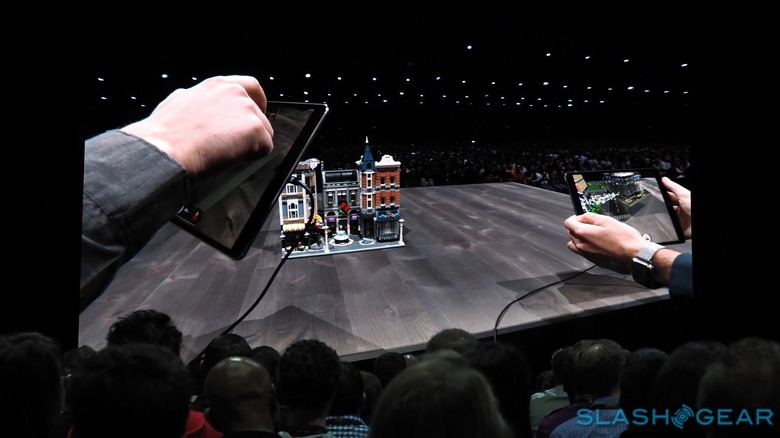

The World Map is also used for multi-user experiences, and not just for two users but for many. A single user can create a World Map with their iPhone or iPad and then share it with multiple other people. Apple showed this off as a two-player game in the WWDC keynote, but ARKit 2.0 actually supports many people collaborating on the same World Map. Indeed, there's no specific limit on the number of participants.

The sharing itself can be done over Wi-Fi or Bluetooth, so AR sessions don't depend on cellular connectivity. When an app loads a World Map, it uses the same relocalization process as before to work out where the device is within that existing map. Of course, some factors affect how readily and accurately that happens: it's easier if the environment is static and well-textured, with dense feature points on the map. Having multiple points of view contribute to the World Map helps, too.
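Apple's sample code for multiuser AR uses the Multipeer Connectivity framework, whose `MCSession` method `send(_:toPeers:with:)` can push an archived map to every connected peer over Wi-Fi or Bluetooth. The sketch below strips that down to plain Swift so it runs anywhere: `SharedMap`, `Participant` and `broadcast` are hypothetical names, and a direct method call stands in for the local network link. It illustrates why there's no fixed participant limit: the host serializes the map once, and the same bytes can go to any number of peers.

```swift
import Foundation

// Hypothetical stand-in for a serialized world map (on device: an archived ARWorldMap).
struct SharedMap: Codable {
    var anchorNames: [String]
    var featurePointCount: Int
}

// Hypothetical participant that receives bytes over some local link.
final class Participant {
    let name: String
    private(set) var map: SharedMap?

    init(name: String) { self.name = name }

    // Decode the received bytes; a real app would then relocalize against the map.
    func receive(_ data: Data) throws {
        map = try JSONDecoder().decode(SharedMap.self, from: data)
    }
}

// Encode the map once, then deliver the same bytes to every participant.
// Nothing here caps the number of participants.
func broadcast(_ map: SharedMap, to participants: [Participant]) throws {
    let data = try JSONEncoder().encode(map)
    for participant in participants {
        try participant.receive(data)
    }
}

let hostMap = SharedMap(anchorNames: ["board"], featurePointCount: 1200)
let players = (1...5).map { Participant(name: "player\($0)") }
try broadcast(hostMap, to: players)
print(players.filter { $0.map != nil }.count)  // prints: 5
```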

In practice, that means the World Map is built up dynamically. As users (whether of a game, an educational experience, or something else) scan the environment around them, the World Map is fleshed out. Depending on how comprehensive the scanning has been, apps will find the World Map's status is unavailable, limited, extending, or mapped. Apple's advice to app-makers is to prompt for more thorough scanning if the World Map is unavailable or limited.
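ARKit 2.0 reports this progress through `ARFrame`'s `worldMappingStatus` property, whose cases are `notAvailable`, `limited`, `extending`, and `mapped`. The sketch below mirrors those four cases in a local enum so it runs without a device, and maps each one to a UI decision. `ScanGuidance`, `guidance(for:)` and the prompt text are hypothetical, not ARKit API; treating `extending` and `mapped` as good enough to share is an assumed app policy that follows Apple's advice to prompt for more scanning only in the first two states.

```swift
// Local mirror of ARKit's ARFrame.WorldMappingStatus cases.
enum WorldMappingStatus: CaseIterable {
    case notAvailable, limited, extending, mapped
}

// Hypothetical UI decision for the current mapping status.
enum ScanGuidance: Equatable {
    case keepScanning(String)
    case readyToShare
}

func guidance(for status: WorldMappingStatus) -> ScanGuidance {
    switch status {
    case .notAvailable, .limited:
        // Too little of the scene is mapped: ask the user to keep scanning.
        return .keepScanning("Move your device slowly to scan more of the area.")
    case .extending, .mapped:
        // Enough of the scene is mapped to save or share the World Map.
        return .readyToShare
    }
}

// Usage: on device, the status would come from session.currentFrame?.worldMappingStatus.
for status in WorldMappingStatus.allCases {
    print(status, guidance(for: status))
}
```

Using an exhaustive `switch` means the compiler will flag any status the app forgets to handle.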

Underpinning all of this are other core enhancements to ARKit, including how quickly it can scan the world. iOS 11.3 added continuous autofocus to ARKit, and iOS 12 optimizes that process for AR. There's faster initialization and plane detection, and more accurate detection of edges and boundaries.

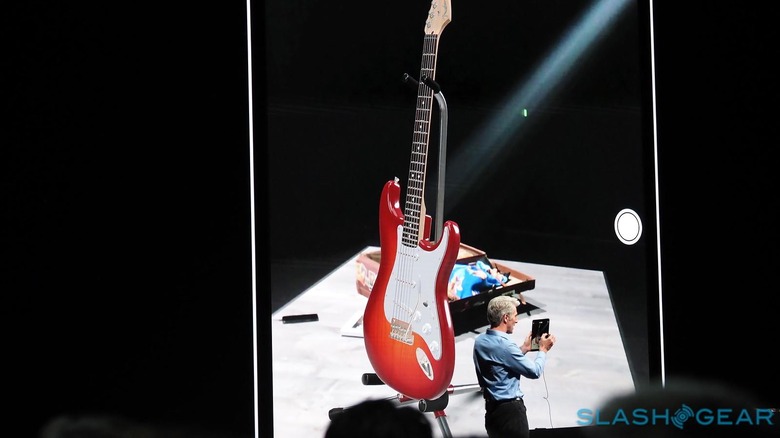

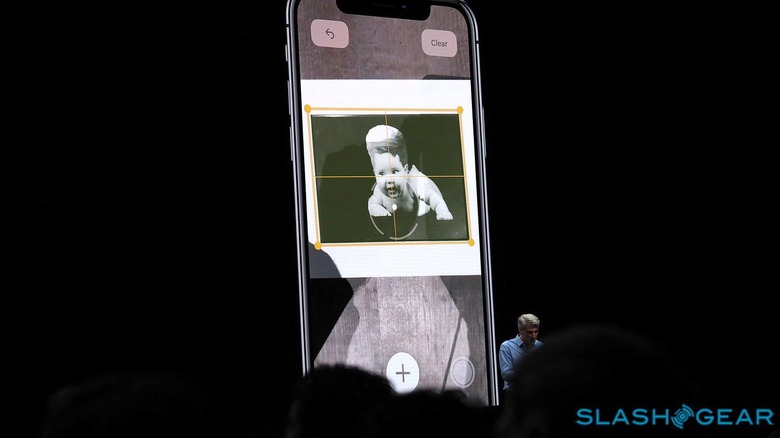

These improvements also feed into ARKit 2.0's newly added 2D image tracking. Images no longer need to be static for augmented reality experiences to identify them in the frame: their position and orientation are tracked every frame, at 60fps. It's also possible to track multiple images simultaneously, rather than just one as in previous versions of ARKit.

Arguably even more useful is the new detection of 3D objects, though unlike 2D images, these objects need to be static. They must be well-textured, rigid, non-reflective, and roughly tabletop-sized. Once scanned, they can be tracked positionally, relative to where the user is standing.

Developers will, of course, need to adopt these new features in ARKit 2.0. That's apparently a matter of adding a few lines of code, though existing single-user AR experiences may need reworking to suit multi-person use.