How Machine Learning Will Be Our Gandalf

In the near future, we'll be using machines to read research papers and make connections humans have not yet made. A paper published in the scientific journal Nature outlined how old scientific research papers contain "latent knowledge" that we, pathetic humans, are not utilizing fully. As an algorithm called Word2vec showed in that study, the connections are there to be made, and they will continue to be made in a BIG way with machine learning!

What we do today

Today we've got an issue – a sort of disconnect between individual humans gathering knowledge and the spreading of that knowledge. Take for example the pyramids of Egypt. At some point, someone knew everything there was to know about these pyramids – someone HAD to know in order for them to be built.

Over the course of time, knowledge was lost. Be it for lack of desire to know or lack of a method for retaining knowledge that would stand the test of time, information was lost. Our collective memory as humans did not retain all there was to know about the pyramids of Egypt.

Today we have methods of retaining knowledge on which future generations can stand and expand. The issue is that each time we learn something new, we interpret and/or transmit said knowledge from our own unique perspective. Even when we're dealing with seemingly objective subject matter, details can get lost in the process.

Gandalf

A pop culture parallel for this situation is Gandalf from Lord of the Rings. "Much that once was is lost," said Galadriel, Elf lady, "for none now live who remember it."

In the library at Minas Tirith, Gandalf sought information from times all but lost to memory. Searching the library and its stacks of papers in disarray, Gandalf made discoveries in already-published papers.

Gandalf re-discovered what'd happened to the Rings of Power, and the One Ring, and basically everything that made the story in Lord of the Rings progress from that point forward. That information was written down, sure, but we needed a Gandalf to go out and find it, read it, and relay the important bits to the world so that we could use said knowledge here, today.

Labeling Better

"Publications contain valuable knowledge regarding the connections and relationships between data items as interpreted by the authors," wrote researcher Vahe Tshitoyan et al. "To improve the identification and use of this knowledge, several studies have focused on the retrieval of information from scientific literature using supervised natural language processing."

This supervised approach generally relies on hand-labelled datasets, which means the data must first be processed by hand. Again, there's potential for missed details in the labelling process. The new research from Tshitoyan and colleagues proposes a different method.

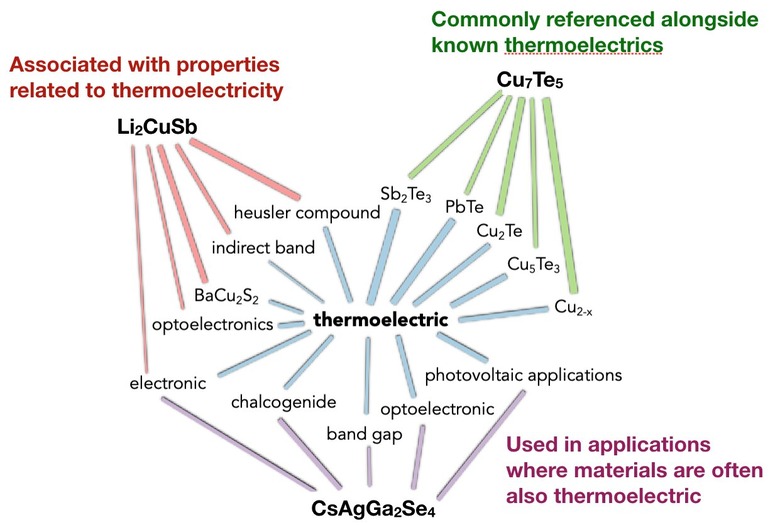

This new method uses materials science knowledge from published research, encoded as "information-dense word embeddings (vector representations of words) without human labelling or supervision." The computer interprets the information and stores it all in one place.
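To make "word embeddings" a little more concrete, here's a minimal sketch in Python. It is not Word2vec and it does not use the team's data: it builds crude word vectors from simple co-occurrence counts over a few made-up sentences (everything in `TOY_ABSTRACTS` is invented for illustration), then compares words with cosine similarity. The spirit is the same, though. Words that show up in similar contexts end up with similar vectors, and nobody labels anything by hand.

```python
import math
from collections import Counter, defaultdict

# Invented mini-corpus standing in for real abstracts (an assumption, not real data).
TOY_ABSTRACTS = [
    "bismuth telluride is a thermoelectric material with low thermal conductivity",
    "lead telluride is a thermoelectric material with high figure of merit",
    "lithium cobalt oxide is a cathode material for batteries",
    "lithium iron phosphate is a cathode material for batteries",
]


def build_vectors(sentences, window=2):
    """Map each word to counts of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            start = max(0, i - window)
            stop = min(len(tokens), i + window + 1)
            for j in range(start, stop):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * b[key] for key, count in a.items() if key in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


vectors = build_vectors(TOY_ABSTRACTS)

# "bismuth" and "lead" share their contexts in this toy corpus; "lithium" does not.
print("bismuth vs lead:   ", cosine(vectors["bismuth"], vectors["lead"]))
print("bismuth vs lithium:", cosine(vectors["bismuth"], vectors["lithium"]))
```

A real Word2vec model learns dense vectors with a small neural network rather than counting neighbours, but the comparison step at the end looks much the same.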

What will happen?

Once the machine begins to capture information, it'll immediately begin to interpret said information. The machine will then potentially "recommend materials for functional applications several years before their discovery."
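How might a machine "recommend" a material? Here's a rough sketch, assuming candidates are ranked by how close their vectors sit to the vector of an application word. The material names, the three-dimensional vectors, and the `rank_candidates` helper are all hypothetical and made up for illustration; real embeddings are learned from text and have hundreds of dimensions.

```python
import math

# Hypothetical toy embeddings. All names and numbers are invented for illustration.
EMBEDDINGS = {
    "thermoelectric": [0.9, 0.1, 0.2],
    "material_a": [0.8, 0.2, 0.1],
    "material_b": [0.1, 0.9, 0.3],
    "material_c": [0.7, 0.3, 0.4],
}


def cosine(u, v):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


def rank_candidates(application, candidates, embeddings):
    """Sort candidate names by similarity to the application word, best first."""
    target = embeddings[application]
    scored = [(name, cosine(embeddings[name], target)) for name in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


ranking = rank_candidates(
    "thermoelectric",
    ["material_a", "material_b", "material_c"],
    EMBEDDINGS,
)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

The materials at the top of a list like this are the ones a researcher might look at first, even if no paper has yet linked them to the application directly.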

According to Tshitoyan, the team's findings suggest that already-published research contains "latent knowledge regarding future discoveries" that'll be turned up by the machine. The machine could be making discoveries years before humans otherwise would, and might turn up connections humans would never have made at all!

"In every research field there's 100 years of past research literature, and every week dozens more studies come out," said study co-author Gerbrand Ceder. "A researcher can access only [a] fraction of that. We thought, can machine learning do something to make use of all this collective knowledge in an unsupervised manner, without needing guidance from human researchers?"

When?

Researchers at the U.S. Department of Energy's Lawrence Berkeley National Laboratory (Berkeley Lab) conducted the research reported in the paper "Unsupervised word embeddings capture latent knowledge from materials science literature," published in Nature on July 3, 2019 (Nature 571, 95-98). Authors include Vahe Tshitoyan, John Dagdelen, Leigh Weston, Alexander Dunn, Ziqin Rong, Olga Kononova, Kristin A. Persson, Gerbrand Ceder, and Anubhav Jain.

Work has already begun, using an algorithm by the name of Word2vec. The system has already been trained on 3.3 million abstracts of published materials science papers, and it's not done, not by a long shot. If you'd like to participate, you might want to start with DeepLearning4j, a library that implements a distributed form of Word2vec for Java and Scala and works on Spark with GPUs. Go forth and teach the machine!