Google's TPU Taking On Intel And NVIDIA For Neural Networking's Future

Google is seeking a new way to make neural networks run efficiently, starting with the TPU. The TPU, or Tensor Processing Unit, is a processor built for neural network workloads, and it invites comparison with Intel's CPUs and NVIDIA's GPUs. Google's news here is its first published study of how well the TPU performs, compared, appropriately enough, against Intel's CPU and NVIDIA's GPU on neural network workloads.

A neural network is a computing system loosely modeled on the way neurons in the human brain and nervous system connect and signal one another. Neural networks are meant to let computers recognize patterns and solve problems in ways that were previously possible only for the human brain. Google uses neural networks for a wide variety of applications.

Saying "OK Google" followed by a question or request relies on Google's neural network technology. Google also uses neural networks for machine learning: instead of being explicitly programmed for every case, the system improves by learning from examples, and feedback, such as a user confirming that an answer was correct, can help it produce correct answers more often. Networks built from many stacked layers of artificial neurons are called deep neural networks, and training them is known as deep learning.

To meet growing demand for neural network computing capacity, Google built its own Tensor Processing Unit. The TPU is an application-specific integrated circuit (ASIC): custom processing hardware. Google's goal, starting in 2013, was to improve cost-performance for deep neural networks (DNNs) by 10x over GPUs, according to Google's paper "In-Datacenter Performance Analysis of a Tensor Processing Unit".

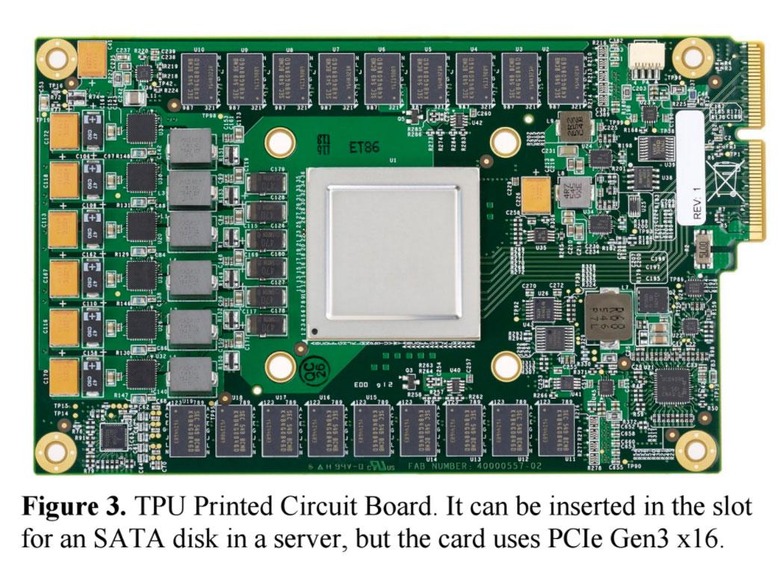

Google's TPU plugs into existing servers over the PCIe I/O bus, just as a GPU does. It is a coprocessor that is not tightly integrated with the CPU. Because the host server sends instructions to the TPU rather than the TPU fetching them itself, Google's development team says the TPU is "closer in spirit to an FPU (floating-point unit) coprocessor than it is to a GPU."
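To make the "host sends, device executes" relationship concrete, here is a minimal toy model in Python. It is an illustrative sketch only, not how the TPU's real PCIe command interface works: the `ToyCoprocessor` class, the `host_run` function, and the two supported ops are hypothetical. The point it shows is that the device has no instruction fetch of its own; it runs only the commands the host places in its queue.

```python
from collections import deque


class ToyCoprocessor:
    """Hypothetical accelerator with no instruction fetch of its own.

    It only executes commands that the host places in its queue,
    loosely like an FPU coprocessor being fed by a CPU.
    """

    def __init__(self):
        self.queue = deque()  # commands written by the host
        self.executed = []    # names of ops run so far, for inspection

    def step(self):
        """Pop one host-supplied command and execute it."""
        op, operands = self.queue.popleft()
        if op == "matmul":
            a, b = operands
            cols = list(zip(*b))
            result = [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]
        elif op == "relu":
            (m,) = operands
            result = [[max(0, v) for v in row] for row in m]
        else:
            raise ValueError(f"unsupported op: {op}")
        self.executed.append(op)
        return result


def host_run(device, program):
    """The host drives the device: it pushes each command, then triggers execution."""
    results = []
    for op, operands in program:
        device.queue.append((op, operands))  # host sends the instruction
        results.append(device.step())        # device runs only what it was sent
    return results


# Usage: the host issues a matrix multiply followed by a ReLU.
device = ToyCoprocessor()
out = host_run(device, [("matmul", ([[1, -2]], [[3], [4]])), ("relu", ([[-5, 6]],))])
print(out)  # [[[-5]], [[0, 6]]]
```

Keeping all control flow on the host side is what makes the device simple: it needs no branch logic or program counter, only a way to receive and run commands.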

Speaking with NextPlatform, Google hardware engineer Norman Jouppi said that Google considered field-programmable gate arrays (FPGAs) before settling on an ASIC. FPGAs are easy to modify, but they do not perform as well as ASICs. The TPU aims to offer the best of both worlds. "The TPU is programmable like a CPU or GPU," said Jouppi. "It isn't designed for just one neural network model; it executes CISC instructions on many networks (convolutional, LSTM models, and large, fully connected models). So it is still programmable, but uses a matrix as a primitive instead of a vector or scalar."
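Jouppi's point about "a matrix as a primitive instead of a vector or scalar" comes down to how much work a single instruction does. The Python sketch below computes the same matrix product three ways and counts the "instructions" each granularity would need. The function names and the instruction-counting convention are hypothetical illustrations, not the TPU's instruction set; Python is obviously not simulating real hardware speed here, only the count of operations issued.

```python
def matmul_scalar(a, b):
    """Compute a @ b using only scalar multiply-adds; count instructions issued."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    issued = 0
    for i in range(n):
        for j in range(m):
            for p in range(k):
                c[i][j] += a[i][p] * b[p][j]  # one scalar multiply-add
                issued += 1
    return c, issued


def matmul_vector(a, b):
    """Each instruction is a whole dot product of one row and one column."""
    cols = list(zip(*b))
    c, issued = [], 0
    for row in a:
        out_row = []
        for col in cols:
            out_row.append(sum(x * y for x, y in zip(row, col)))  # one vector op
            issued += 1
        c.append(out_row)
    return c, issued


def matmul_matrix(a, b):
    """A single matrix-multiply instruction produces the whole result."""
    cols = list(zip(*b))
    c = [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]
    return c, 1


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
for fn in (matmul_scalar, matmul_vector, matmul_matrix):
    result, count = fn(a, b)
    print(fn.__name__, result, count)
# matmul_scalar [[19, 22], [43, 50]] 8
# matmul_vector [[19, 22], [43, 50]] 4
# matmul_matrix [[19, 22], [43, 50]] 1
```

For n x n matrices the counts grow as n^3, n^2, and 1 respectively (as long as the matrix fits the hardware unit), which is why a matrix-level primitive suits neural network layers dominated by large matrix multiplies.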

Google did not choose its hardware to build the most efficient or powerful TPU possible. Instead, it chose hardware that would allow deployment at massive scale. "It needs to be distributed—if you do a voice search on your phone in Singapore, it needs to happen in that datacenter—we need something cheap and low power," said Jouppi. "Going to something like HBM (High Bandwidth Memory) for an inference chip might be a little extreme, but is a different story for training."

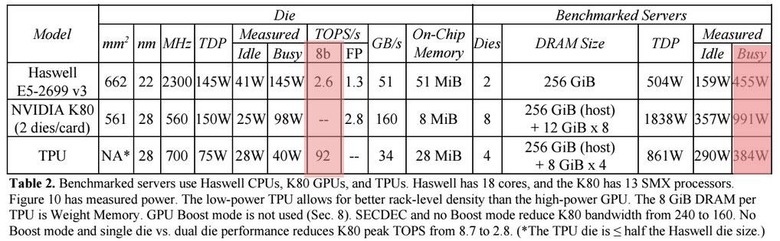

According to the paper cited above, Google's benchmarks show the TPU far outperforming a comparable Intel Haswell CPU while using less power. In Google's tests, the TPU offered a peak of 92 TOPS (tera-operations, or trillions of operations, per second) and 71x more inference throughput than Intel's CPU, with the server drawing 384 watts while busy. The Intel Haswell CPU offered 2.6 TOPS, with its server drawing 455 watts while busy.
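The figures in the paragraph above support some quick back-of-envelope arithmetic. The Python sketch below uses only those quoted numbers. It is a rough illustration, not a reproduction of the paper's performance-per-watt analysis: it simply divides the headline TOPS figures by busy server power, and the constant names are hypothetical.

```python
# Figures as quoted in the article (TOPS = trillions of operations per second).
TPU_TOPS, TPU_SERVER_BUSY_WATTS = 92.0, 384.0
CPU_TOPS, CPU_SERVER_BUSY_WATTS = 2.6, 455.0

# Ratio of the two headline throughput figures.
throughput_ratio = TPU_TOPS / CPU_TOPS

# Naive throughput per watt: headline TOPS divided by busy server power.
tpu_tops_per_watt = TPU_TOPS / TPU_SERVER_BUSY_WATTS
cpu_tops_per_watt = CPU_TOPS / CPU_SERVER_BUSY_WATTS
efficiency_ratio = tpu_tops_per_watt / cpu_tops_per_watt

print(f"throughput ratio: {throughput_ratio:.1f}x")   # throughput ratio: 35.4x
print(f"TPU: {tpu_tops_per_watt:.4f} TOPS/W")         # TPU: 0.2396 TOPS/W
print(f"CPU: {cpu_tops_per_watt:.4f} TOPS/W")         # CPU: 0.0057 TOPS/W
print(f"efficiency ratio: {efficiency_ratio:.1f}x")   # efficiency ratio: 41.9x
```

Note that the roughly 35x here is a ratio of the two TOPS figures, which is not the same measurement as the 71x inference-throughput result, so the two numbers need not agree.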

This is where Google's entry into neural network processing hardware begins. The TPU will likely see a lot more use, and publicity, over the next several years if its efficiency keeps taking on the top guns as it did in this first benchmarking study.