Google's Next Revolution May Cut The Touchscreen Altogether

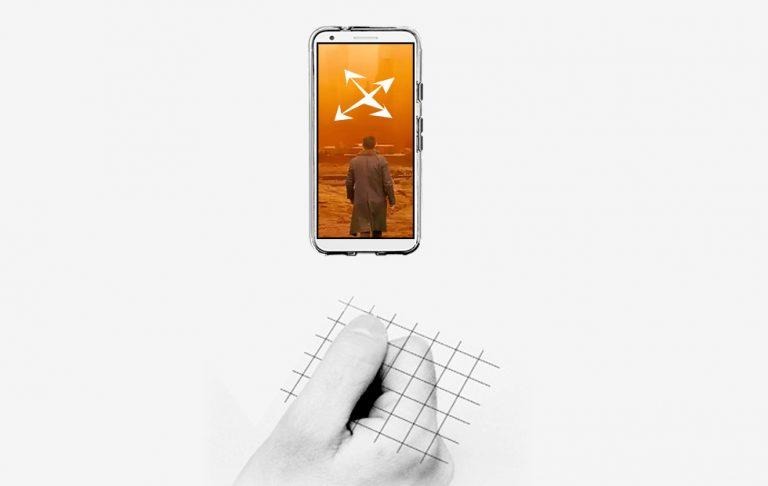

A patent published this week points to Google having a deep knowledge of spatially aware gestures for control of devices of all sorts. We'd known that Google was working on tech to recognize our hands swiping through the air as controls for smartphones – but this is a bit different. This expands upon that – beyond the realm of pre-configured controls and into the world of DIY.

The patent goes by the name "device interaction with spatially aware gestures" and it was filed just this week. The techniques described in the patent suggest Google's working on device interaction that includes "performing gestures that are intuitive to a user." Normally that'd include such simple things as pushing, pulling, and tapping – but this expands on that.

Gesture Learning

Two main concepts are presented by Google that I'd like to point out. The first is the gesture equivalent of Natural Language. The smart device with the abilities Google described in this patent will be able to interpret gestures the user has made up themselves.

Let's say, for example, I want the phone (or whatever other device this is) to turn off when I show a unique double-thumbs-down gesture. I'd set that request up, and the device would ask me to perform the gesture I want to use, and it'd recognize the gesture I've made as the trigger for "turn phone off".

Multi-Device Potential

Google's patent also identifies the potential for multi-device gesture recognition and command. Instead of saying "OK Google, turn on my living room lights," I'd wave my hand at whatever gesture-recognition device is watching for my movements. I'd make a gesture that looks like I'm flipping a switch, for example, and the lights would turn on.

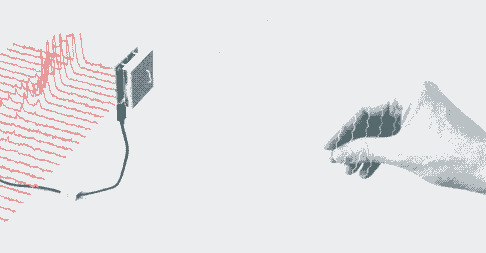

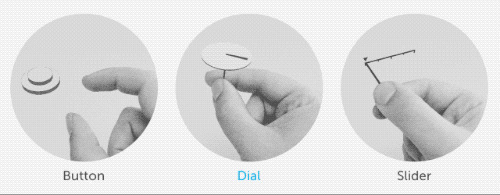

Project Soli

The moving images shown above come from Google's Project Soli. This Google project is already well underway, and was demonstrated for the first time all the way back in May of 2015. It's not just in development, it's been in development for years – just chomping at the bit to come out into the open.

A Soli SDK is available for developers, and the folks behind this project – over at Google ATAP – continue to update their system to this day. The other of the two major projects Google ATAP was working on is Jacquard, and they've just released their Google Levi's Jacquard smart jacket this week.

Now that we're running full speed into the future with fingerprint sensors under touchscreens, augmented reality, and the death of physical buttons, the next step is clear. Next, the touchscreen will be removed altogether. Maybe not this year, but soon!