Google Spills The Pixel 4's Camera Magic

Google's Pixel 4 may have added the second camera it long avoided, but a deep dive into its Portrait Mode shows the company isn't easing off on computational photography. Earlier Pixels relied on post-processing trickery to coax a faux-bokeh shot from a single lens, whereas rivals combined the data from two adjacent cameras.

Google's argument had been that software and a single camera could do just as well as the two cameras that phones like the Galaxy Note and iPhone needed. That all changed with the Pixel 4 and Pixel 4 XL this year, of course. Alongside the regular camera on the rear, there's now a second camera with a 2x telephoto lens.

A second camera means more image data to work with, and Google Research's Neal Wadhwa (Software Engineer) and Yinda Zhang (Research Scientist) have outlined how the team tapped both hardware and software to improve edge recognition, depth estimation, and the realism of the bokeh.

Importantly, it builds on rather than replaces the existing Portrait Mode system. On earlier phones like the Pixel 3, Portrait Mode relied on a Dual-Pixel system: each pixel is split in two, with each half seeing a different half of the main lens' aperture. It's only a tiny difference, but those two perspectives allow the camera app to estimate distance, much in the same way that our eyes work together to judge how near or far something is.
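To make the idea concrete, here is a minimal sketch of how a shift between two viewpoints can be measured: slide one strip of pixel values across the other and keep the offset where they match best, scored by the sum of absolute differences. This is a generic textbook block-matching toy on made-up numbers, not Google's method; the function names `sad` and `estimate_disparity` are hypothetical, and real depth estimation works on 2D patches with sub-pixel precision.

```python
def sad(a, b):
    """Sum of absolute differences between two equal-length sequences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def estimate_disparity(left, right, max_shift):
    """Toy 1D block matching (hypothetical helper, not Google's code).

    Compares the central part of `left` against `right` at every offset in
    [-max_shift, max_shift] and returns the offset with the lowest SAD cost.
    If right[i] == left[i - d], the returned offset is d.
    """
    n = len(left)
    window = left[max_shift:n - max_shift]
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        candidate = right[max_shift + s:n - max_shift + s]
        cost = sad(window, candidate)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


# Made-up intensity profile of an edge, seen from two slightly different viewpoints.
left = [0, 0, 1, 5, 9, 5, 1, 0, 0, 0, 0, 0]
right = [0, 0] + left[:-2]  # the same scene, shifted by 2 pixels

print(estimate_disparity(left, right, max_shift=3))  # prints 2
```

A larger measured shift means a nearer object; the next step is turning that shift into an actual distance.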

Problem is, the two viewpoints in this system are less than 1mm apart, because the half-pixels sit so close together. That tiny baseline produces only a very small shift between the two views, and the shift shrinks further the more distant an object is, which makes depth hard to estimate for faraway subjects.

With the dual cameras on the Pixel 4, though, the viewpoints are 13mm apart. That makes for greater parallax, and thus better distance estimates for objects farther away. The Dual-Pixel data still earns its keep, helping in parts of the frame where one camera's view is occluded. Google then funnels all of that through a trained neural network to work out depth.
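The basic pinhole stereo relationship shows why the wider baseline matters: the shift (disparity) between two views is roughly focal length × baseline ÷ depth, so it grows in proportion to the baseline and shrinks as objects get farther away. The sketch below plugs in hypothetical numbers, a 4.4mm focal length and 1.4µm pixels, which are illustrative assumptions rather than Pixel 4 specifications. It also treats both views as sharing one focal length, glossing over the main/telephoto mismatch, and uses 1mm as an optimistic upper bound for the Dual-Pixel baseline.

```python
def disparity_pixels(focal_mm, baseline_mm, depth_m, pixel_pitch_um):
    """Pinhole stereo: disparity = focal * baseline / depth, in sensor pixels.

    Simplifying assumption: both views are rectified to the same focal length.
    """
    depth_mm = depth_m * 1000.0
    disparity_mm = focal_mm * baseline_mm / depth_mm  # shift on the sensor, in mm
    return disparity_mm / (pixel_pitch_um / 1000.0)   # convert mm to pixels


FOCAL_MM = 4.4        # hypothetical focal length
PIXEL_PITCH_UM = 1.4  # hypothetical pixel size

for depth_m in (1, 3, 10):
    dual_pixel = disparity_pixels(FOCAL_MM, 1.0, depth_m, PIXEL_PITCH_UM)    # <1mm baseline
    dual_camera = disparity_pixels(FOCAL_MM, 13.0, depth_m, PIXEL_PITCH_UM)  # 13mm baseline
    print(f"{depth_m:>3} m: dual-pixel ~{dual_pixel:.2f} px, dual-camera ~{dual_camera:.2f} px")
```

With these assumed numbers, the Dual-Pixel shift for a subject 10m away is well under a pixel, while the 13mm baseline yields 13 times as much parallax at any given depth, which is what makes distant objects easier to place.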

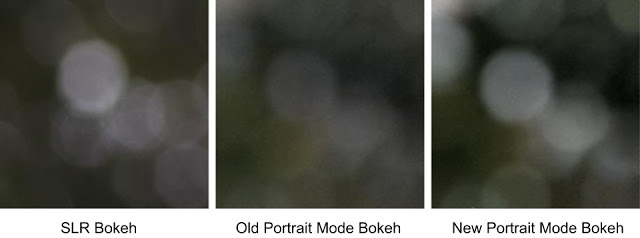

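Cutting out the foreground subject from the background is only half the battle, however. For the shot to look realistic, as though it had been taken with a genuinely shallow depth-of-field lens, the background also has to be blurred convincingly; that out-of-focus rendering is known as bokeh. On the Pixel 4, Google has reordered its pipeline: it now blurs the merged raw HDR+ image first and applies tone mapping afterwards, rather than the other way around. Blurring first keeps bright highlights, such as distant lights, bright and saturated in the bokeh instead of muting them.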
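Why does the order matter? Tone mapping compresses very bright values, so blurring afterwards smears a point light into a dim smudge. Blurring the linear data first instead spreads the light's full energy across a disc that stays bright once tone mapped. The toy sketch below illustrates this on a single row of pixels. The `x / (1 + x)` curve (a simple Reinhard-style operator) and the box blur are stand-ins chosen for illustration; Google's actual tone mapping and bokeh rendering are far more sophisticated.

```python
def reinhard(x):
    """Simple global tone-mapping curve: compresses linear radiance into [0, 1)."""
    return x / (1.0 + x)


def box_blur(signal, radius):
    """1D box blur; near the edges, averages only the samples that exist."""
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - radius), min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out


# Linear (pre-tone-mapping) radiance: dim background with one very bright point light.
scene = [0.1] * 4 + [50.0] + [0.1] * 4

# Old order: tone map, then blur the already-compressed values.
tone_then_blur = box_blur([reinhard(v) for v in scene], radius=2)
# New order: blur the linear values, then tone map the result.
blur_then_tone = [reinhard(v) for v in box_blur(scene, radius=2)]

print(f"tone map, then blur: peak {max(tone_then_blur):.2f}")
print(f"blur, then tone map: peak {max(blur_then_tone):.2f}")
```

Here the tone-map-first row peaks at about 0.27, a faint smear, while the blur-first row forms a five-pixel disc at about 0.91, much closer to the bright bokeh ball a real lens would produce.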

The good news is that, because so much of this happens in software, Google can push improvements like better bokeh to older phones through updates. If you have Google Camera app v7.2 on a Pixel 3 or Pixel 3a, for instance, you get the improved background blur too.