Google Just Offered Its Magical Machine Learning Chips To All

Google is no longer keeping its artificial intelligence chips to itself: it is now offering its Cloud TPUs to users who want smarter processing in the cloud. Dubbed machine learning accelerators, the Cloud TPUs are custom chips of Google's own design, used for everything from recognizing the same people across a host of different photos through to making safe driving inferences from autonomous car data.

In short, they're an attempt to make cloud-based processing smarter, and better able to spot patterns in data. The Cloud TPUs will live alongside, rather than replace, the existing processors that power Google Cloud Platform (GCP), which range from Intel's latest CPUs through to high-end GPUs such as NVIDIA's Tesla V100.

The goal, therefore, isn't to replace all of those processors with Cloud TPUs. Instead, Google intends to offer targeted machine learning capabilities to projects that might traditionally be unable to afford them. Even organizations that already have in-house machine learning infrastructure, Google points out, could still benefit from what its cloud services now offer: with a fleet of Cloud TPUs, for example, multiple variants of a machine learning model could be trained in short order, with the most effective one then deployed internally.

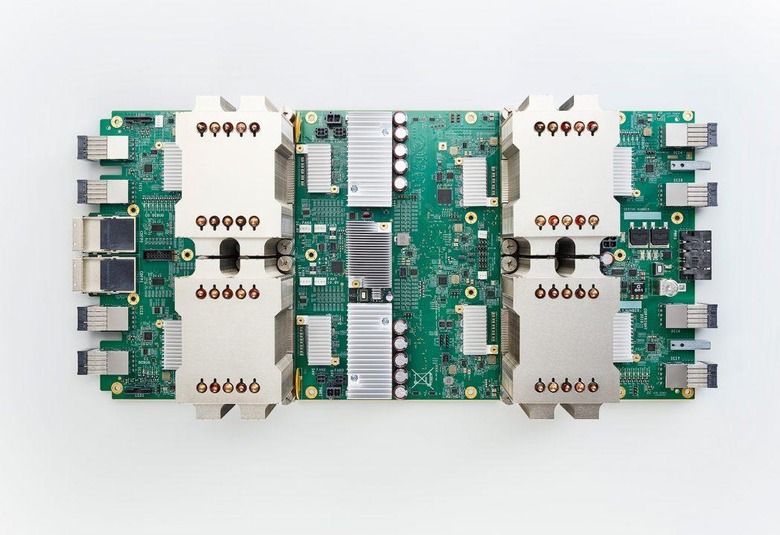

A Cloud TPU uses four custom ASICs, delivering up to 180 teraflops of floating-point performance paired with 64 GB of high-bandwidth memory. Individual Cloud TPUs can be used by projects on their own, or the boards can be connected together into what Google calls "TPU pods" with multi-petaflop performance. Those won't be available to GCP users initially, mind, though Google says they'll come online later in 2018.

Hardware, of course, is only half of the battle. Google is also open-sourcing various image classification, object detection, machine translation, and language modeling tools, which can bring machine learning to a variety of apps and services. If you have a TensorFlow-compatible project you want to try out yourself, Google is open to that too.

Although GCP's new machine learning support is likely to be popular among universities and other projects wanting to get involved in the technology without the upfront outlay, it also has broader applications for larger organizations. Lyft, for example, is using Cloud TPUs in its Level 5 self-driving program, combining navigation-related data from its real-world test fleet with new driverless algorithms. According to Anantha Kancherla, Head of Software for Level 5, "what could normally take days can now take hours."

Google will initially offer Cloud TPUs in limited quantities, billing by the second at a rate of $6.50 per Cloud TPU per hour. Interested organizations and projects must first sign up to request a quota, including an explanation of their machine learning goals, before they can access the specialist chips.
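To put per-second billing at $6.50 an hour into concrete numbers, here is a rough estimate sketch. It assumes charges scale linearly with seconds used, with no minimum charge, and are rounded to the nearest cent; those details are assumptions rather than Google's published billing rules, and `cloud_tpu_cost` is a hypothetical helper, not part of any Google API.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hourly rate quoted in the article, in US dollars per Cloud TPU.
HOURLY_RATE_USD = Decimal("6.50")
SECONDS_PER_HOUR = 3600


def cloud_tpu_cost(seconds: int, num_tpus: int = 1) -> Decimal:
    """Hypothetical cost estimate for per-second Cloud TPU billing.

    Assumes linear per-second billing with no minimum charge,
    rounded to the nearest cent.
    """
    if seconds < 0 or num_tpus < 0:
        raise ValueError("seconds and num_tpus must be non-negative")
    # Prorate the hourly rate down to the number of seconds actually used.
    raw = HOURLY_RATE_USD * num_tpus * seconds / SECONDS_PER_HOUR
    return raw.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # 90 minutes on a single Cloud TPU
    print(cloud_tpu_cost(90 * 60))
    # 10 minutes on four Cloud TPUs
    print(cloud_tpu_cost(10 * 60, num_tpus=4))
```

Under these assumptions, a 90-minute job on one Cloud TPU comes to $9.75, while ten minutes on four of them costs about $4.33. Using `Decimal` rather than floats keeps the cent-level arithmetic exact.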
