Ford's New Silicon Valley Lab Isn't All Pie-In-The-Sky

Self-driving cars are undoubtedly the most attention-grabbing project at Ford's new tech outpost in Palo Alto, but they're not all the team is working on, and other schemes are far closer to helping today's drivers. The Research and Innovation Center is also exploring how digital dashboards can be smarter, how smart home gadgets like Nest can play nicely with your car, and even how a little Project Ara-style modularity could make Fords more future-proof. Read on for three of the more down-to-earth projects underway, all potentially closer to reaching production cars at your nearest Ford dealer.

Nest Integration

Nest's smart thermostat, and the Nest Protect smoke alarm that followed it, always had the potential to be the linchpin of the digital home, and that potential has only grown since Google acquired the startup. For Ford, with the Nest offices literally a block and a half away from the new Palo Alto R&D center, building closer ties between car and home was an obvious strategy.

The result is SYNC 3's Nest integration, a combination of geofencing, car awareness, and preference prediction that builds on the thermostat's own intelligence. Nest already tries to learn your patterns at home, Ford engineer Jamel Seagraves pointed out to me, but figuring out enough to properly train its automatic "Away" mode can take a while.

Ford's solution is to let the smart home know when the car is being used. As soon as the car is started, SYNC 3 tells Nest, and the thermostat can immediately switch off the heating or air-conditioning and start saving money.

On the flip side, when SYNC 3 spots you're near home again, it can notify Nest and have the house suitably warm or cool, ready for your arrival.
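Ford didn't detail how SYNC 3 decides when to message Nest, so the following is only a hypothetical sketch of the two triggers described above: "away" on ignition, and "home" when the car crosses into a radius around the house. The names (`HomeGeofence`, `set_away`, `set_home`) and the 2 km radius are invented for illustration and are not Ford's or Nest's API.

```python
import math
from dataclasses import dataclass
from typing import Optional

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class Location:
    lat: float  # degrees
    lon: float  # degrees


def distance_m(a: Location, b: Location) -> float:
    """Great-circle distance between two points, via the haversine formula."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlam = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


class HomeGeofence:
    """Hypothetical car-side logic deciding when to message the thermostat."""

    def __init__(self, home: Location, radius_m: float = 2_000.0):
        self.home = home
        self.radius_m = radius_m
        self.inside = True  # assume each trip starts at home

    def on_ignition(self) -> str:
        # Car started: the house can drop to its energy-saving "away" setting.
        return "set_away"

    def on_position(self, car: Location) -> Optional[str]:
        now_inside = distance_m(car, self.home) <= self.radius_m
        entered = now_inside and not self.inside
        self.inside = now_inside
        # Fire once, on the outside-to-inside transition, so the house can pre-heat or pre-cool.
        return "set_home" if entered else None
```

Triggering only on the transition, rather than on every position fix inside the radius, stops the car from repeating the same request to the thermostat while it sits in the driveway.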

Geofencing isn't especially new, but Ford's proposed integration goes further than that. Say, for instance, it's unexpectedly snowing and you turn the car's heater up higher than usual, Ford's Carey Feldman suggested. SYNC 3 could alert Nest to that, and the thermostat could raise the temperature at home above its normal level, on the understanding that you'll probably appreciate a little more warmth.
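Feldman's example boils down to mapping "the driver wants more heat than usual" onto "the house should be a bit warmer than usual." The rule below is a hypothetical sketch of that idea; the proportional `gain`, the `max_boost_c` cap, and the function name are all assumptions, not anything Ford or Nest described.

```python
def suggested_home_setpoint(
    usual_home_c: float,     # the home schedule's normal target temperature
    usual_cabin_c: float,    # the cabin temperature this driver usually picks
    current_cabin_c: float,  # what the driver has set the heater to today
    gain: float = 0.5,       # assumed: raise the house by half the extra cabin heat
    max_boost_c: float = 2.0,  # assumed cap so one hot drive can't overheat the house
) -> float:
    """Hypothetical rule: nudge the home setpoint up when the heater runs hotter than usual."""
    extra = current_cabin_c - usual_cabin_c
    if extra <= 0:
        # Not asking for more warmth than normal; leave the home schedule alone.
        return usual_home_c
    return usual_home_c + min(gain * extra, max_boost_c)
```

Capping the boost is a design choice of this sketch: a single unusually hot drive is a weak signal, so it nudges the house rather than overriding the thermostat's own schedule.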

There's also Nest Protect support, with alerts from the smoke alarm popping up on the infotainment screen in the center console. While the Nest app can do the same on your phone, that means taking your eyes off the road and fishing your iPhone or Android handset out of your pocket or bag; the SYNC 3 display is arguably more convenient, and also offers the option to call 911 or a trusted emergency contact.

Local Speech Recognition

Speech recognition is increasingly popular among car manufacturers, often controlling everything from multimedia through hands-free calls and navigation to core vehicle settings. Unfortunately, the dirty little secret is that it's often inaccurate, sluggish, and confusing.

Ford is working with Carnegie Mellon researchers on a better system, and while the cloud may be fashionable, their new approach bypasses it altogether.

Most current speech recognition systems that support anything more sophisticated than a few basic words or phrases rely on remote processing. The audio is sent over a wireless connection to servers in the cloud, which crunch the commands and then report the driver's intention back to the infotainment system.

Problem is, that introduces latency – the speech has to shuttle all the way to the remote data center, and then the processed commands make the return journey back to the car – and if the data connection slows down, so does the system responsiveness.
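That round trip can be put as a back-of-the-envelope formula. The function below is a simplified model of our own, not Ford's or Carnegie Mellon's, and the inputs used with it are arbitrary placeholders rather than measurements; it simply shows why a slower uplink drags down the whole cloud path, while an on-board engine never touches the network.

```python
def cloud_latency_ms(audio_bytes: int, uplink_kbps: float, rtt_ms: float, server_ms: float) -> float:
    """Rough cloud path: upload the audio, one network round trip, then server compute.

    Simplification: real recognizers usually stream audio while you are still talking,
    which hides some upload time; this model treats the upload as happening afterwards.
    """
    upload_ms = audio_bytes * 8 / uplink_kbps  # bits / (kbit/s) works out to milliseconds
    return upload_ms + rtt_ms + server_ms


# Placeholder inputs, purely for illustration (not measurements).
AUDIO_BYTES = 16_000
for uplink_kbps in (1_000, 250, 64):
    total = cloud_latency_ms(AUDIO_BYTES, uplink_kbps, rtt_ms=100, server_ms=50)
    print(f"{uplink_kbps:>5} kbit/s uplink -> {total:.0f} ms")
```

Only the upload and round-trip terms depend on the connection, which is exactly the part a local engine eliminates.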

Ford's solution is GPU-accelerated processing, the kind more commonly found in graphics workstations or even supercomputers. Right now, the team is relying on NVIDIA's Tegra K1 chipset to run the voice engine; according to Carnegie Mellon's Ian Lane, even the same full engine normally run in the cloud only uses around 30 percent of the local processor.

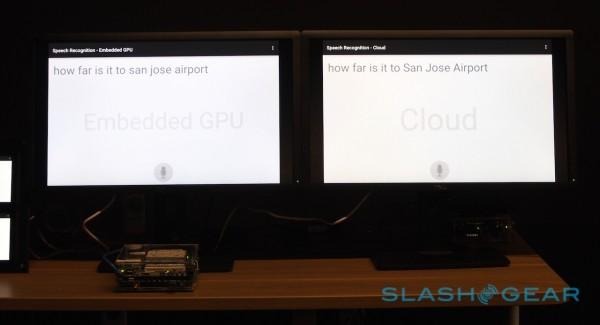

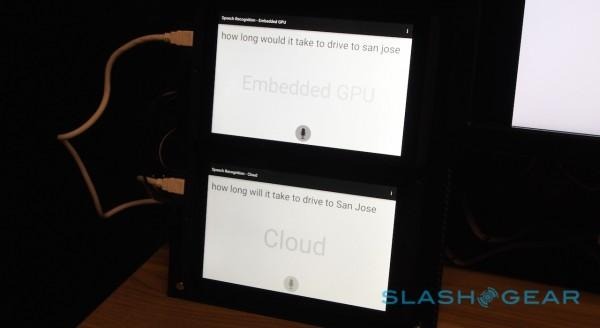

The result is lower latency and no reliance on an active data connection. Lane's demonstration showed that, even against a fast connection, the local system had a slight edge; that advantage should only widen in real-world conditions, where connections are often slower and less reliable.

The leftover Tegra grunt isn't going to go to waste, either. While different types of noise-canceling microphones and audio DSP are still being explored, Lane also plans to add cameras to the dashboard. They could track when the driver is looking at the infotainment system, and even when their lips are moving, comparing that movement to the audio picked up by the microphone array.
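Lane didn't say how the lip-versus-audio comparison would work. One simple stand-in, chosen here purely for illustration, is to correlate a per-frame "mouth opening" signal from the camera with per-frame audio energy from the microphones: if they rise and fall together, the driver is probably the one speaking. The signal names and the 0.6 threshold are hypothetical.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length signals; 0.0 if either is flat."""
    if len(x) != len(y) or not x:
        raise ValueError("signals must be non-empty and the same length")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def driver_is_speaking(
    mouth_opening: Sequence[float],  # hypothetical per-frame lip gap from a dashboard camera
    audio_energy: Sequence[float],   # per-frame loudness from the microphone array, same frame rate
    looking_at_screen: bool,
    threshold: float = 0.6,          # arbitrary cut-off for illustration
) -> bool:
    # Accept a command only if the driver is engaged and their lips move in step with the sound.
    return looking_at_screen and pearson(mouth_opening, audio_energy) >= threshold
```

A passenger talking while the driver's mouth is still would produce audio that doesn't track the driver's lip movement, so the correlation stays low and the command can be ignored.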

Similarly, by monitoring whether the windows are open or closed, what setting the HVAC system is on, and how many other people are in the vehicle, the car could automatically switch between different acoustic models to suit the conditions. None of this, Lane points out, could practically be done by a cloud processing system.
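As a hypothetical sketch of that switching, the car could read its own cabin state and pick one of several pre-trained acoustic models. The model names, the fan-level threshold, and the priority order (loudest noise source first) are all invented for illustration; the point is only that every input comes from the car's own sensors, which a remote server wouldn't see.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CabinState:
    windows_open: bool
    hvac_fan_level: int  # 0 = off; higher = louder (hypothetical scale)
    occupants: int       # including the driver


def pick_acoustic_model(state: CabinState) -> str:
    """Hypothetical rule-based choice between pre-trained acoustic models."""
    if state.windows_open:
        return "open_window"   # wind and road noise dominate
    if state.hvac_fan_level >= 3:
        return "high_fan"      # steady broadband fan noise
    if state.occupants > 1:
        return "multi_speaker"  # other voices in the cabin
    return "quiet_cabin"
```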

Right now, the local engine doesn't update, but a system that polls for the latest voice processing data every day or week and does a periodic OTA update is also on the roadmap. Lane's team is currently out running network benchmarks in different locations, across different carriers, and at different times of day, so as to build a real-world model that can be used to simulate congestion.
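The planned update cycle comes down to two checks: has a full polling interval passed, and is the data on offer newer than what's installed? The sketch below assumes a simple (major, minor, patch) version scheme and a weekly interval; neither detail comes from Lane's team.

```python
from datetime import datetime, timedelta
from typing import Tuple

Version = Tuple[int, int, int]  # (major, minor, patch): a hypothetical versioning scheme


def poll_due(last_check: datetime, now: datetime, interval: timedelta = timedelta(days=7)) -> bool:
    """True once a full polling interval (a day or a week, say) has passed."""
    return now - last_check >= interval


def should_install(installed: Version, offered: Version) -> bool:
    """Install only strictly newer voice data; tuples compare field by field."""
    return offered > installed
```

Comparing versions as tuples rather than strings matters: as text, "1.10.0" sorts before "1.9.3", but `(1, 10, 0) > (1, 9, 3)` is correctly true.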

Modular Upgrades

The issue of aging navigation data has demonstrated just how frustrating it can be when your car's systems are outdated, and it's only going to get worse as infotainment tech advances. Once upon a time, you could pull out the standard-sized radio unit and slot in an upgraded one, but the custom designs and highly integrated systems of modern vehicles make that impractical or even impossible.

Ford's solution is a modular infotainment system, in which the core components (processor, memory, storage, and custom sensors) are separated from other parts such as the touchscreen and buttons. Similar in concept to Samsung's Evolution Kit for its TVs, a slot-in processing block that brings new features to older sets, Ford envisages periodic upgrades that go beyond what software updates alone can deliver.

The proof of concept has been made in collaboration with CloudCar, which has developed a cloud-centric multimedia and navigation interface on top of Android. In Ford's demo car, the driver could switch between the old SYNC system and CloudCar's justDrive.

In practice, you might not want two interfaces to choose between; however, the demo showed how the same control hardware – including the buttons on the wheel and the speech recognition system – could be used with different "brains" behind the scenes.
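In software terms, the idea is a fixed set of controls talking to a swappable module through a stable interface. The sketch below is a hypothetical illustration of that separation, not the architecture of SYNC, CloudCar, or justDrive; the `Brain` interface and both implementations are invented.

```python
from typing import Protocol


class Brain(Protocol):
    """What any plug-in compute module would have to implement (hypothetical interface)."""

    def on_button(self, button: str) -> str: ...
    def on_voice(self, utterance: str) -> str: ...


class LegacyBrain:
    def on_button(self, button: str) -> str:
        return f"legacy: {button}"

    def on_voice(self, utterance: str) -> str:
        return f"legacy heard: {utterance}"


class UpgradedBrain:
    def on_button(self, button: str) -> str:
        return f"upgraded: {button}"

    def on_voice(self, utterance: str) -> str:
        return f"upgraded heard: {utterance}"


class ControlHub:
    """The fixed hardware: wheel buttons and microphones, which stay in the car."""

    def __init__(self, brain: Brain):
        self.brain = brain

    def swap(self, brain: Brain) -> None:
        # The dealer-fitted upgrade: replace the module, keep the controls.
        self.brain = brain

    def press(self, button: str) -> str:
        return self.brain.on_button(button)

    def speak(self, utterance: str) -> str:
        return self.brain.on_voice(utterance)
```

Because `ControlHub` only depends on the interface, a newer module can be dropped in without touching the wheel buttons or microphones, which is the property the demo car was showing off.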

So when can we have all this?

All three projects are probably closer to market than fully autonomous vehicles (though Ford already offers semi-autonomous tech, like automated parking, in its production cars), but each of the teams was careful not to over-promise.

Local speech processing with camera lip-tracking is likely to be practical "in a couple of years," Lane told me, though the others declined to give any specific timescales.

Nonetheless, it's not hard to see Nest integration becoming practical, and while modular infotainment systems would still require a trip to the dealer to swap them out, that's a whole lot better than being left with the tech from the day before yesterday.