Facebook's "Echo Chamber" Is Your Fault

Far from an echo chamber of reinforcing beliefs, your Facebook newsfeed is actually a hotbed of controversy: if it's not, Facebook argues, you've only yourself to blame. Much has been made of the ever-evolving algorithms that control which stories you see when you log into Facebook, including accusations that users are being fed a diet of shared articles that only ever support rather than challenge their pre-existing opinions. Instead, the social site's own research indicates, there's already a fair chance contrasting content is being served up; you just might be too overloaded to look at it.

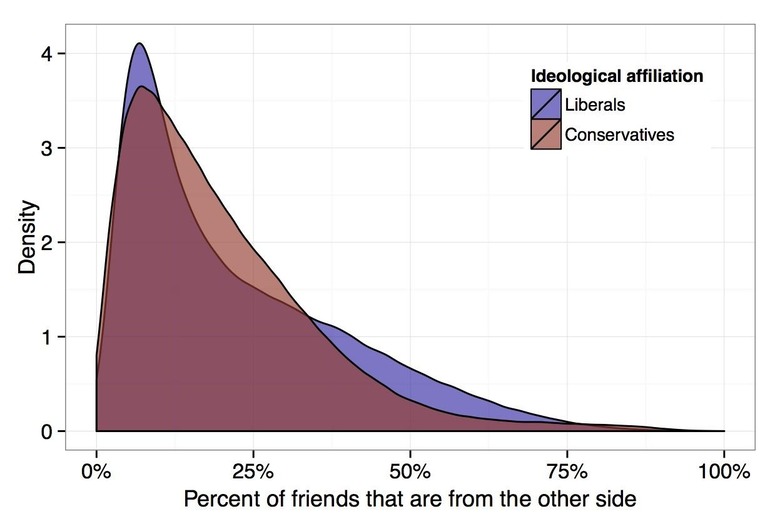

According to Facebook's data, gathered in the six months between July 2014 and January 2015, on average 23 percent of a user's friends claim an opposing political ideology.

Facebook's research team then looked at what news articles were being shared, and classified those articles by whether they skewed along ideological lines. It found that 28.5 percent of what people see in their newsfeed cuts across their ideological lines, and in fact almost a quarter of what people click on fits that criterion too.

While the research did suggest that some clustering of those with similar opinions and beliefs takes place, that's not to say there's no exposure to contrasting views. The question is how far down the page you scroll.

"News Feed shows you all of the content shared by your friends, but the most relevant content is shown first," Facebook's team of Eytan Bakshy, Solomon Messing, and Lada Adamic writes. "Exactly what stories people click on depends on how often they use Facebook, how far down they scroll in the News Feed, and the choices they make about what to read."

So, depending on how long you spend scrolling, you're probably going to be less exposed to the articles that Facebook believes you're less likely to click on. Crunching the numbers, the site calculated users are 5-8 percent less likely to see countervailing articles shared by their friends, depending on their political identification.

In fact, liberals were less likely to see articles that challenge their beliefs than conservatives, Facebook's team says.

"The composition of our social networks is the most important factor affecting the mix of content encountered on social media with individual choice also playing a large role," is Facebook's conclusion, arguing that in fact newsfeed's ranking algorithm is a relatively small contributor to what you're exposed to.

Of course, if you're really interested in seeing all the election, religion, and other arguments going on, you can just click over to the "Most Recent" view and see everything.

SOURCE Facebook