Facebook Warns Fake-News Spreaders But Won't Go Nuclear On False Info

After two elections' worth of fake news and almost 18 months of pandemic falsehoods and antivax lies, Facebook is finally taking a stance on Pages and users that share false information. The long-overdue rule change means individuals who repeatedly distribute debunked content could have their shares buried, though Facebook will stop short of actually removing that content altogether.

It's an extension of the social network's fact-checking policies, which have met with considerable skepticism since the company launched them back in 2016. Facebook, with its reach among the American public and beyond, has become a prime battleground for fake viral content and partisan faux-medical advice.

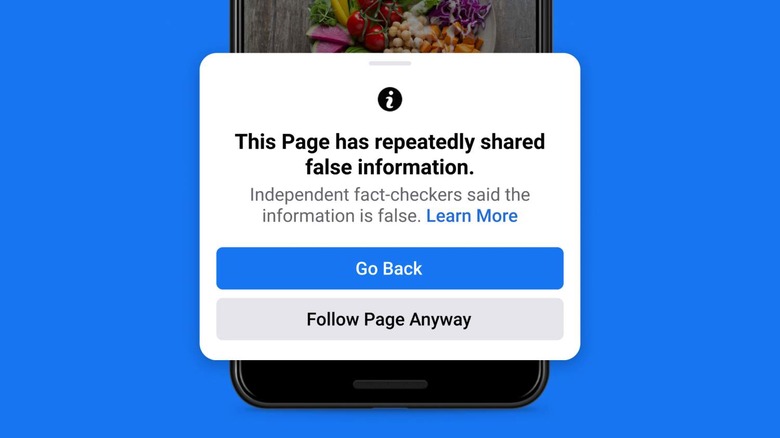

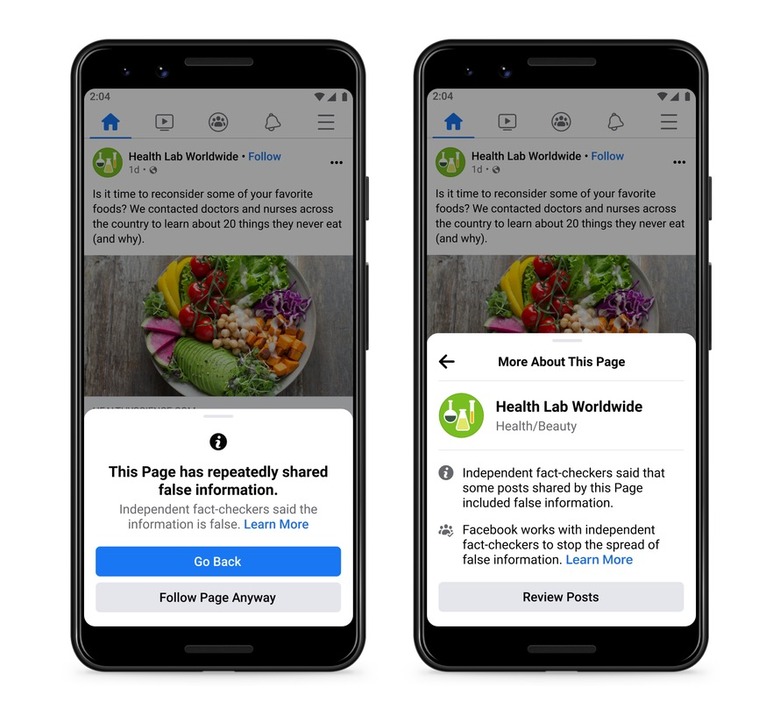

Now, Facebook says, it will show new warnings when people go to like a Page that its independent fact-checkers have repeatedly found to share false information. For example, that could include the message that "independent fact-checkers said that some posts shared by this page included false information." Facebook will also include links to explain what its fact-checking team actually does.

"This will help people make an informed decision about whether they want to follow the Page," the company said today. All the same, you won't be prevented from actually following that Page if you choose to.

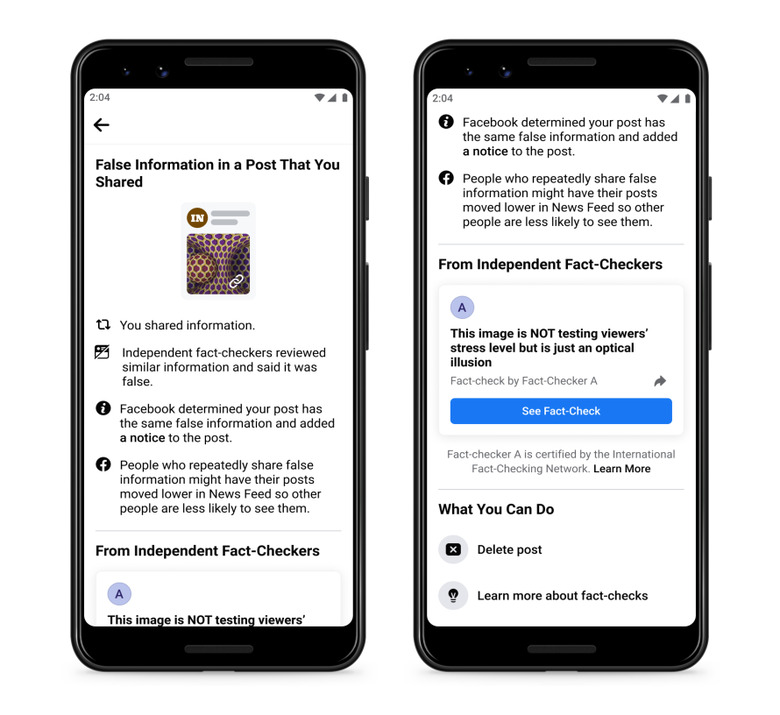

For individuals, Facebook says it's expanding the same sort of rules and penalties already applied to Pages, Groups, Instagram accounts, and domains sharing misinformation. "Starting today, we will reduce the distribution of all posts in News Feed from an individual's Facebook account if they repeatedly share content that has been rated by one of our fact-checking partners," Facebook explains. "We already reduce a single post's reach in News Feed if it has been debunked."

Even so, it won't be preventing that content from being shared. Instead, Facebook is adding new notifications that will retroactively tell users when content they've previously shared has since been debunked. Users will see the fact-checker's article to that effect and be offered a prompt to share it, in addition to the option to delete the original post.

"It also includes a notice that people who repeatedly share false information may have their posts moved lower in News Feed so other people are less likely to see them," Facebook adds.

How effective all this is depends, of course, on how open people are to believing the debunking. There's no denying that using Facebook to willfully spread misinformation has been commonplace, and that, combined with a general mistrust of "independent fact-checkers," may mean that users simply ignore the warnings.