Facebook Reveals Its Smart Glasses' Nerve-Tracking Wristband Tech

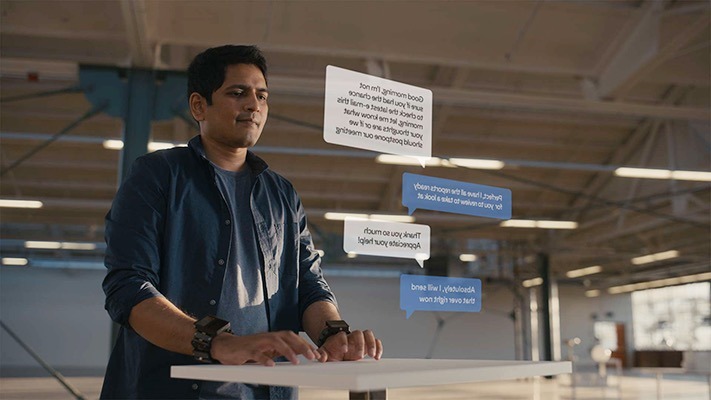

Facebook has revealed another step on its path to augmented reality smart glasses: a wrist-based controller that combines AI with nerve-tracking EMG to leapfrog traditional input systems. The eventual goal, Facebook says, is a super-smart artificial intelligence that instinctively intuits what you might want from your high-tech glasses; in the shorter term, though, this wrist-based input controller is far more practical.

Earlier this month, Facebook outlined its roughly 10-year vision for smart glasses and augmented reality. Beyond being comfortable enough for all-day wear and relying on technological advances in transparent displays, the glasses would also feature a new, proactive AI that would effectively act as your co-pilot and personal assistant.

"The AI will make deep inferences about what information you might need or things you might want to do in various contexts, based on an understanding of you and your surroundings, and will present you with a tailored set of choices. The input will make selecting a choice effortless — using it will be as easy as clicking a virtual, always-available button through a slight movement of your finger," Facebook explains. "But this system is many years off."

In the shorter term, then, Facebook is taking a different approach. Its Facebook Reality Labs (FRL) Research team has been exploring how to combine a less all-encompassing AI with better ways to track and respond to human input, going beyond keyboards, trackpads, and voice commands. A "usable but limited contextualized AI" could then fill in the blanks.

The resulting system is worn like a watch, but uses electromyography (EMG) to track the electrical signals that motor nerves carry through the wrist. "The signals through the wrist are so clear that EMG can understand finger motion of just a millimeter," Facebook explains. "That means input can be effortless. Ultimately, it may even be possible to sense just the intention to move a finger."

Such a system could track the messages the brain sends to the fingers when you intend to type, for example, or swipe across a touchscreen, and pass those intentions on to the AI. Initially, FRL says, it's focusing on just one or two such interactions, the equivalent of tapping a button. Finger clicks and pinching-and-releasing the thumb and forefinger are the starting point, though the team eventually plans to expand that to full control over virtual UIs and objects.
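As a rough illustration of the simplest form of "click" detection, here is a minimal sketch assuming a single-channel, raw EMG signal and a textbook root-mean-square (RMS) envelope with a fixed threshold. This is not FRL's decoder, which Facebook doesn't detail; a real wristband would use many electrodes and learned models. The function names, window size, and threshold are hypothetical.

```python
import math
from typing import List


def rms_envelope(samples: List[float], window: int) -> List[float]:
    """Trailing-window RMS envelope of a raw single-channel EMG signal (assumed input)."""
    envelope = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)
        chunk = samples[start : i + 1]
        envelope.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    return envelope


def detect_clicks(samples: List[float], window: int = 5, threshold: float = 0.5) -> List[int]:
    """Return sample indices where the envelope rises through the threshold.

    Each rising edge counts as one hypothetical 'click' (e.g. a pinch); the
    envelope must drop back below the threshold before another can register.
    """
    envelope = rms_envelope(samples, window)
    clicks = []
    above = False
    for i, level in enumerate(envelope):
        if level >= threshold and not above:
            clicks.append(i)
            above = True
        elif level < threshold:
            above = False
    return clicks


# Synthetic signal: silence, a burst of muscle activity, silence, another burst.
signal = [0.0] * 50 + [1.0, -1.0] * 10 + [0.0] * 50 + [1.0, -1.0] * 10 + [0.0] * 30
print(detect_clicks(signal))  # [51, 121]
```

The "must fall below before re-triggering" rule is a simple way to keep one sustained pinch from registering as many clicks.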

Another possibility is typing on a virtual keyboard, with the EMG wristband monitoring each finger movement and working out which imaginary key you most likely meant to tap. The AI, meanwhile, would contribute its own predictions and customizations to improve accuracy.
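One common way to merge two such sources of evidence is Bayes' rule: multiply how well the finger movement matches each key by how likely each key is given what you've already typed, then normalize. The sketch below assumes both inputs arrive as per-key probabilities; the function name and all numbers are hypothetical, and nothing here is FRL's published method.

```python
from typing import Dict


def decode_key(emg_likelihood: Dict[str, float], prior: Dict[str, float]) -> Dict[str, float]:
    """Combine P(EMG signal | key) with P(key | typed context) via Bayes' rule.

    Returns a normalized posterior over the keys the EMG decoder considered.
    Keys missing from the prior get probability 0.
    """
    scores = {key: p * prior.get(key, 0.0) for key, p in emg_likelihood.items()}
    total = sum(scores.values())
    if total == 0.0:
        raise ValueError("no candidate key has nonzero probability")
    return {key: s / total for key, s in scores.items()}


# The finger movement alone slightly favors "r", but after typing "th"
# a hypothetical language-model prior strongly favors "e".
emg = {"r": 0.5, "e": 0.4, "w": 0.1}
prior_after_th = {"e": 0.7, "r": 0.2, "w": 0.1}
posterior = decode_key(emg, prior_after_th)
best = max(posterior, key=posterior.get)
print(best)  # e
```

This is how the AI's predictions could "improve accuracy": an ambiguous movement gets resolved by context rather than by the sensor alone.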

The short-term goal is what's being described as an "intelligent click": a limited AI that is aware of environmental and situational context, predicts the action you're most likely to want, and presents that suggestion for you to confirm with a virtual, EMG-powered click.

"Perhaps you head outside for a jog and, based on your past behavior, the system thinks you're most likely to want to listen to your running playlist," Tanya Jonker, FRL Research Science Manager, suggests. "It then presents that option to you on the display: 'Play running playlist?' That's the adaptive interface at work."
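To make the "intelligent click" loop concrete, here is a minimal sketch assuming the context has already been reduced to a label such as "jogging" and that "past behavior" is just a count of what you chose in each context. The class, method names, and confidence cutoff are hypothetical; a real system would infer context from sensors and use far richer models.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Optional


class IntelligentClick:
    """Suggest the most frequent past action for a context; a click confirms it."""

    def __init__(self, min_confidence: float = 0.5) -> None:
        self.history: DefaultDict[str, Counter] = defaultdict(Counter)
        self.min_confidence = min_confidence  # hypothetical cutoff for showing a prompt

    def record(self, context: str, action: str) -> None:
        self.history[context][action] += 1

    def suggest(self, context: str) -> Optional[str]:
        counts = self.history.get(context)  # .get avoids creating empty entries
        if not counts:
            return None
        action, n = counts.most_common(1)[0]
        if n / sum(counts.values()) < self.min_confidence:
            return None  # not confident enough to interrupt the user
        return action

    def confirm(self, context: str, clicked: bool) -> Optional[str]:
        """Return the action to run if the user clicked to accept the suggestion."""
        action = self.suggest(context)
        if action is not None and clicked:
            self.record(context, action)  # accepted suggestions reinforce the habit
            return action
        return None


ic = IntelligentClick()
for _ in range(3):
    ic.record("jogging", "play running playlist")
ic.record("jogging", "open podcast")
print(ic.suggest("jogging"))  # play running playlist
```

Returning `None` below the cutoff reflects the adaptive-interface idea: the system only offers a single-click choice when it has a reasonably confident guess.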

The wristband would also incorporate haptics, giving a physical sense of feedback from a virtual interaction or object. "You might feel a series of vibrations and pulses to alert you when you received an email marked 'urgent,' while a normal email might have a single pulse or no haptic feedback at all, depending on your preferences," Facebook says. "When a phone call comes in, a custom piece of haptic feedback on the wrist could let you know who's calling."
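The notification scheme Facebook describes amounts to a lookup from event type (and, for calls, caller) to a vibration pattern, with user preferences taking priority. The sketch below assumes patterns are encoded as lists of (on-time, pause) pairs in milliseconds; that encoding, the event names, and the default patterns are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical encoding: each pulse is (vibration on-time ms, pause after it ms).
Pulse = Tuple[int, int]

DEFAULT_PATTERNS: Dict[str, List[Pulse]] = {
    "urgent_email": [(120, 80)] * 4,  # a series of pulses
    "email": [(60, 0)],               # a single pulse
}


@dataclass
class HapticPreferences:
    overrides: Dict[str, List[Pulse]] = field(default_factory=dict)  # [] means "no haptics"
    caller_patterns: Dict[str, List[Pulse]] = field(default_factory=dict)


def pattern_for(event: str, prefs: HapticPreferences, caller: str = "") -> List[Pulse]:
    """Pick a pattern: per-caller ringtone first, then user overrides, then defaults."""
    if event == "call" and caller in prefs.caller_patterns:
        return prefs.caller_patterns[caller]
    if event in prefs.overrides:
        return prefs.overrides[event]
    return DEFAULT_PATTERNS.get(event, [])


prefs = HapticPreferences(
    overrides={"email": []},  # user opts out of haptics for normal email
    caller_patterns={"Alice": [(200, 100), (200, 0)]},
)
print(pattern_for("call", prefs, caller="Alice"))  # [(200, 100), (200, 0)]
```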

AR games, meanwhile, could use haptics to convey the feeling of using a virtual bow and arrow, or another weapon or tool. "Haptic emojis" could map emotional emojis to distinct haptic patterns. FRL has developed a special wristband it calls Bellowband, named for the eight pneumatic chambers, or bellows, built into it. These can be precisely controlled to deliver pressure and vibration to the wearer's wrist. A second prototype, dubbed Tasbi (Tactile and Squeeze Bracelet Interface), uses six vibrotactile actuators along with its own wrist-squeeze mechanism.

The end goal is a wearable computer that feels collaborative and useful, rather than omnipresent and demanding. Eventually, FRL predicts, a combination of smart haptics, virtual displays, and non-traditional input and control methods will allow many interactions to become almost unconscious, and less distracting than using today's smartphones or laptops. At the same time, FRL says it intends to preserve "meaningful boundaries" between users and their devices.

Any production version of these prototypes is still some way off, FRL concedes. Even so, it insists that EMG-based input is relatively near-term technology, though exactly what that means for a product roadmap remains unclear.