Facebook Mood Study: The Facts

Earlier this month it emerged that the Facebook, Inc. Core Data Science Team had conducted a study on Facebook users. According to the study, a total of 689,003 Facebook users were "exposed to emotional expressions in their News Feed" to test whether "emotional contagion" can occur without direct interaction between people. It turns out that it is indeed possible to change people's emotions without nonverbal cues.

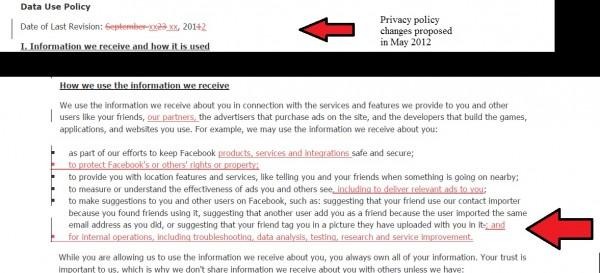

The problem some people have with this study is that it was done without user consent. Consent is in question because Facebook relies on its data policy, which tells users that their data may be used for "data analysis, testing, research, and service improvement."

According to Forbes writer Kashmir Hill, that passage in Facebook's Data Policy wasn't added until 4 months after Facebook conducted the study. The resulting research paper was published about two and a half years after the study took place:

2012, January 11-18th : Study Conducted

2013, October 23rd : Paper received for review

2014, March 25th : Paper approved

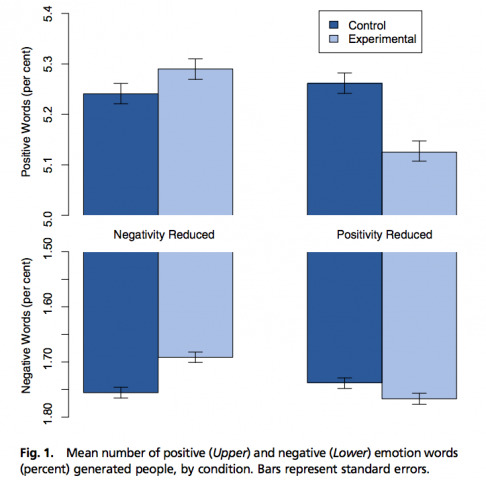

You can access the study through PNAS as a PDF file. The study involved finding thousands of Facebook users who used negative words and thousands more who used positive words. Once these users were found, their News Feed algorithms were modified to show posts containing mostly positive or mostly negative words.

The results showed that negative content breeds negative responses, and positive content breeds positive responses. Facebook researcher and lead author of the study Adam D. I. Kramer made a statement on his Facebook page, which included the following:

"While we've always considered what research we do carefully, we (not just me, several other researchers at Facebook) have been working on improving our internal review practices." Kramer continued, "The experiment in question was run in early 2012, and we have come a long way since then. Those review practices will also incorporate what we've learned from the reaction to this paper."

Facebook will almost certainly face no legal action as a result of this study. This is because, regardless of the published study, it is commonplace for a website to manipulate the content it shows in order to gauge user interaction or interest. The paper itself suggests as much:

"Which content is shown or omitted in the News Feed is determined via a ranking algorithm that Facebook continually develops and tests in the interest of showing viewers the content they will find most relevant and engaging. One such test is reported in this study: A test of whether posts with emotional content are more engaging."

If you have a Tumblr, a Twitter, or a Facebook page and you've ever posted content that you hoped would encourage an action or an emotion, succeeded or failed, and then tried something else, you've run a psychological experiment yourself. Kramer had to respond only because of the scale of the experiment, and it will all quite likely fade from memory in short order.