Facebook Develops A Method Of Detecting And Attributing Deepfakes

Deepfakes have been around for a while now, but recently they've become so realistic that it's hard to tell a deepfake from a legitimate video. For those who might be unfamiliar, a deepfake takes the face and voice of a famous person and creates a video of that person saying or doing things they've never actually done. For many, deepfakes are most concerning during elections, when they can sway voters by making it appear that a candidate has done or said something they never actually did.

Facebook has announced that it has worked with researchers at Michigan State University (MSU) to develop a method of detecting and attributing deepfakes. Facebook says the new technique relies on reverse engineering, which works backward from a single AI-generated image to discover the generative model used to produce it. Until now, much of the work on deepfakes has focused on detection, which determines whether an image is genuine or manufactured.

Beyond detecting deepfakes, Facebook says researchers can also perform image attribution, which determines which particular generative model was used to produce a deepfake. The limiting factor in image attribution is that it can only recognize models that were seen during training. Most deepfakes are created using models that weren't, so they are simply flagged as having been created by an unknown model.
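To make that limitation concrete, here is a minimal Python sketch of attribution against a fixed set of known models. It is not Facebook's or MSU's code. The fingerprint vectors, the model names `model_a` and `model_b`, the `attribute` helper, and the 0.9 similarity threshold are all hypothetical and chosen only for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def attribute(fingerprint, known_models, threshold=0.9):
    """Return the best-matching known model, or "unknown" if nothing is close enough."""
    best_name, best_score = "unknown", -1.0
    for name, reference in known_models.items():
        score = cosine(fingerprint, reference)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else "unknown"

# Hypothetical reference fingerprints for two models seen during training.
KNOWN = {"model_a": [1.0, 0.0, 0.0], "model_b": [0.0, 1.0, 0.0]}

print(attribute([0.9, 0.1, 0.0], KNOWN))  # close to model_a
print(attribute([0.0, 0.1, 0.9], KNOWN))  # matches nothing seen in training
```

The second image comes from a model outside the known set, so all an approach like this can say is "unknown". It reveals nothing further about the model that made the image.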

Facebook and MSU researchers have taken image attribution further by helping to deduce information about a particular generative model based on the deepfakes it has produced. The research marks the first time it's been possible to identify properties of the model used to create a deepfake without having prior knowledge of any specific model.

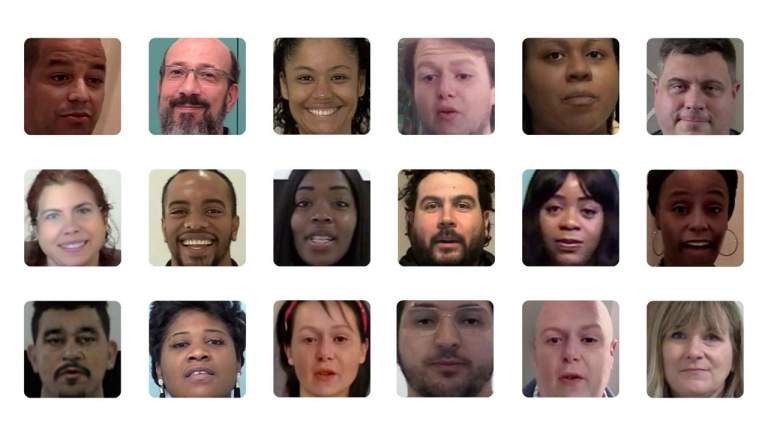

The new model parsing technique allows researchers to obtain more information about the model used to create a deepfake, which is particularly useful in real-world settings. Often the only information that researchers working to detect deepfakes have is the deepfake itself. The ability to detect deepfakes generated from the same AI model is useful for uncovering instances of coordinated disinformation or malicious attacks that rely on deepfakes. The system starts by running the deepfake image through a fingerprint estimation network, uncovering details left behind by the generative model.

Those fingerprints are unique patterns left on images created by a generative model that can be used to identify where the image came from. The team put together a fake image data set with 100,000 synthetic images generated from 100 publicly available generative models. Results from testing showed that the new approach performs better than past systems, and the team was able to map the fingerprint back to the original image content.
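For readers curious what a "fingerprint" can look like in practice, the sketch below uses a much simpler, classical stand-in: it subtracts a blurred copy of each image to keep only fine-grained residue, then averages that residue across several images from the same source. This is not the learned fingerprint estimation network described by Facebook and MSU. The function names and the 3x3 box blur are assumptions made for illustration.

```python
def box_blur(img):
    """3x3 mean filter over a 2D list of floats; edges use the pixels that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out

def residual(img):
    """High-frequency residue: the image minus its blurred version."""
    blurred = box_blur(img)
    return [[p - b for p, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]

def estimate_fingerprint(images):
    """Average the residuals of same-sized images assumed to share one source."""
    residuals = [residual(im) for im in images]
    h, w = len(residuals[0]), len(residuals[0][0])
    return [[sum(r[y][x] for r in residuals) / len(residuals)
             for x in range(w)]
            for y in range(h)]

# A tiny grayscale "image" with a single bright pixel in the middle.
spike = [[0.0, 0.0, 0.0],
         [0.0, 9.0, 0.0],
         [0.0, 0.0, 0.0]]
print(estimate_fingerprint([spike, spike])[1][1])
```

Averaging over many images tends to cancel out the scene content, which differs from image to image, while any pattern the source leaves in every image remains.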