Facebook's Fate Is Skepticism, Even When It's Innocent

A new leak about how Facebook uses AI to predict which users are most susceptible to advertising has thrown trust in the social network back into the spotlight. The company has not been shy about discussing its work on artificial intelligence, which it uses in numerous ways to filter content toward what it believes its users will be most interested in, and what will keep them coming back for more.

However, the leak also raises the question of whether trust in Facebook has bottomed out, something that could prove more damaging to its business than any fine levied by the FTC or other regulators. Even with its newest updates to the site's privacy policies and its tougher requirements for third-party app makers, it's unclear whether Facebook can regain user trust.

According to a confidential document obtained by The Intercept, Facebook has been using its artificial intelligence engine, FBLearner Flow, to "predict future behavior" of the site's members. The capability is pitched as a way for advertisers to more accurately target the potential customers most amenable to their ads.

For example, a car company could ask Facebook to identify users who are likely to replace their vehicle in the near future. This "loyalty prediction" also takes into account the chance that a user will switch brands. The theory, as Facebook explains it, is that with a little well-aimed promotion, that user could be persuaded to jump to a competitor.

It's not hard to see why this might concern privacy advocates. The Intercept points to Facebook's public outrage over the recent Cambridge Analytica situation, in which the political consultancy is accused of using data on as many as 87 million users after it was extracted through an overly permissive, and since altered, API for third-party apps. Facebook's angst, the site argues, was about the deception involved, not about Cambridge Analytica's use of profiling techniques that may come close to those the social network itself employs.

On the other hand, you could make a convincing argument that this is par for the course in business. What Facebook calls "loyalty prediction" is really churn estimation: forecasting the rate at which customers stop buying from or subscribing to a service over a given accounting period.

The problem Facebook faces, though, is that none of its tools, services, or products will ever be looked at in isolation again. After the Cambridge Analytica debacle, its mobile apps' overly greedy collection of contacts and chat history, and a hundred other small controversies adding up to a death by a thousand cuts, the social network has squandered whatever goodwill it earned by letting you keep up with family and friends across the globe. The assumption of trust is gone.

Certainly, Facebook's trove of demographic data is a real reason for concern. With two billion users, the information its prediction engine can crunch through is probably more comprehensive than any other company's. While that data may be, as Facebook's user-protection promises claim, "aggregated and anonymized" for the sake of individual privacy, that presumably affects only the access that third parties like advertisers get.

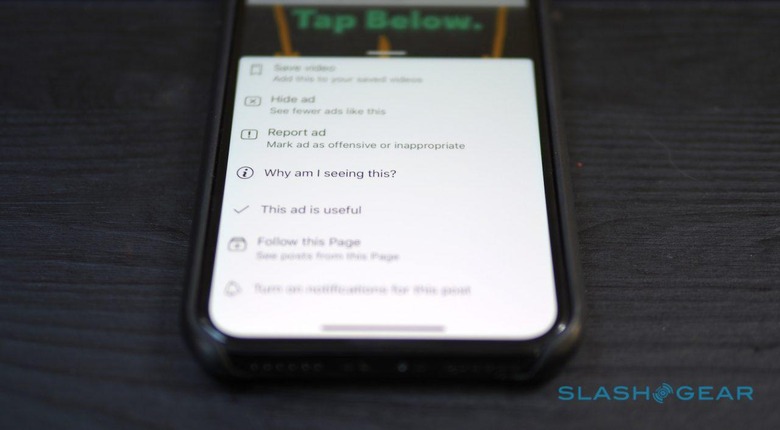

Facebook's targeting for those advertisers, however, has always been a black box. You tell the site whom you want to reach and how much you want to spend, and it handles the rest. That is consistent with the promise, repeated often over the past few weeks, that Facebook is not in the business of selling user data, but it doesn't mean targeted access to those users isn't available.

In statements about FBLearner Flow, Facebook argues that saying the system is used for marketing is a "mischaracterization," while pointing out that it has previously stated publicly that it does "use machine learning for ads." Mark Zuckerberg made similar comments about the nature of Facebook's business and its attitude toward privacy in his appearances before the US Congress this past week. Asked by Senator Orrin Hatch (R-UT) how the company could remain free, the CEO and founder replied, "We run ads." Facebook's business, in other words, is selling ads, not individuals' data.

As the lingering conspiracy theories about Facebook secretly turning on microphones and listening in on your conversations demonstrate, however, there's already no shortage of paranoia about just how well the social network knows you. Applying advanced AI to marketing may well be in keeping with Facebook's publicly stated commitments to individual user privacy. The problem is that, having been let down so many times before, we may simply not believe the company even when it really is telling the truth.