Dazzled By The Sun, NASA Taps AI To Give Its Solar Telescope An Eye-Test

Staring at the Sun is ill-advised if you want to keep your eyesight, but NASA's solar telescopes have some sneaky ways to avoid burn-out as the space agency gleans vital information about our closest star. The Solar Dynamics Observatory has been working for more than a decade, unlocking unprecedented details about the ferocious forces boiling away in the Sun, but new AI tech is making sure its solar eyesight is 20/20.

The SDO carries two primary imaging instruments, the Helioseismic and Magnetic Imager (HMI) and the Atmospheric Imaging Assembly (AIA). The latter trains a constant gaze on the Sun's atmosphere, capturing a shot every 12 seconds across 10 wavelengths of ultraviolet light.

The AIA might be more resilient than human eyes would be – damage to your eyes from staring at the Sun, known as solar retinopathy, can happen in under two minutes – but it still suffers under the Sun's sheer energy output. "Over time, the sensitive lenses and sensors of solar telescopes begin to degrade," NASA explains. "To ensure the data such instruments send back is still accurate, scientists recalibrate periodically to make sure they understand just how the instrument is changing."

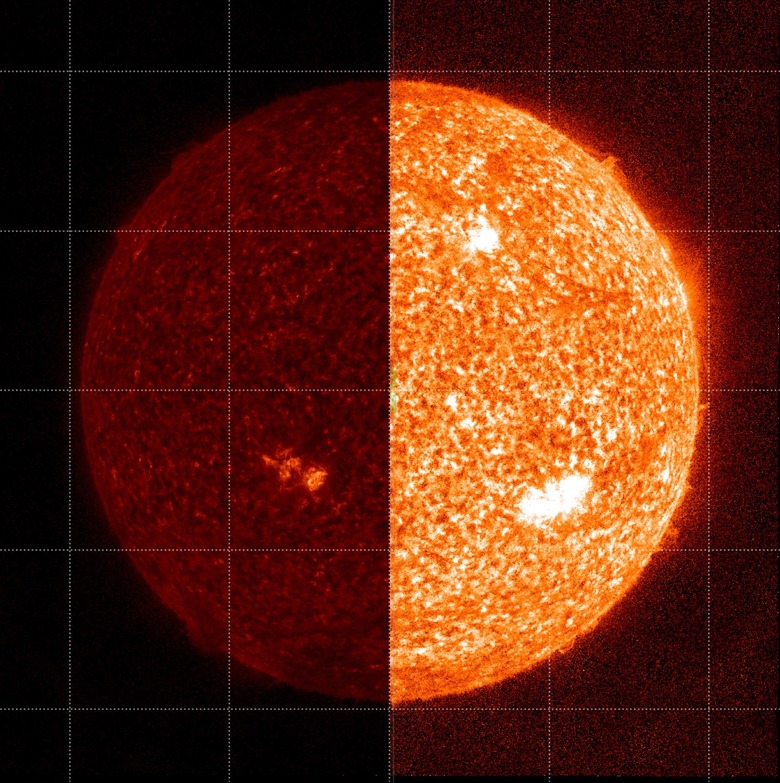

This SDO eye-test has so far relied on sounding rockets. These make brief, suborbital flights above the bulk of Earth's atmosphere – which protects us from much of the Sun's ultraviolet light – and measure the ultraviolet levels up there. Those readings are then compared with the AIA's measurements of the same light, and the AIA data is adjusted to account for instrument degradation. The image above shows the original AIA data on the left, and the version processed using sounding-rocket calibration on the right.
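To make that comparison concrete, here is a minimal Python sketch of the idea. It assumes a single, uniform dimming per wavelength channel, and that the rocket and AIA values are the same brightness measurement, taken at the same time and in the same units. The function names and numbers are hypothetical; this is an illustration, not NASA's actual calibration pipeline.

```python
import numpy as np


def degradation_factor(aia_signal: float, rocket_signal: float) -> float:
    """Fraction of its original sensitivity a channel still has.

    Hypothetical model: the rocket reading is treated as ground truth, and
    both values describe the same light in the same wavelength and units.
    """
    if rocket_signal <= 0:
        raise ValueError("rocket_signal must be positive")
    return aia_signal / rocket_signal


def correct_image(raw: np.ndarray, factor: float) -> np.ndarray:
    """Undo a uniform dimming by dividing every pixel by the factor."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return raw / factor


# Example: the rocket reads 100 units while AIA reads only 50,
# so this channel has kept half of its original sensitivity.
factor = degradation_factor(aia_signal=50.0, rocket_signal=100.0)
raw = np.array([[10.0, 25.0], [40.0, 5.0]])
corrected = correct_image(raw, factor)
print(factor)              # 0.5
print(corrected.tolist())  # [[20.0, 50.0], [80.0, 10.0]]
```

Real corrections are more involved than a single division; the point is only that the rocket supplies the reference against which the AIA's readings are rescaled, which is why the correction goes stale until the next flight.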

Problem is, you can't be constantly sending up sounding rockets. "That means there's downtime where the calibration is slightly off in between each sounding rocket calibration," NASA explains. Looking further ahead, deep space missions will also need to study powerful stars, but won't be able to rely on sounding rockets for their calibration.

The fix, detailed in a new paper today, is machine learning. By training an AI algorithm on images from past sounding rocket calibration flights, and telling it the correct amount of calibration for each, the researchers let the system learn how much calibration to apply on its own.
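The training step can be illustrated with a deliberately tiny stand-in. The sketch below is not the researchers' model: it swaps their machine learning system for plain least-squares regression on one hand-picked feature (the log of an image's mean brightness), and it uses synthetic images whose "correct" dimming factor is known, playing the role the rocket-derived calibration plays in the real training data. All names, distributions and numbers are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=0)


def synthetic_images(n: int, size: int = 32):
    """Hypothetical stand-in for AIA images paired with known calibration.

    Each clean image has a mean brightness scattered around 1.0 (solar
    activity varies), plus pixel noise, and is then dimmed by a random
    factor between 0.3 and 1.0. That factor is the label to predict.
    """
    factors = rng.uniform(0.3, 1.0, size=n)
    base = rng.lognormal(mean=0.0, sigma=0.1, size=n)  # clean mean brightness
    noise = rng.normal(0.0, 0.05, size=(n, size, size))
    clean = base[:, None, None] + noise
    return factors[:, None, None] * clean, factors


def features(images: np.ndarray) -> np.ndarray:
    """One feature per image (log mean brightness) plus a bias column."""
    log_mean = np.log(images.mean(axis=(1, 2)))
    return np.column_stack([log_mean, np.ones_like(log_mean)])


# "Training": fit log(factor) as a linear function of log(mean brightness).
train_images, train_factors = synthetic_images(500)
weights, *_ = np.linalg.lstsq(
    features(train_images), np.log(train_factors), rcond=None
)


def predict_factor(images: np.ndarray) -> np.ndarray:
    """Predicted dimming factor for each image."""
    return np.exp(features(images) @ weights)


# Check against held-out images whose true factor is known,
# much as the real predictions were checked against rocket flights.
test_images, test_factors = synthetic_images(200)
predicted = predict_factor(test_images)
rel_error = np.abs(predicted - test_factors) / test_factors
print(f"median relative error: {np.median(rel_error):.3f}")
```

This toy only works because the synthetic "Sun" has a predictable typical brightness, so the natural spread in solar activity sets its error floor. A real system has to learn far richer cues, which is where the multi-wavelength approach NASA describes comes in.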

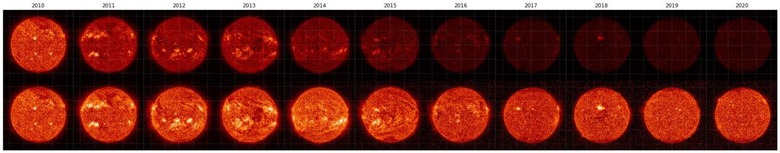

In the image above, the top row shows the raw data captured by the AIA in the years since it began observations. The bottom row shows the same data after being processed by the new machine learning algorithm.

"Because AIA looks at the Sun in multiple wavelengths of light, researchers can also use the algorithm to compare specific structures across the wavelengths and strengthen its assessments," NASA says. "To start, they would teach the algorithm what a solar flare looked like by showing it solar flares across all of AIA's wavelengths until it recognized solar flares in all different types of light. Once the program can recognize a solar flare without any degradation, the algorithm can then determine how much degradation is affecting AIA's current images and how much calibration is needed for each."

When the team checked the machine learning predictions against the actual calibration results from rocket launches, the AI's estimates closely matched. Now, the AIA team plans to use the trained algorithm to better adjust for changes in the instrument between future rocket flights.