Cerebras WSE's Giant Chip With 1.2 Trillion Transistors Is Made For AI

The prevailing trend in computing has been to shrink components, both to save space and to simplify manufacturing, without sacrificing the processing power needed for even the most demanding workloads. For AI and machine learning, however, the power required goes beyond what regular commercial processors built along those lines can offer. That is why the young company Cerebras has developed a new "AI-first" chip that throws that convention out the window with what is probably the largest chip ever built.

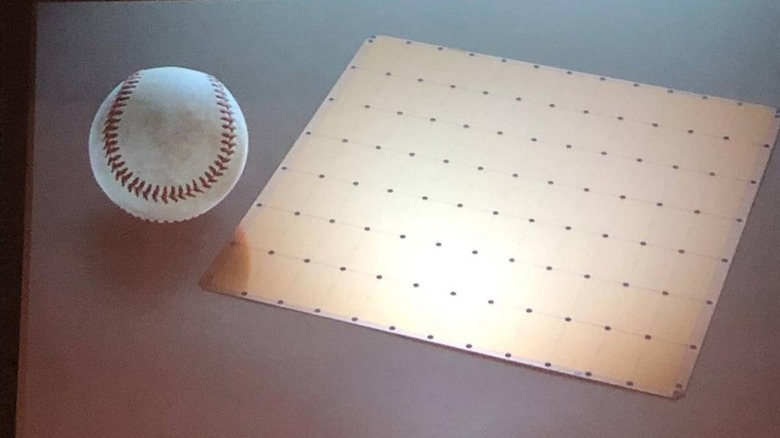

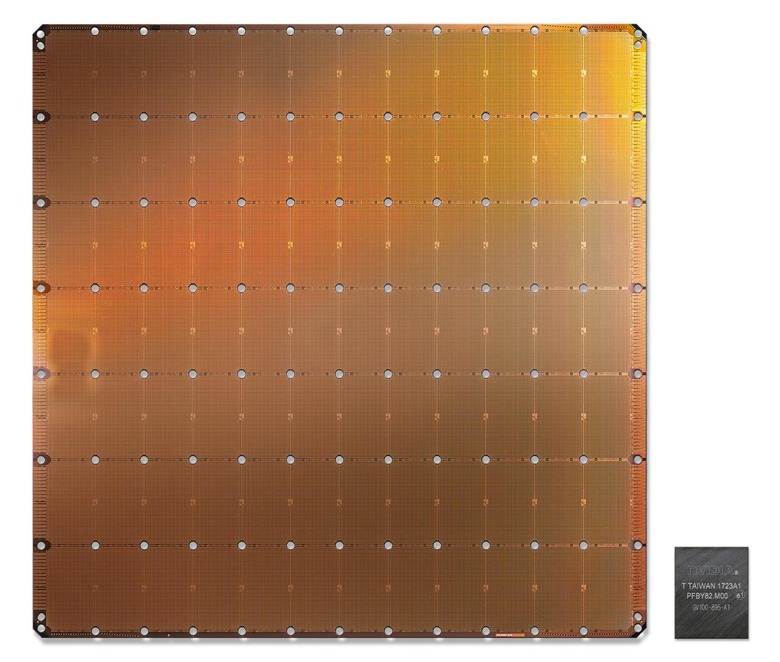

The first thing you might notice about Cerebras' "Wafer Scale Engine," or WSE, is that it's huge and looks unlike any chip you've seen in the past 30 years. It covers roughly 57 times the area of NVIDIA's largest graphics processing unit, which is also used for AI, and is more than six times the size of a baseball.

Its size isn't just a novelty, though. The wafer boasts 400,000 AI-optimized cores and 18 GB of superfast local SRAM. All in all, it has 1.2 trillion transistors crammed onto a 46,225 mm² piece of silicon. By comparison, that NVIDIA graphics chip has only 21.1 billion transistors.
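To put those figures in perspective, here is a quick back-of-the-envelope sketch in Python. It uses only the numbers quoted in this article; treating "18 GB" as decimal gigabytes is an assumption, and the constant and function names are purely illustrative.

```python
# Figures quoted in the article for the Cerebras WSE
WSE_TRANSISTORS = 1.2e12
WSE_AREA_MM2 = 46_225
WSE_CORES = 400_000
WSE_SRAM_BYTES = 18e9  # assumption: 18 GB read as decimal gigabytes

# Transistor count of the NVIDIA GPU cited in the article
GPU_TRANSISTORS = 21.1e9


def transistor_ratio() -> float:
    """How many times more transistors the WSE has than the cited GPU."""
    return WSE_TRANSISTORS / GPU_TRANSISTORS


def density_per_mm2() -> float:
    """Average number of transistors per square millimetre of the wafer."""
    return WSE_TRANSISTORS / WSE_AREA_MM2


def sram_per_core_kb() -> float:
    """On-chip SRAM divided evenly across all cores, in kilobytes."""
    return WSE_SRAM_BYTES / WSE_CORES / 1e3


if __name__ == "__main__":
    print(f"Transistor ratio vs. GPU: {transistor_ratio():.1f}x")
    print(f"Transistor density: {density_per_mm2() / 1e6:.1f} million per mm^2")
    print(f"SRAM per core: {sram_per_core_kb():.0f} KB")
```

Running it shows roughly 57 times as many transistors as the GPU, about 26 million transistors per square millimetre, and around 45 KB of SRAM sitting next to each core, which is the "memory immediately near the compute" idea in numeric form.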

The reasoning behind that large size is speed, efficiency, and lower latency. By laying out all the connections on a single sheet of silicon and placing all the memory right next to the cores, the Cerebras WSE shortens the distance data has to travel. That cuts latency and, in turn, reduces the "training time" AI models need to work through data and learn to produce answers.

It certainly sounds convincing in theory, but the Cerebras WSE will need real-world applications to back it up. Don't expect it to be available even to ordinary business customers: the size, production, and most likely the price of the Wafer Scale Engine will put it out of reach of anyone outside giant companies like Google. That said, the startup has made no mention of such customers lining up to put the chip to the test.