Apple's Major New Child Safety Features Detailed: Messages, Siri, And Search

Apple has introduced an expansive list of new features designed to help parents protect their children from potentially explicit and harmful material and to reduce the odds that a predator will be able to send such material to a child. The new features cover Messages, Siri, and Search, and include on-device AI analysis of incoming and outgoing images.

Protecting young users

The new child safety features focus on three areas, Apple explained in an announcement today, noting that it developed the tools in collaboration with child safety experts. The three areas are new safety tools in the company's Messages platform, the use of cryptography to limit the spread of Child Sexual Abuse Material (CSAM) without violating users' privacy, and expanded help and resources in Siri and Search when 'unsafe situations' are encountered.

"This program is ambitious, and protecting children is an important responsibility," Apple said as part of its announcement, explaining that it plans to 'evolve and expand' its efforts over time.

Messaging tools for families

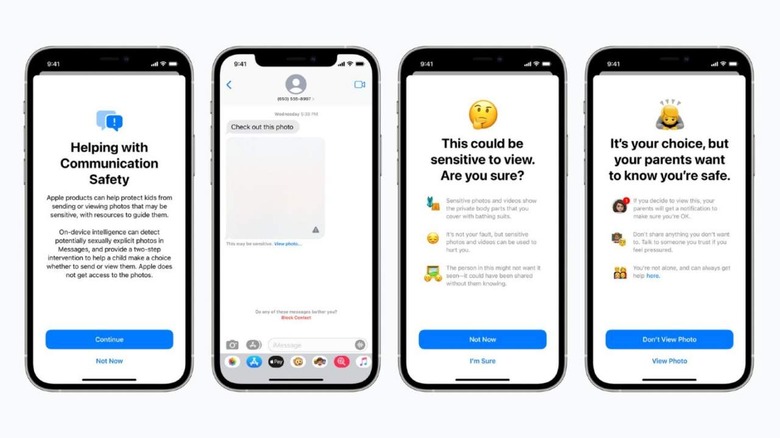

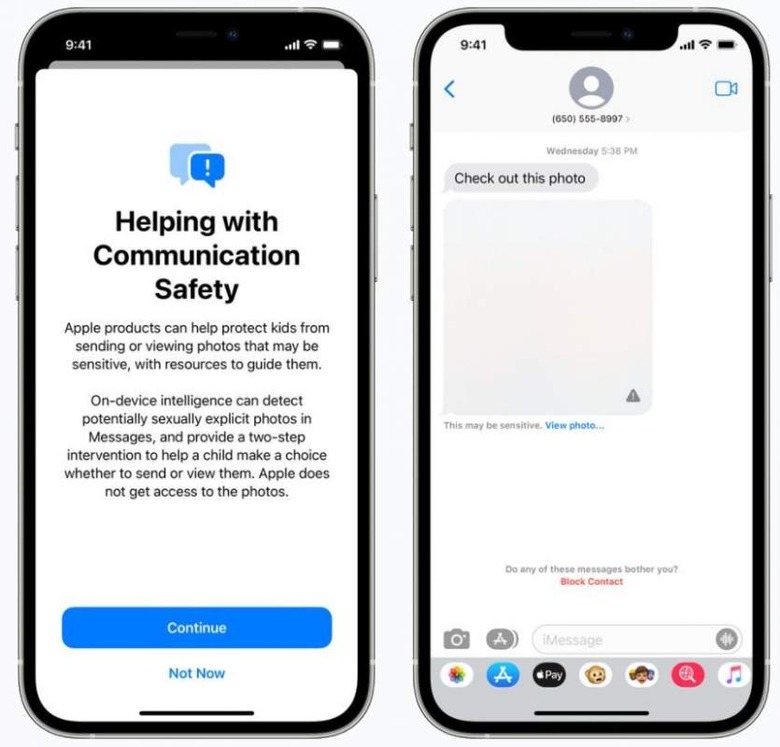

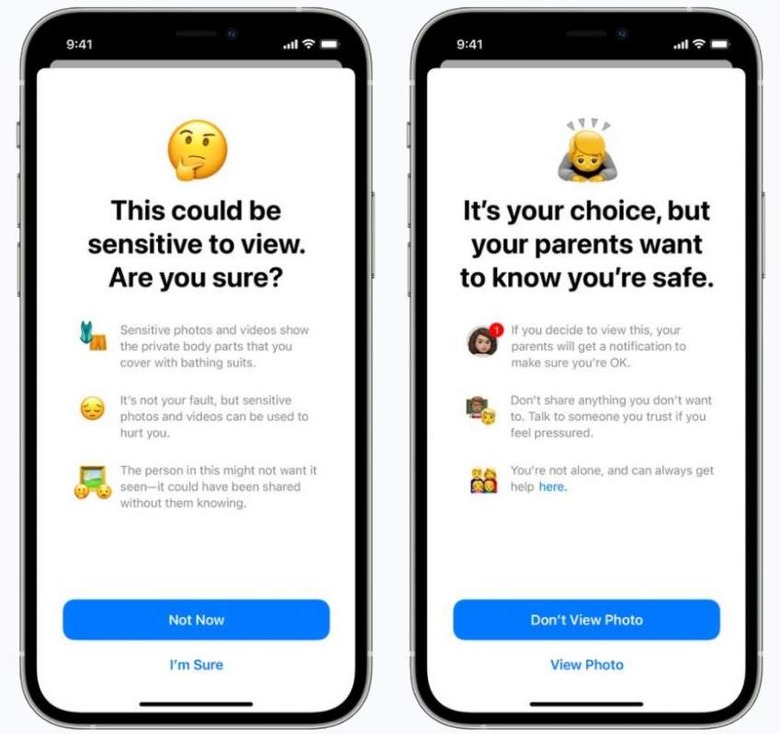

Once the new features are available, Apple says its Messages app will add tools that warn both parents and children when sexually explicit images are sent or received. If a child receives an image flagged as explicit, the content will automatically be blurred, and the child will be shown a warning about what they may see if they open the attachment, along with resources to help them deal with it.

Likewise, if Apple's on-device AI-based image analysis determines that a child may be sending a sexually explicit image, Messages will first show a warning before the content is sent. Because the image analysis takes place on the device, Apple will not have access to the actual content.

Apple explains that parents will have the option of receiving an alert if their child decides to click on and view a flagged image, as well as when their child sends an image the AI detected as potentially sexually explicit. The features will be available to accounts that were set up as families.

Using AI to detect CSAM while protecting privacy

Building upon that, Apple is also targeting CSAM images that may be stored in a user's iCloud Photos account using "new technology" arriving in future iPadOS and iOS updates. The system will detect known CSAM images and enable Apple to report those cases to the National Center for Missing and Exploited Children (NCMEC).

To detect these images without violating the user's privacy, Apple explains that it won't scan cloud-stored images. Rather, the company will use a database of known CSAM image hashes from NCMEC and similar organizations to conduct on-device matching.

The "unreadable set of hashes" is stored locally on the user's device. Apple explains that the device checks each image against this database before the image is uploaded to iCloud Photos.
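The basic idea of checking an image against a local hash database before upload can be sketched in a few lines of Python. This is a simplified illustration only, not Apple's implementation: it uses a plain SHA-256 digest of the file bytes as a stand-in for Apple's image hash, keeps the database as an ordinary readable set rather than an "unreadable" one, and the function names (`image_hash`, `build_hash_database`, `matches_known_hash`) are hypothetical.

```python
import hashlib


def image_hash(image_bytes: bytes) -> str:
    # ASSUMPTION: SHA-256 of the raw bytes stands in for the real image hash.
    # An exact digest like this only matches byte-identical files.
    return hashlib.sha256(image_bytes).hexdigest()


def build_hash_database(known_images: list[bytes]) -> frozenset[str]:
    # The database holds only hashes, never the images themselves.
    return frozenset(image_hash(img) for img in known_images)


def matches_known_hash(image_bytes: bytes, database: frozenset[str]) -> bool:
    # Runs on the device before upload: hash the image and look it up locally.
    return image_hash(image_bytes) in database


# Placeholder byte strings stand in for image files.
database = build_hash_database([b"known-image-1", b"known-image-2"])
flagged = matches_known_hash(b"known-image-1", database)
not_flagged = matches_known_hash(b"holiday-photo", database)
```

In this toy version the device sees the match result directly; in the system Apple describes, the result is instead hidden inside an encrypted voucher, as explained next.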

Apple elaborates on the technology behind this, going on to explain:

"This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. The device creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image. This voucher is uploaded to iCloud Photos along with the image."

That system is paired with threshold secret sharing, meaning that Apple can't interpret the safety vouchers' content unless the account crosses a threshold of "known CSAM content." Apple says the threshold is set so that the system is extremely accurate, with a less than one-in-one-trillion chance per year that a given account will be mistakenly flagged.
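Threshold secret sharing is a general cryptographic technique, and a minimal sketch of one well-known scheme, Shamir's secret sharing, shows why nothing can be read below the threshold. This is not Apple's code: the prime modulus, the function names, and the idea that each voucher contributes one share of a per-account key are illustrative assumptions.

```python
import secrets

# ASSUMPTION: a Mersenne prime chosen for illustration as the field modulus.
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int) -> list[tuple[int, int]]:
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, highest degree first
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    # Lagrange interpolation evaluated at x = 0 yields the constant term.
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical account key split so that any 3 of 5 shares recover it.
account_key = 123456789
shares = split_secret(account_key, threshold=3, num_shares=5)
recovered = recover_secret(shares[:3])
```

With three or more shares, `recover_secret` returns the original key. With only two, the interpolation produces an unrelated value, which is the property that keeps vouchers unreadable until enough matches accumulate.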

If an account does exceed this threshold, Apple will then be able to interpret the safety vouchers' content. The company will manually review each report to confirm the match, then disable the account and send a report to NCMEC. An appeals option will be available for users who believe their account was disabled by mistake.

Siri's here to help

Finally, the new features are coming to Search and Siri. In this case, parents and children will see expanded guidance in the form of additional resources. This will include, for example, the ability to ask Siri how to report CSAM they may have discovered.

Beyond that, both products will also soon intervene in cases where a user searches or asks for content related to CSAM, according to Apple. "These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue," the company explains.

Wrap-up

The new features are designed to help address the growing need to protect young users from the Internet's vast array of harmful content while still letting them safely use the technology for education, entertainment, and communication. The technology may also help reassure parents who would otherwise be concerned about letting their children use these devices.

Apple says its new child safety features will arrive with the iOS 15, iPadOS 15, watchOS 8, and macOS Monterey updates scheduled for release later this year.