Apple Child Safety FAQ Pushes Back At Privacy Fears

Apple has published a new FAQ on its child abuse scanning system, having prompted controversy last week with the announcement that it would use AI to spot potentially harmful or illegal material on iPhone and other devices. The system aims to identify signs of possible child grooming, or shared illicit photos and videos, though it caused concerns that the technology could eventually be misused.

The goal, Apple says, is "to protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM)." However, the way in which Apple planned to do that met with privacy pushback, with WhatsApp and others branding the system "surveillance tech."

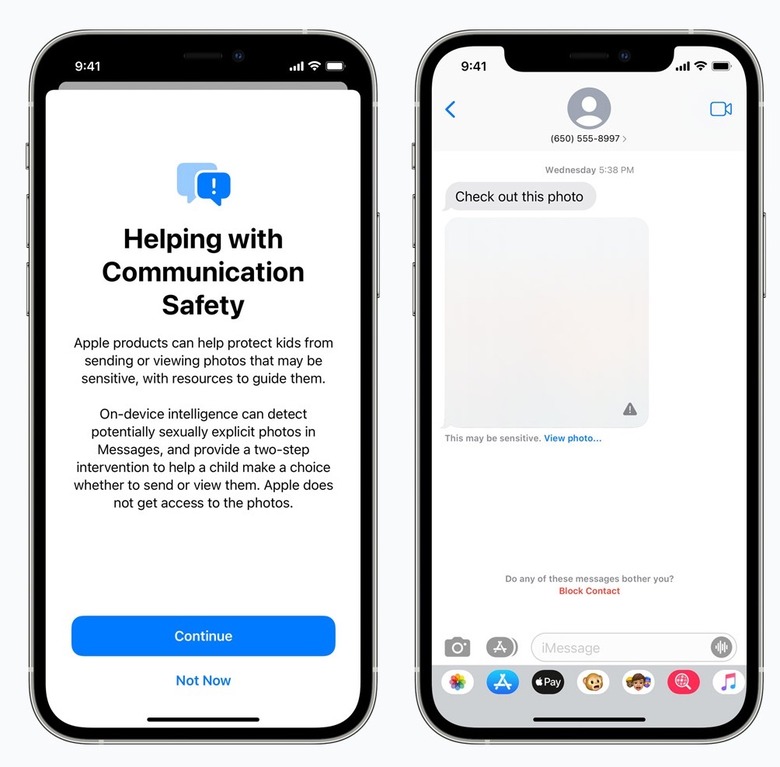

Part of the problem is that Apple in fact announced two systems at the same time, and in some cases the details of the two were conflated. Communication safety in Messages, and CSAM detection in iCloud Photos, "are not the same and do not use the same technology," Apple points out in its new document.

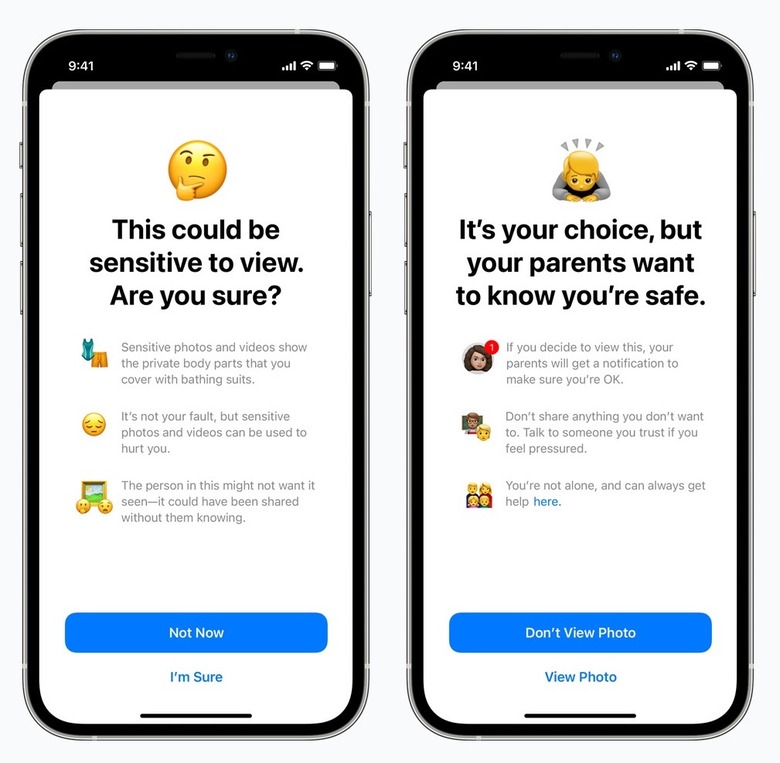

For the Messages feature, the scanning works on images sent or received in the Messages app, and only on child accounts set up in Family Sharing. Analysis is carried out on-device, for privacy purposes; images deemed to be explicit will be blurred, and viewing them made optional. "As an additional precaution," Apple adds, "young children can also be told that, to make sure they are safe, their parents will get a message if they do view it."

Parents or guardians must opt in to the scanning system, and the parental notifications can only be enabled for child accounts aged 12 or younger. Should a photo be flagged, no details are shared with law enforcement or other agencies; even Apple isn't notified. "None of the communications, image evaluation, interventions, or notifications are available to Apple," the company insists.
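The conditions Apple describes for the Messages feature can be summed up as a pair of checks. The following is only a minimal sketch of the rules as reported here, not Apple's implementation; the `ChildAccount` type, its field names, and both functions are hypothetical, introduced purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class ChildAccount:
    """Hypothetical model of an account; every field name is an assumption."""
    age: int
    in_family_sharing: bool               # set up as a child account in Family Sharing
    parent_opted_in: bool                 # parent or guardian enabled the feature
    parent_notifications_on: bool = False


def image_analysis_active(account: ChildAccount) -> bool:
    # On-device analysis applies only to Family Sharing child accounts
    # whose parent or guardian has opted in.
    return account.in_family_sharing and account.parent_opted_in


def parent_notified_on_view(account: ChildAccount) -> bool:
    # Parental notifications can only be enabled for ages 12 or younger.
    return (
        image_analysis_active(account)
        and account.parent_notifications_on
        and account.age <= 12
    )
```

Under these assumptions, a 15-year-old's account could still have explicit images blurred, but `parent_notified_on_view` would return `False` for it regardless of the notification setting.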

As for CSAM detection in iCloud Photos, that only impacts users who have opted into iCloud Photos. On-device data – and the Messages system specifically – are not affected. It looks for potentially illegal CSAM images uploaded to iCloud Photos, though no information beyond the fact that a match has been made is shared with Apple.

Photos on the iPhone camera roll aren't scanned with the system, only those uploaded to iCloud Photos, and CSAM comparison images aren't stored on the iPhone either. "Instead of actual images, Apple uses unreadable hashes that are stored on device," the company explains. "These hashes are strings of numbers that represent known CSAM images, but it isn't possible to read or convert those hashes into the CSAM images they are based on."
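To illustrate the general idea of matching against a list of hashes rather than a library of images, here is a minimal sketch. It makes a loud assumption: it swaps in SHA-256, a standard cryptographic hash, purely as a stand-in, because this article does not describe Apple's actual hashing scheme. A real image-matching system would also need to tolerate resizing or re-encoding, which SHA-256 does not. The function names and sample byte strings are hypothetical.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return a one-way digest of the image data (SHA-256 as a stand-in)."""
    return hashlib.sha256(image_bytes).hexdigest()


# Hypothetical placeholder data standing in for a list supplied by a third party.
# Only the digests are kept; the original bytes are not needed for matching.
known_hashes = frozenset(
    fingerprint(sample) for sample in (b"known-image-A", b"known-image-B")
)


def matches_known(image_bytes: bytes, hash_list: frozenset) -> bool:
    """Check whether an image's digest appears in the list of known digests."""
    return fingerprint(image_bytes) in hash_list
```

The point of the sketch is that `known_hashes` holds only fixed-length strings: a match can be tested with a set lookup, but there is no practical way to turn a digest back into the image it came from.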

One of the biggest concerns, perhaps unsurprisingly, has been that this scanning system could be the start of a slippery slope. While used initially for CSAM images, the same technology could, it's suggested, appeal to authoritarian governments that might pressure Apple to add non-CSAM images to the scanning list.

"Apple will refuse any such demands," the company insists. "We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future."

Whether that commitment reassures you will probably depend on where you fall on the general Big Tech Trust scale. Apple points out that CSAM flags aren't automatically reported to law enforcement, and that human review is carried out before anything is reported to the National Center for Missing & Exploited Children (NCMEC). At the same time, Apple does not add CSAM image hashes itself, but instead uses a set that has "been acquired and validated by child safety organizations."