Here's How Apple Is Making AR Objects Far More Believable

Apple is using machine learning to make augmented reality objects more realistic in iOS 12, with smart environment texturing that can predict reflections, lighting, and more. The new feature, being added in ARKit 2.0, uses on-device processing to better integrate virtual objects with their real-world surroundings, blurring the lines between what's computer-generated and what's authentic.

Currently, if you have a virtual object in a real-world scene, like a metal bowl on a wooden table, the wood's texture won't be reflected in the metal bowl. What environment texturing does is pick up details in the surrounding physical textures, and then map those onto the virtual objects. So, the metal bowl would slightly reflect the wooden surface it was sitting on; if you put a banana down next to the bowl, there'd be a yellow reflection added too.

Environment texturing gathers the scene texture information, generally – though not always – representing that as the six sides of a cube. Developers can then use that as texture information for their virtual objects. Using computer vision, ARKit 2.0 extracts texture information to fill its cube map, and then – when the cube map is filled – can use each facet to understand what would be reflected on each part of the AR object.
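To make the cube-map idea concrete, here is a minimal sketch in plain Python of how a renderer might decide which facet a point on a shiny object reflects. It is not ARKit code: the function names `reflect` and `cube_face`, the face labels, and the coordinate convention (+y up) are all assumptions chosen for illustration. The view direction is mirrored about the surface normal, and the dominant axis of the mirrored direction picks one of the six faces.

```python
def reflect(view, normal):
    """Mirror a view direction about a unit surface normal: r = v - 2(v.n)n."""
    d = sum(v * n for v, n in zip(view, normal))
    return tuple(v - 2.0 * d * n for v, n in zip(view, normal))


def cube_face(direction):
    """Return the cube-map face a non-zero direction points at.

    Faces are labeled by axis and sign; the axis with the largest
    absolute component wins.
    """
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return "+x" if x > 0 else "-x"
    if ay >= az:
        return "+y" if y > 0 else "-y"
    return "+z" if z > 0 else "-z"


# Looking straight down at an upward-facing surface reflects what is overhead.
top_of_bowl = cube_face(reflect((0.0, -1.0, 0.0), (0.0, 1.0, 0.0)))

# Looking sideways at the bowl's underside (normal tilted down and toward
# the viewer) reflects the table beneath it.
s = 2 ** -0.5
underside = cube_face(reflect((1.0, 0.0, 0.0), (-s, -s, 0.0)))
```

In this sketch, the wooden table from the earlier example would live on the "-y" face, which is why the underside of the bowl picks up its texture.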

That, however, would require a complete, 360-degree scan of the space before the cube map could be filled. Since that's impractical, ARKit 2.0 can use machine learning to fill in the gaps in the cube map, by predicting the textures that are missing. It's all done in real-time and entirely on-device.
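The gap-filling step can be pictured with a deliberately crude stand-in. The sketch below is plain Python, not ARKit, and it does not use machine learning: it simply fills each unobserved face with the average color of the faces that have been seen. Apple's predictor is a learned model whose details the article does not describe, so the function name `fill_missing_faces`, the one-color-per-face representation, and the averaging rule are all assumptions made for illustration.

```python
FACES = ("+x", "-x", "+y", "-y", "+z", "-z")


def fill_missing_faces(cube_map):
    """Return a complete six-face map, filling unseen faces with a guess.

    cube_map maps a face label to an (r, g, b) tuple, or omits the face
    (or maps it to None) if the camera has not observed it yet. The guess
    here is the per-channel mean of the observed faces, a naive placeholder
    for a learned prediction.
    """
    known = [c for c in (cube_map.get(f) for f in FACES) if c is not None]
    if not known:
        raise ValueError("need at least one observed face")
    mean = tuple(sum(channel) / len(known) for channel in zip(*known))
    filled = {}
    for face in FACES:
        color = cube_map.get(face)
        filled[face] = color if color is not None else mean
    return filled


# Only the table below and the wall ahead have been scanned so far.
observed = {"-y": (200, 100, 0), "+z": (100, 100, 200)}
filled = fill_missing_faces(observed)
```

Observed faces pass through unchanged, so as the user scans more of the room, real texture data progressively replaces the guesses.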

Of course, few AR experiences involve sitting perfectly still. As a result, ARKit 2.0's environment texturing can track physical movement and adjust its predictions of absent textures accordingly. Likewise, as the virtual objects move, their cube maps shift to change the reflections applied to their virtual surfaces.

It's astonishing how much of a difference it makes to how well augmented reality objects bed into their real surroundings. Certainly, we're still some way from digitally generated graphics being indistinguishable from their authentic counterparts: since Apple is doing all the rendering locally, rather than in the cloud, there's the headroom of the iPhone or iPad GPU to consider. Still, the AR objects look far more realistic as participants in the real-world scene than before.

As with ARKit more broadly, what makes this new environment texturing particularly powerful is how little third-party app developers will actually have to do to integrate elements like reflections, shadows, and more into their augmented reality experiences. The cube map is generated automatically, and kept up to date as the user moves around the space. In many cases it's just a couple of lines of code that are required to then make use of that texture data.

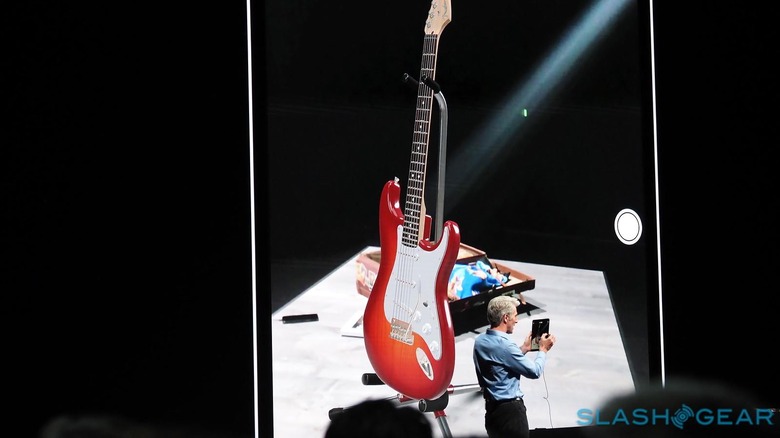

Apple's big AR demonstration for WWDC 2018 is a multiplayer game involving looking "through" an iPad display and toppling wooden blocks added digitally to a real table. All the same, I can't help but think of what implications this texture mapping will have on tomorrow's augmented reality devices, such as the smart glasses Apple is believed to be working on.

Then, the degree of realism of the virtual objects will be all the more important. After all, when you're wearing AR glasses, anything that looks visibly out of place will be all the more jarring than it would be on the screen of your iPhone or iPad. Apple may not be talking about that today, but it's not hard to see that this route to more lifelike virtual objects – in combination with things like persistent, shared maps of AR spaces – will be instrumental in making more immersive augmented reality experiences that don't have an iPhone in-between.