Android Wear May Soon Be Sweeter Than iPhone X

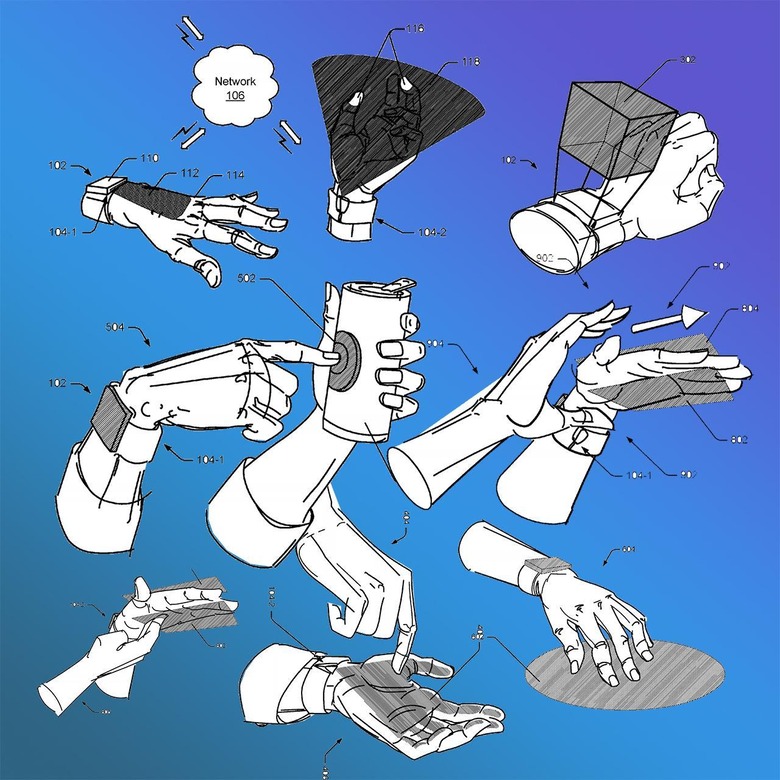

Radar sensing that plays a role similar to the iPhone X's TrueDepth camera (see: Face ID) may be employed on the next generation of Android Wear devices. In a set of concept drawings, Google shows how radar could make the next generation of wearable devices not only more usable, but more useful, too. Google's patent "Radar-Based Gesture-Recognition Through A Wearable Device" might well be what turns that future of Android Wear into reality.

Radar Wrist

The radar technology in Google's presentation sits in a wrist-worn device. This device is not necessarily the only form Google might use, but the drawings show a couple of ways the idea could be executed: one version emits radio waves from the underside of the wrist, the other does the same from the top of the wrist.

With this radar system, the user makes gestures to command devices to execute tasks. For example, you might put up one finger to have your lamp turn on with a yellow light, and two fingers might switch that light to blue. The possibilities are limited only by the devices the user has on hand and the user's imagination in creating commands.
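The patent drawings don't describe any software, but the lamp example boils down to a lookup table from recognized gestures to device commands. Below is a minimal sketch, assuming the radar has already reduced a gesture to a count of raised fingers; the `Command` type, the `GESTURE_BINDINGS` table, and `command_for` are hypothetical names for illustration, not anything from Google's patent.

```python
from dataclasses import dataclass
from typing import Dict, Optional


# Hypothetical command type: which device to address, what to do, and which color.
@dataclass(frozen=True)
class Command:
    device: str
    action: str
    color: str


# Hypothetical user-defined bindings from a recognized gesture (here simply the
# number of raised fingers) to a device command, mirroring the lamp example above.
GESTURE_BINDINGS: Dict[int, Command] = {
    1: Command(device="lamp", action="turn_on", color="yellow"),
    2: Command(device="lamp", action="set_color", color="blue"),
}


def command_for(finger_count: int) -> Optional[Command]:
    """Return the command bound to a gesture, or None if nothing is bound to it."""
    return GESTURE_BINDINGS.get(finger_count)


if __name__ == "__main__":
    for fingers in (1, 2, 3):
        print(fingers, command_for(fingers))
```

Keeping the bindings in a plain dictionary reflects the idea that the user, not the device maker, decides what each gesture means; adding a new command is just adding an entry.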

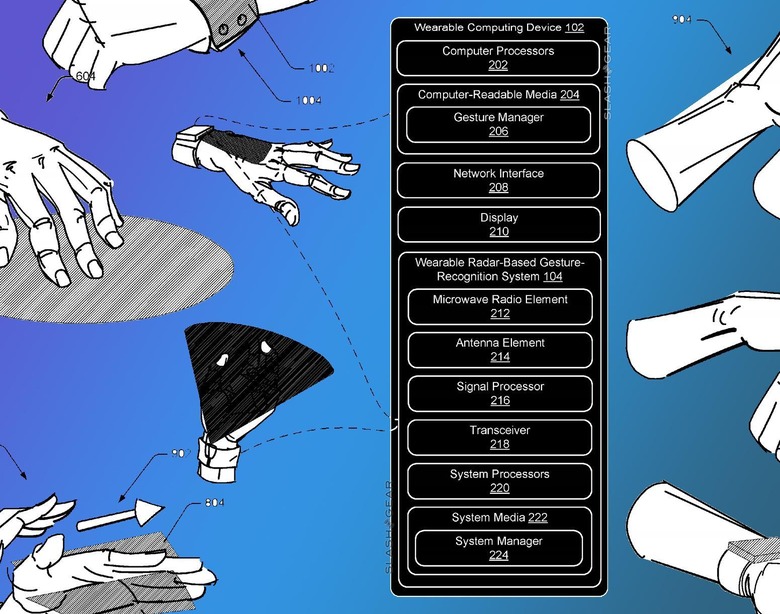

As Google shows, this Wearable Computing Device requires a number of elements: its own processor, as well as Gesture Manager software. The device Google shows also has a Network Interface and a Display (to show some form of visual confirmation that each gesture has been executed correctly).
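How those pieces might hand off to one another can be sketched in a few lines. This is a hypothetical illustration, assuming the processor has already turned radar data into a gesture label: the `GestureManager`, `NetworkInterface`, and `Display` classes below are stand-ins named after the patent's components, and the command strings are made up. The network interface only records messages and the display only stores its last confirmation, so the flow can run anywhere.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class NetworkInterface:
    """Stand-in for the network interface: records outgoing messages instead of sending them."""
    sent: List[str] = field(default_factory=list)

    def send(self, message: str) -> None:
        self.sent.append(message)


@dataclass
class Display:
    """Stand-in for the display: keeps the last confirmation shown to the user."""
    last_message: str = ""

    def show(self, message: str) -> None:
        self.last_message = message


@dataclass
class GestureManager:
    """Hypothetical Gesture Manager: looks up the command bound to a recognized
    gesture, sends it over the network interface, and confirms on the display."""
    bindings: Dict[str, str]
    network: NetworkInterface
    display: Display

    def handle(self, gesture: str) -> bool:
        command = self.bindings.get(gesture)
        if command is None:
            # Unbound gesture: tell the user and send nothing.
            self.display.show(f"Unrecognized gesture: {gesture}")
            return False
        self.network.send(command)
        self.display.show(f"Done: {command}")
        return True


if __name__ == "__main__":
    manager = GestureManager(
        bindings={"one_finger": "lamp:on:yellow", "two_fingers": "lamp:color:blue"},
        network=NetworkInterface(),
        display=Display(),
    )
    manager.handle("two_fingers")
    print(manager.network.sent, manager.display.last_message)
```

The display confirmation is what closes the loop for the user: without a touchscreen tap to feel, the screen is the only sign that a mid-air gesture was read correctly.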

This device has two elements that play roles very similar to the iPhone X's signal blaster and signal reader. One is a Microwave Radio Element and the other is an Antenna Element; there's also a Signal Processor and a Transceiver. Basically, Google has all the important parts of the iPhone X notch in play here, in a device first described in a string of patents dating back to June 2014.

Project Soli

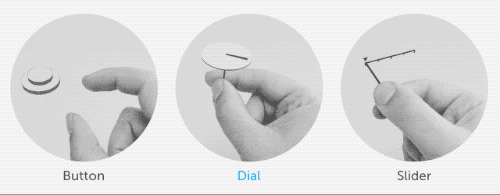

One avenue Google took this technology down was a Google ATAP project called Soli. I wrote a little bit about it back in September of 2017, and back then I presented it as a largely gesture-based system, as if the gestures were the most important part of the equation. Now, at the tail end of 2017, Apple seems to have shifted where the interest lies, and any technology that lives in the iPhone X "notch zone" is worth taking a peek at.

Above you'll see just one example of what Project Soli is all about: making your hand do the work. Instead of controlling everything through the flat plane of a touchscreen, the whole hand can control a computer in 3D space.

Below you'll see a short video on Project Soli. The project is part of Google ATAP, and its developer resources are housed over at Google ATAP / Soli.

The video above shows what developers had achieved as of its publication, about a year ago. At that stage the technology appears as a rather large set of boards and such; Google's wearable gesture-recognition radar tech is much smaller.

Where from here?

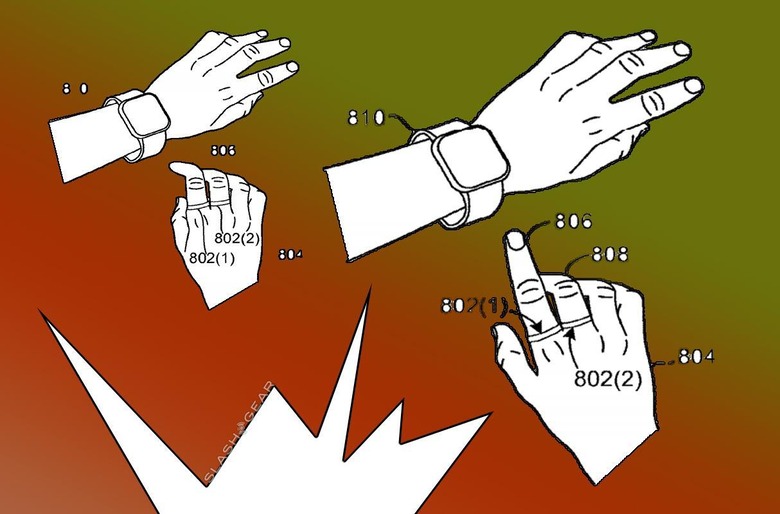

Now that the 3D sensor floodgates have opened, Android Wear hardware might not be far behind. It all depends on whether there's enough demand for devices like these: that tech in a smartwatch, a wristband, or even a ring!

Microsoft has an interest in this technology as well. As of January 2017 it had its own patents in place for a Smart Ring (drawings shown above), having also worked on them since 2014. That tech would require "flexion sensors" as well as a screen. Have a peek at the device simply known as RING to get an idea of what the most rudimentary of these devices amounted to a few years ago.

Stick around the SlashGear Wearable Reviews and News Portal for more action, much of it from the past. The next move Google makes in the Android Wear universe might well be its most revolutionary yet!