Amazon Alexa, Siri, Google Assistant Hacked With Laser In Major Security Flaw

Ever since smart speakers started hitting shelves, there's been no lack of people raising security concerns, whether those have to do with flaws in hardware and software or just general privacy issues. Today, a team of researchers is detailing a new vulnerability that exists in many smart speakers – along with some phones and tablets – that could potentially allow hackers to issue voice commands from far away.

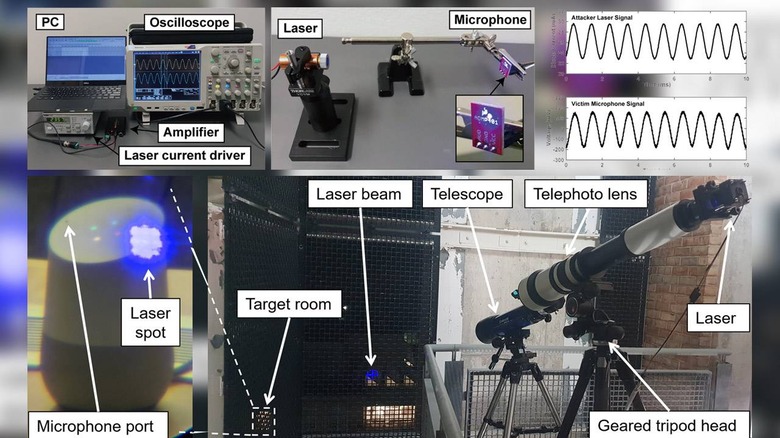

The team, made up of researchers from The University of Electro-Communications in Tokyo and the University of Michigan, detailed this vulnerability in a new paper titled "Light Commands: Laser-Based Audio Injection Attacks on Voice-Controllable Systems." That paper is available through a new website dedicated to explaining these so-called Light Commands, which essentially use lasers to feed bogus commands to smart speakers.

"Light Commands is a vulnerability of MEMS microphones that allows attackers to remotely inject inaudible and invisible commands into voice assistants, such as Google assistant, Amazon Alexa, Facebook Portal, and Apple Siri using light," the researchers wrote. "In our paper we demonstrate this effect, successfully using light to inject malicious commands into several voice controlled devices such as smart speakers, tablets, and phones across large distances and through glass windows."

The Light Commands vulnerability seems to be something of a perfect storm: since hackers can use lasers to activate smart speakers, tablets, or phones, they don't need to be within the typical pick-up range of the device's microphones. Since the exploit still works even when aiming a laser through a window, hackers don't even need access to the smart speaker before they carry out this attack, and they could potentially do this without alerting the owner of the device, as commands issued to speakers, phones, or tablets in this way would be inaudible.

"Microphones convert sound into electrical signals," the Light Commands website reads. "The main discovery behind light commands is that in addition to sound, microphones also react to light aimed directly at them. Thus, by modulating an electrical signal in the intensity of a light beam, attackers can trick microphones into producing electrical signals as if they are receiving genuine audio."
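To make the idea in that quote concrete, here is a minimal Python sketch of intensity modulation: an audio waveform is mapped onto the brightness of a light beam, and a toy "microphone" recovers it by discarding the constant part of the signal. This is a simplified illustration and not the researchers' actual setup; the function names, the `bias` and `depth` values, and the mean-removal microphone model are all assumptions made for the example.

```python
import math


def modulate_intensity(audio, bias=0.5, depth=0.4):
    """Map audio samples onto a light intensity that never goes negative.

    bias is the resting brightness and depth is how far the audio swings
    the brightness around it (both are hypothetical, illustrative values).
    """
    peak = max(abs(s) for s in audio) or 1.0
    return [bias + depth * (s / peak) for s in audio]


def toy_microphone(intensity):
    """Toy model of the microphone: keep only the varying part of the light.

    Assumption: the microphone output tracks changes in intensity, so we
    simply subtract the average brightness.
    """
    mean = sum(intensity) / len(intensity)
    return [x - mean for x in intensity]


# A 440 Hz test tone standing in for a spoken command.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(800)]

beam = modulate_intensity(tone)
recovered = toy_microphone(beam)
```

In this toy model, `recovered` is just a scaled copy of `tone`, which is the sense in which the microphone produces electrical signals "as if" it had heard real audio.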

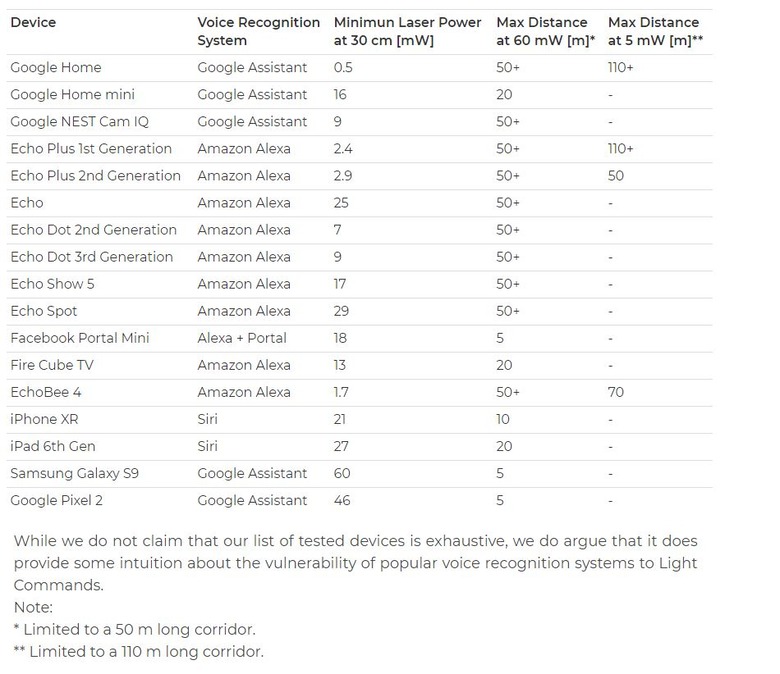

Researchers tested a broad range of devices, including smart home speakers from Google, Amazon, and Facebook, along with phones and tablets like the iPhone XR, 6th-gen iPad, Galaxy S9, and Google Pixel 2. They discovered that in some cases, this exploit can still work from as far as 110 meters away – which was the longest hallway they had available to them as they were testing.

Obviously, there are a number of factors that could prevent this exploit from working. At a range as long as 110 meters, hackers would likely need a telescopic lens and tripod to focus the laser. As far as smartphones and tablets are concerned, many of them will only respond to the user's own voice, making this exploit significantly harder to carry out on those devices.

However, on smart speakers that don't have voice recognition like that active, there's potential for hackers to do some pretty nasty things with this exploit. Assuming a smart speaker is visible from a window, hackers could use Light Commands to unlock smart doors, garage doors, and car doors. Hackers could also use this exploit to make purchases, or really carry out any command those smart speakers recognize.

The good news is that the researchers say there's been no evidence so far of these exploits being used in the wild. They also note that there are ways for manufacturers to prevent these attacks, perhaps by adding an extra layer of authentication to voice requests (like asking the user a randomized question before carrying out the action). Manufacturers could also patch smart speakers to ignore commands that are picked up by only a single microphone, as this exploit targets just one microphone at a time.
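As a rough illustration of the single-microphone idea, the Python sketch below compares signal levels across a device's microphones and flags a command when one channel is far louder than all the others. This is a hypothetical heuristic for illustration only, not any manufacturer's actual fix; the function names and the `ratio_threshold` value are assumptions.

```python
def rms(samples):
    """Root-mean-square level of one microphone channel."""
    return (sum(s * s for s in samples) / len(samples)) ** 0.5


def looks_like_single_mic_injection(channels, ratio_threshold=10.0):
    """Return True when only one microphone appears to hear the command.

    channels is a list of per-microphone sample lists. The threshold is
    an assumed value; a real device would need to tune it.
    """
    levels = sorted((rms(ch) for ch in channels), reverse=True)
    if len(levels) < 2 or levels[0] == 0:
        return False  # nothing to compare, or silence everywhere
    loudest, second = levels[0], levels[1]
    return second == 0 or loudest / second >= ratio_threshold


# Real speech reaches every microphone at a similar level...
spoken = [[0.30, -0.28, 0.31], [0.27, -0.30, 0.29], [0.29, -0.27, 0.30]]
# ...while a laser aimed at one microphone leaves the others near silent.
lasered = [[0.30, -0.28, 0.31], [0.01, -0.01, 0.01], [0.01, 0.01, -0.01]]

spoken_flagged = looks_like_single_mic_injection(spoken)
lasered_flagged = looks_like_single_mic_injection(lasered)
```

A device could refuse to act, or fall back to the extra authentication step, whenever a command is flagged this way.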

For its write-up about this vulnerability, Wired reached out to Google, Apple, Facebook, and Amazon. While Apple declined to comment and Facebook hadn't responded, both Amazon and Google said that they were reviewing the research presented in this paper and looking into the issue. Hopefully that means a fix is on the way, because if left unchecked, this vulnerability could make any smart speaker within sight of a window a liability for users.