Amazon Alexa Recorded Audio Clips Aren't As Secret As You Think [Update]

It's amazing what we can literally tell computers, phones, and even appliances to do these days. Once only the stuff of science fiction, voice commands are becoming common in almost every new device or service. That progress didn't happen magically overnight, and companies like Google, Amazon, and Apple do say that your voice recordings may be randomly selected for analysis to improve the service. What they don't say, however, is that humans are involved in that analysis, listening to those presumably private recordings.

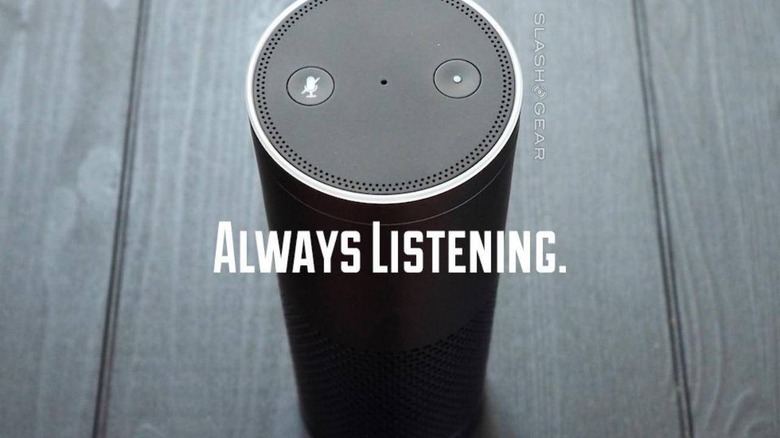

Smart speakers and voice-controlled AI assistants have raised privacy red flags right from the start. Their private home setting and always-listening mode have sent shivers down advocates' spines. But while the companies have assured users that their data is more or less protected and private, they may not always disclose everything and everyone involved in handling it.

Amazon's privacy policy does state that Alexa requests, or at least those uttered after the wake phrase, may be used to train its digital brain to become even better. Most people simply assume that Amazon feeds those recordings into computers that work their machine learning magic. Nowhere does the policy state that Amazon employs thousands of humans to listen to some of those recordings and to analyze and annotate them to help Alexa improve, which is exactly what Bloomberg reports.

To be fair, it isn't just Amazon doing this. Both Apple and Google employ humans to manually clarify cases where Siri and Google Assistant, respectively, may trip up. All these companies sing the same tune when asked about this review process: recordings are selected randomly, encrypted, and stripped of any personally identifiable information. They aren't clear, however, about what happens when the audio clip itself contains some of that information.

There are other complications beyond the potentially embarrassing realization that your secret monologue may be heard by someone somewhere in the world. Those reviewers may find themselves listening to disturbing recordings, perhaps even ones that capture illegal activity. This could put them in the precarious and sometimes traumatizing position of knowing a terrible secret they can do nothing about.

Update: Amazon has provided us with the following statement:

"We take the security and privacy of our customers' personal information seriously. We only annotate an extremely small number of interactions from a random set of customers in order [to] improve the customer experience. For example, this information helps us train our speech recognition and natural language understanding systems, so Alexa can better understand your requests, and ensure the service works well for everyone. We have strict technical and operational safeguards, and have a zero tolerance policy for the abuse of our system. Employees do not have direct access to information that can identify the person or account as part of this workflow. While all information is treated with high confidentiality and we use multi-factor authentication to restrict access, service encryption, and audits of our control environment to protect it, customers can always delete their utterances at any time," said an Amazon spokesperson.