Adobe, Cornell AI Transfers One Photo's Style To Another

There is no doubt that artificial intelligence, machine learning, and neural networks have made huge strides, but most of their applications have involved tasks with "hard edges", such as search results, translation, and board games. Recently, however, progress has also been made in computer vision, imaging, and graphics, in applications that are usually considered more "subjective", like transferring one photo's style onto another photo. Researchers from Adobe and Cornell University have developed a deep-learning neural network that does exactly that, and the results are very convincing.

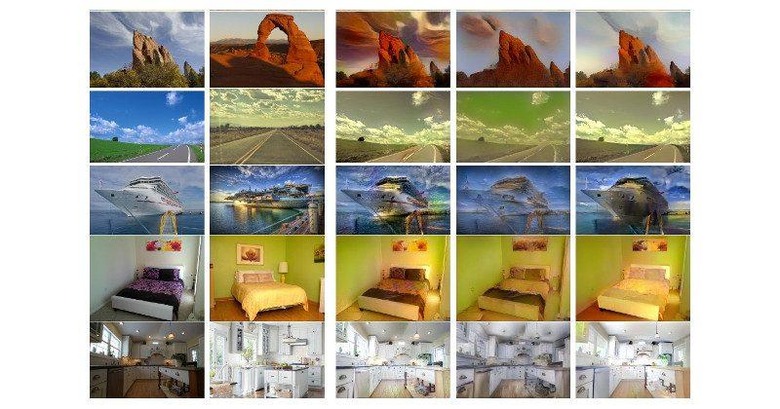

"Style transfer" may not be a familiar term, even for the tech savvy, but heavy users of social networking and photo apps, like Prisma, are already using it without even knowing it. It is a technique that uses a smidgen of AI to transfer the style of one image onto another. Current implementations of style transfer, however, have one critical limitation: they produce a "painterly" image that turns a real-world photo into a stylized representation. In other words, the output isn't going to fool anyone.

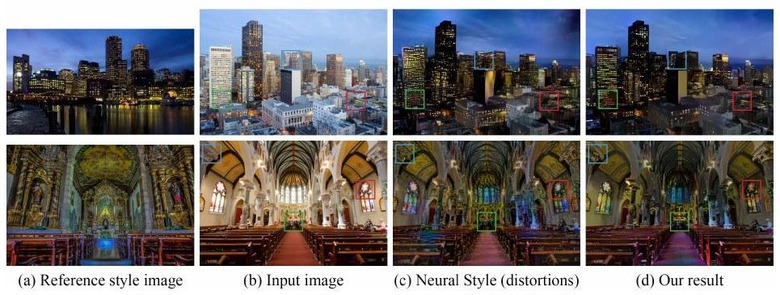

Adobe and Cornell University's AI, however, might. It applies principles of deep learning, hence the name "Deep Photo Style Transfer", to produce a more photorealistic "merged" image. It can take, for example, a photo of a night-time skyline, copy elements like colors and brightness, and apply them to a completely different image of a skyline, perhaps one taken in the morning. The final result is no paint job but an image that could pass for an actual photograph.
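To make the idea of "copying colors and brightness" from one photo to another concrete, here is a minimal sketch of a much simpler, classical trick: shifting each color channel of one image so that its average and spread match those of another. This is not the researchers' deep-learning method, which is far more sophisticated; the function names and the tiny hand-written "images" (plain lists of RGB pixels) are hypothetical and exist only for illustration.

```python
from statistics import mean, pstdev


def channel_stats(pixels, channel):
    """Return the mean and population standard deviation of one RGB channel."""
    values = [pixel[channel] for pixel in pixels]
    return mean(values), pstdev(values)


def transfer_color_stats(content, style):
    """Shift each RGB channel of `content` so its mean and spread match `style`.

    Both arguments are lists of (r, g, b) tuples with values in 0..255.
    This is global statistics matching, NOT Deep Photo Style Transfer.
    """
    result = [list(pixel) for pixel in content]
    for channel in range(3):
        c_mean, c_std = channel_stats(content, channel)
        s_mean, s_std = channel_stats(style, channel)
        # A flat channel has no spread to rescale, so only shift its mean.
        scale = s_std / c_std if c_std > 0 else 1.0
        for pixel in result:
            value = (pixel[channel] - c_mean) * scale + s_mean
            # Clamp to the valid 8-bit range after rounding.
            pixel[channel] = max(0, min(255, round(value)))
    return [tuple(pixel) for pixel in result]


# Hypothetical three-pixel "photos": a warm morning scene and a dark night scene.
morning = [(200, 180, 120), (220, 200, 140), (240, 220, 160)]
night = [(10, 20, 60), (20, 30, 80), (30, 40, 100)]

print(transfer_color_stats(morning, night))
```

A global adjustment like this changes the overall mood of a picture but knows nothing about what is in it, which is one reason a learned approach that accounts for image content is needed to get convincing results.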

Deep Photo Style Transfer is hardly the first attempt at using neural networks to implement such a feature, but it is so far the most visibly successful one. Plain neural networks produce photos with very obvious distortions, as if portions of the source image had simply been cut out and pasted onto the target image. Deep Photo Style Transfer still has limits, though. For example, the AI seems to work best on photos with buildings, and the source and target photos should share common elements. Even so, it is a massive step up from the painterly style that the likes of Prisma produce.

This is just one of the latest examples of machine learning, specifically deep learning, being employed in areas that touch on aesthetics. Last month, Google Brain revealed a technique that almost makes the "zoom in, enhance" meme possible. Unlike that technique, however, Deep Photo Style Transfer has the potential for commercial applications.

SOURCE: Cornell University