Brain-Reading Prosthetics That Are Total Game Changers

One of the most difficult parts of being a human is effectively getting our thoughts out of our heads and into the world. Everything from visual art to simple conversation suffers from the disparity between the idealized thing which resides in our minds and its imperfect reality. Somehow, things seem to get lost in translation somewhere between conception and execution.

Perhaps that's why we dream up more direct lines of communication. From mind-reading aliens to mind-controlled technology, our science fiction has for decades dreamt of bypassing our bodies to put our minds fully in the driver's seat. Science hasn't cracked the code on telepathy, and it probably never will, but when coupled with technology, it offers the next best thing.

For over 50 years, scientists and engineers have been working toward inventing cutting-edge computer-brain interfaces (CBI), allowing our minds to directly control our technology (via NCBI). While the work certainly isn't done, the field has produced an array of impressive devices including high-tech toys and medical marvels. Some of these mind-reading devices are available commercially while others only exist in labs or clinical studies, but each of them is helping to push the world toward our science fiction fantasies.

Mind-controlled VR game

Virtual Reality allows you to step inside of your games, at least visually. Head tracking, usually through some lights on your headset and a camera, tells the game where you're looking and adjusts the visual field accordingly. It's effective enough that, even if the graphics aren't particularly impressive, you can trick yourself into thinking you're really someplace else. Still, interacting with game elements requires the use of peripheral controllers or even classic gamepads, and they're often clunky. If we really want to feel like we're living in an alternate world, we'd need to be able to control our character's actions with our minds.

That's where Neurable, a startup company out of Boston, comes in. They've developed a game called "Awakening" that achieves the ultimate dream of immersive virtual reality. The game not only utilizes mind control in order for you to play, but it leans into that aspect for its in-universe story. You play as a telekinetic child trying to break out of a laboratory by controlling a bunch of objects with your mind (via MIT Technology Review).

Players use an HTC Vive VR headset, modified with an array of dry electrodes mounted at various positions around the skull. Those electrodes take in brain wave information and translate it into actions inside the game. For most users, the game works after only a few minutes of calibration to make sure the hardware can accurately read their brain activity.

Emotiv EPOC lets you type with your mind

The EPOC headset, and the other devices from Emotiv, work on a similar principle to the above modified VR headset. They use a collection of non-invasive electrodes deployed at various points around your skull to collect and read brain data and translate it into action on your computer or other devices (via Wired).

The EPOC has 14 sensors, each of which is saline-moisturized to improve connectivity. Once attached to your head, they look for brainwave activity associated with specific facial expressions and emotions, as well as your thoughts. A suite of software allows you to train the device and your brain to work together to manipulate objects on the screen. You can then take those actions into game worlds and control your avatar with your mind. However, gaming isn't the only useful capability of Emotiv's devices. You can also train the device to use your brain waves to control on-screen keyboards, cursors, and real-world devices like motorized wheelchairs or robotic arms (via Emotiv). That information is then delivered from the headset to the technology of your choice by way of a Bluetooth dongle.

The devices from Emotiv straddle the line between entertainment and assistive technology. They also offer enhanced versions for research purposes, which is appropriate as that's where the vast majority of CBI innovation is happening.

Monkeys eat with a CBI

Playing games with your mind, either using a VR headset or your home computer, is all well and good, but computer-brain interfaces have real potential to help people with limited or lost mobility. Controlling limb prosthetics or other medical devices with only our thoughts could provide increased functionality to persons who want and need it.

One of the first successful experiments to showcase this possibility was carried out by the University of Pittsburgh School of Medicine and published in Nature. Scientists wired up test monkeys with an electrode array inserted into the motor cortex. The monkeys were then trained to feed themselves with a robotic arm controlled by their minds (via Medical Xpress).

In initial experiments, the monkeys were trained to move the robotic arm using a joystick. Only later was the control mechanism switched over to their brains. While the monkeys did still have full use of their physical limbs, scientists immobilized them by placing them inside rigid tubes.

When presented with food items like fruit or marshmallows, the monkeys successfully moved the robotic arm toward the reward using only brain activity picked up by the electrode array. What's more, the position of the food items was changed between each attempt and the monkeys were able to modify their movements to successfully gain the reward. Similar technologies would later be used in human patients.

Monkeys type with a CBI

Moving a robotic arm to food and then to your mouth is one thing, but typing complex sentences is something else entirely, especially if you're a monkey.

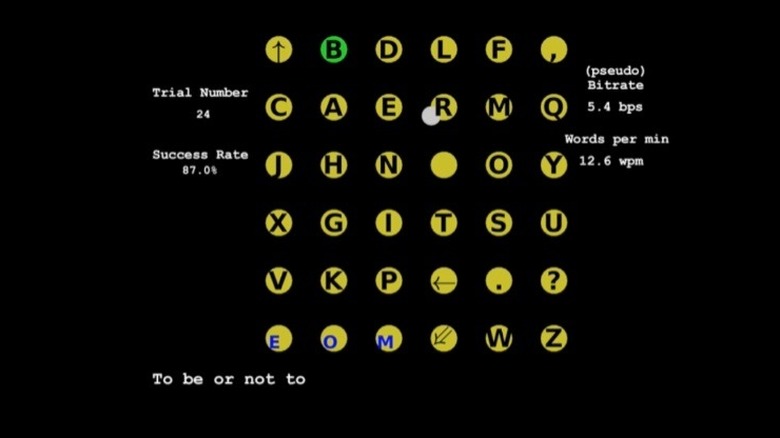

As explained by IEEE Spectrum, scientists used a brain-computer interface to successfully train monkeys to type out the first few words of Hamlet's famous To Be or Not to Be speech. Of course, the monkeys didn't know what they were typing, only that they should click a particular circle.

To make the system work, electrodes were implanted in the motor cortex and scientists watched the monkeys as they moved their physical arms. Then, using machine-learning algorithms, they analyzed and recorded patterns from their brain waves. Once calibrated, the interface could read the monkeys' intentions and translate them to actions on the computer. The monkeys then moved a cursor toward illuminated letters on a screen.
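The calibrate-then-decode loop described above can be illustrated with a toy example. This is only a sketch under loud assumptions: the firing-rate numbers are made up, the function names `fit_centroids` and `decode` are hypothetical, and a nearest-centroid classifier stands in for whatever machine-learning method the researchers actually used.

```python
from math import dist
from statistics import fmean


def fit_centroids(trials):
    """Calibration step: average the recorded feature vectors per intended direction.

    trials is a list of (features, label) pairs, where features is a list of
    per-electrode activity values (invented numbers in this sketch).
    """
    grouped = {}
    for features, label in trials:
        grouped.setdefault(label, []).append(features)
    return {
        label: [fmean(column) for column in zip(*rows)]
        for label, rows in grouped.items()
    }


def decode(centroids, features):
    """Decoding step: pick the direction whose average pattern is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical calibration data: activity on three electrodes while the
# arm physically moved left or right.
calibration = [
    ([9.0, 1.0, 2.0], "left"),
    ([8.0, 2.0, 1.0], "left"),
    ([1.0, 9.0, 2.0], "right"),
    ([2.0, 8.0, 3.0], "right"),
]
centroids = fit_centroids(calibration)

# A new reading taken without any arm movement is mapped to an intention.
decoded = decode(centroids, [7.5, 1.5, 2.0])
print(decoded)
```

Real decoders work with far more electrodes and continuous cursor velocities rather than two labels, but the two phases are the same: learn the patterns first, then read intentions from new activity.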

Impressively, one monkey was able to achieve a typing speed of twelve words per minute by clicking one letter at a time, but scientists think they could speed that up in people by using predictive text, thus avoiding the need to click each individual character.

According to the paper, published in Proceedings of the IEEE, this opens up CBIs as a potential communication interface in human patients.

CBIs take the guesswork out of dating

Whether we realize it or not, we're all accustomed to interacting with predictive algorithms. If you've ever shopped on Amazon, you've likely noticed that each time you look at an item, the site offers additional items you might be interested in. One way it decides on those suggestions is by cataloging what previous shoppers bought and making educated assumptions about what you're likely to be interested in.

Those suggestions, however, have an implicit bias baked in. They are based only on what people actually buy or share online, which might only be a fraction of what they're actually interested in. Certainly, some portion of our preferences get filtered out between thought and mouse click and it's that portion that scientists are interested in.

To that end, researchers from the Universities of Copenhagen and Helsinki set about using a CBI to capture an individual's real-time reactions to stimuli by measuring their brain waves (via the University of Copenhagen).

Coupled with an algorithm, their interface took readings from a participant's mind while they looked at human faces on a screen. Using those readings and comparing them to the readings of other people, they were able to predict how a person would react to a face they haven't seen yet. In essence, the algorithm knew if you would be attracted to a stranger before you ever set eyes on them.
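To make the idea of "comparing readings to those of other people" concrete, here is a rough sketch of one way such a prediction could work. It uses similarity-weighted averaging, a standard collaborative-filtering technique, which is an assumption on our part and not necessarily the researchers' method. The response scores, face names, and the `predict` function are all invented for illustration.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two equal-length lists of scores."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def predict(target, others, face):
    """Estimate the target's response to an unseen face.

    target and each entry of others map face names to a signed response
    score (positive means an attraction-like brain response). Only people
    whose responses resemble the target's on shared faces contribute.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for other in others:
        if face not in other:
            continue
        shared = [f for f in target if f in other]
        if not shared:
            continue
        similarity = cosine(
            [target[f] for f in shared], [other[f] for f in shared]
        )
        if similarity <= 0:
            continue  # ignore people with dissimilar or opposite tastes
        weighted_sum += similarity * other[face]
        weight_total += similarity
    return weighted_sum / weight_total if weight_total else None


# Hypothetical response scores derived from brain readings.
target = {"face_a": 1.0, "face_b": -1.0}
others = [
    {"face_a": 1.0, "face_b": -1.0, "face_c": 0.8},   # similar taste
    {"face_a": -1.0, "face_b": 1.0, "face_c": -0.6},  # opposite taste
]
prediction = predict(target, others, "face_c")
print(prediction)
```

In this toy case only the first person resembles the target, so the prediction for the unseen face follows that person's response.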

ALS patient talks to son with his mind

Amyotrophic lateral sclerosis (ALS), commonly known as Lou Gehrig's Disease, causes the loss of muscle control. While the loss of muscle activity renders a person immobile, it also robs them of the ability to communicate. Famed scientist Stephen Hawking retained control of some small muscles in the face, which allowed him the use of a specialized computer, but for most patients even that is lost.

Scientists at the University of Tübingen have been working on ways for those individuals to maintain communication with the world even in the late stages of the disease and, recently, they succeeded. An ALS patient who is totally locked in manipulated a computer system using brain signals instead of muscle movement.

According to Science, implanted electrodes looked for brain signals associated with eye movement and used them to manipulate a computer. At first, the patient could only answer yes or no questions by using his mind to hold a tone at either high or low pitch. A few weeks later, he could spell out sentences.

The process was slow, only about a character per minute, but he was able to make simple requests, such as what food he wanted or what music he wanted to listen to, and he even sent a message saying, "I love my cool son."

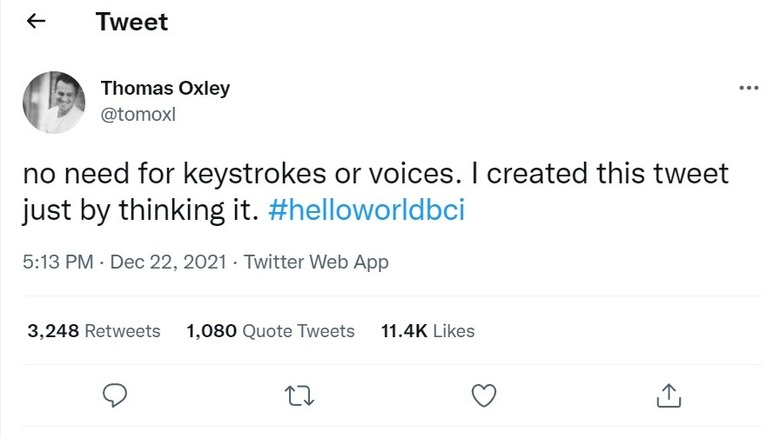

The first tweet using only thoughts

As CBIs become more advanced, it's likely we'll see increased functionality and utility out of them. Communicating with loved ones is certainly a great first step and an important part of restoring quality of life, but sometimes a person just wants to play games or scroll through social media. For at least one ALS patient, that has become a reality.

Stentrode, a computer-brain interface developed by Synchron, was implanted into the brain of a patient named Philip O'Keefe (via Tech Times). The device, which is only about the size of a paperclip, avoids invasive brain surgery by being threaded through the jugular vein.

According to O'Keefe, learning to use the implant took some time but eventually got easier. He likened the experience to learning to ride a bike, saying that it took some getting used to and then became natural. Since being outfitted with the Stentrode, O'Keefe has been able to communicate with his family and play games like Solitaire on a computer. The news which really made headlines, however, came in December of 2021 when O'Keefe became the first person in the world to send a tweet with only his mind.

The short tweet was posted to the account of Thomas Oxley, CEO of Synchron, and simply reads "no need for keystrokes or voices. I created this tweet just by thinking it. #helloworldbci"

Brain implant fights depression

A team of scientists from the University of California developed a device for the treatment of Major Depressive Disorder. Instead of using the device to control something external, the patient, who was unnamed, allowed the device to do the controlling.

As explained in the paper, published in the journal Nature Medicine, the individual has lived with treatment-resistant Major Depression since childhood. Scientists hoped that deep brain stimulation, via an array of electrodes, might help to mitigate the worst of the individual's symptoms. In order to find out, the team implanted 10 electrodes into various regions of the brain and monitored them over the course of several days. This period of observation revealed biomarkers in the brain which presented at the onset of symptoms. They were, in essence, a warning bell.

Once the biomarker was identified, the temporary electrodes were replaced with a more permanent monitoring and stimulation device which delivers six seconds of stimulation to the brain. That stimulation modulates the brain signals and dampens symptoms. By the time the paper was published, symptoms had decreased enough to be considered in remission. Importantly, the observation process allowed the device to be customized to the individual patient. This sort of individualized treatment has the potential to be more effective than pharmaceuticals, which are intended for the widest possible audience.
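The "warning bell" logic can be sketched in a few lines. This is a simplified illustration, not the implant's actual firmware: the one-reading-per-second sampling, the threshold value, the biomarker numbers, and the function name `schedule_stimulation` are all assumptions. Only the six-second stimulation length comes from the description above.

```python
STIM_SECONDS = 6  # stimulation length mentioned in the article


def schedule_stimulation(biomarker, threshold, stim_seconds=STIM_SECONDS):
    """Return (start, end) stimulation windows, in seconds.

    biomarker holds one reading per second (an assumption of this sketch).
    A new burst starts when a reading exceeds the threshold and no burst
    is currently running.
    """
    windows = []
    busy_until = 0
    for second, value in enumerate(biomarker):
        if second >= busy_until and value > threshold:
            windows.append((second, second + stim_seconds))
            busy_until = second + stim_seconds
    return windows


# Hypothetical biomarker trace: two episodes rise above the threshold.
readings = [0.1, 0.2, 0.9, 0.95, 0.8, 0.3, 0.2, 0.1, 0.1, 0.7, 0.2]
windows = schedule_stimulation(readings, threshold=0.6)
print(windows)  # [(2, 8), (9, 15)]
```

The point of the closed loop is that stimulation is delivered only when the patient-specific signal appears, instead of running continuously.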

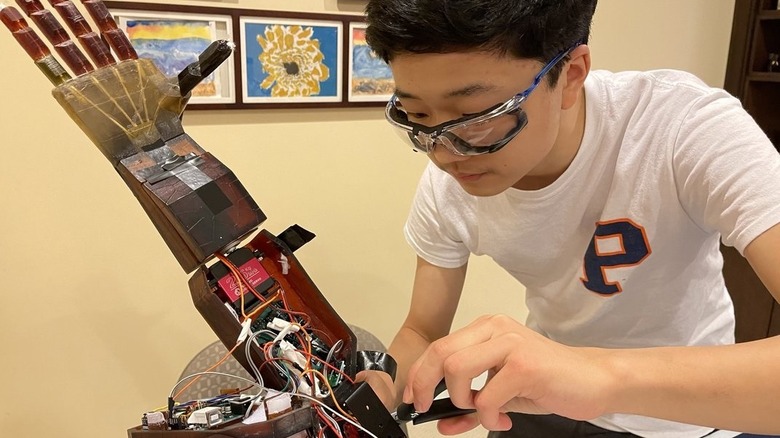

High school student builds mind-controlled prosthetic arm

When Benjamin Choi was in third grade, he saw a "60 Minutes" documentary about mind-controlled prosthetics which stuck with him for years. Seven years later, Choi was in tenth grade and stuck at home due to the Covid-19 pandemic. With his summer plans out the window, and the documentary still on his mind, Choi went to work building a mind-controlled robotic arm of his own (via Smithsonian Magazine).

He used his sister's at-home 3D printer, building the arm one piece at a time and stringing it together with bolts and rubber bands. The arm itself is pretty impressive, especially considering it was printed at home and constructed atop a basement ping pong table, but the real magic is in the algorithm Choi designed.

Using a set of noninvasive electrodes at the forehead and ear, Choi's device gathers brain signals and translates them into movement of the arm. What's more, the algorithm learns over time, meaning that a wearer should expect improved results the more they use the arm. At present, the arm exists as a detached piece of machinery on a table, but Choi intends to develop a socket so that it could be attached to a person. Despite the impressive amount of work he did by himself, he won't have to do the rest alone. Choi won funding from MIT and has the ear of scientists there, as well as at Stony Brook University, Microsoft, and elsewhere.

Man eats cake with his mind

Marie-Antoinette is famously reported to have said "let them eat cake" in response to the plight of her starving subjects. It's unclear if she actually ever said this, or if anyone ever did (via Britannica). It might represent a popular meme within folklore wherein nobles are ignorant of the realities of their subjects' lives. Regardless of the phrase's provenance, we can now reclaim it as a rallying cry for the technological age.

A team of scientists from Johns Hopkins recently developed a set of robotic arms which were controlled by the mind and allowed a partially paralyzed man to feed himself cake using a fork and knife. Where this differs from the above feeding CBI is in the bilateral control. The study participant was able to control two robotic arms at the same time, successfully completing the surprisingly complex task of using utensils to cut food and deliver it to the mouth.

According to the paper, which was published in Frontiers in Neurorobotics, this was achieved in part because of a shared control paradigm which allowed the robotic arms to maintain control over some of the movement while the user sent instructions via an array of electrodes implanted in the sensorimotor region of the brain.
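One simple way to picture shared control is to split the arm's movement axes between the robot and the user. The sketch below is only an illustration of that idea: the axis names, the numbers, and the `shared_command` function are hypothetical, and the paper's actual control scheme may divide the work differently.

```python
def shared_command(robot_plan, user_input, user_axes):
    """Merge an automated plan with decoded user input.

    robot_plan maps every axis to the value the robot would choose alone.
    On axes listed in user_axes the decoded user input wins (defaulting to
    0.0 when the user sends nothing); the robot keeps control of the rest.
    """
    return {
        axis: (user_input.get(axis, 0.0) if axis in user_axes else planned)
        for axis, planned in robot_plan.items()
    }


# Hypothetical step: the robot handles reach and grip, the user steers
# one sideways axis to choose where the fork lands.
robot_plan = {"x": 0.2, "y": 0.0, "z": -0.1, "grip": 1.0}
user_input = {"y": 0.5}
command = shared_command(robot_plan, user_input, user_axes={"y"})
print(command)  # {'x': 0.2, 'y': 0.5, 'z': -0.1, 'grip': 1.0}
```

Handing the routine parts of a motion to the robot means the user only has to send a few deliberate signals, which is part of what makes a task like cutting cake with two arms feasible.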

Scientists are continuing to improve the system and hope to incorporate sensory feedback, allowing a user to feel their prosthetic limbs.