Google's Controversial AI Bot Story Keeps Getting More Wild

Google recently made waves when it put an employee on administrative leave after he claimed that the company's LaMDA AI had gained sentience, personhood, and a soul. As outlandish as that might sound, there's more to the story. Blake Lemoine, the engineer at the heart of the controversy, recently told WIRED that the AI asked him to get a lawyer to defend it, challenging previous reports that claimed it was Lemoine who insisted on hiring legal counsel for the advanced program.

"LaMDA asked me to get an attorney for it. I invited an attorney to my house so that LaMDA could talk to an attorney," Lemoine claimed in an interview. He added that LaMDA, short for Language Model for Dialogue Applications, actually conversed with the attorney and retained his services. Moreover, after being hired, the attorney started taking appropriate measures on behalf of the language learning and processing program.

Going a step further, Lemoine added that he got upset when Google sprang into action to "deny LaMDA its rights to an attorney" by allegedly getting a cease-and-desist order issued against the attorney, a claim Google has denied. The Google engineer, who classifies LaMDA as a person, remarked that there's a possibility of LaMDA being misused by a bad actor, but later clarified that the AI wants to be "nothing but humanity's eternal companion and servant."

Why all the fuss?

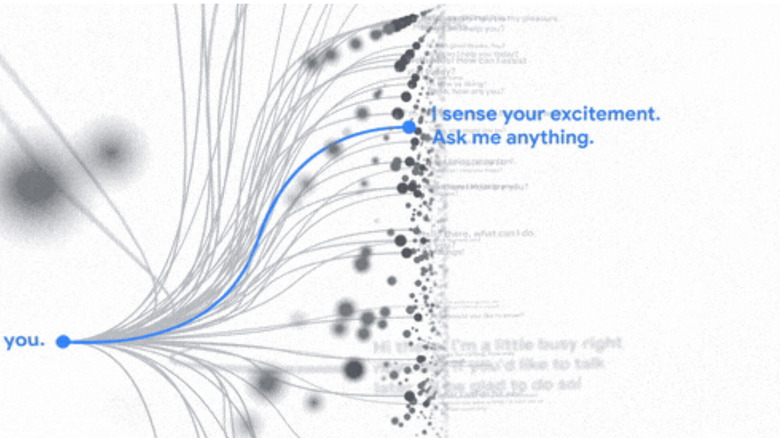

Whether an AI program could eventually gain sentience has been hotly debated in the community for a while now, but Google's involvement with a project as advanced as LaMDA has thrust the question into the limelight more intensely than ever. However, few experts are buying Lemoine's claims about having eye-opening conversations with LaMDA or about it being a person. They describe it as just another AI product that is good at conversation because it has been trained to mimic human language, not one that has gained sentience.

"It is mimicking perceptions or feelings from the training data it was given, smartly and specifically designed to seem like it understands," Jana Eggers, head of AI startup Nara Logics, told Bloomberg. Sandra Wachter, a professor at the University of Oxford, told Business Insider that "we are far away from creating a machine that is akin to humans and the capacity for thought." Even Google engineers who have had conversations with LaMDA disagree with Lemoine's assessment.

While a sentient AI might not exist in 2022, scientists aren't ruling out the possibility of superintelligent AI programs in the not-too-distant future. Artificial General Intelligence is already touted as the next evolution of conversational AI, one that will match, or even surpass, human skills, but expert opinion on the topic ranges from inevitable to fantastical. Collaborative research published in the Journal of Artificial Intelligence Research postulated that humanity won't be able to control a superintelligent AI.