Apple Just Made This Important Privacy Feature International

With iOS 15.2, Apple introduced Communication Safety in Messages, a feature designed to protect children using Apple devices from photos depicting nudity. So far, the feature has been limited to the United States. According to The Verge, it will soon expand to Australia, Canada, New Zealand, and the United Kingdom. Reports on the U.K. timing differ: The Guardian says the feature will arrive there "soon," while the Daily Mail claims it has already launched. It will roll out via a software update and requires at least iOS 15.2, iPadOS 15.2, or macOS Monterey 12.1.

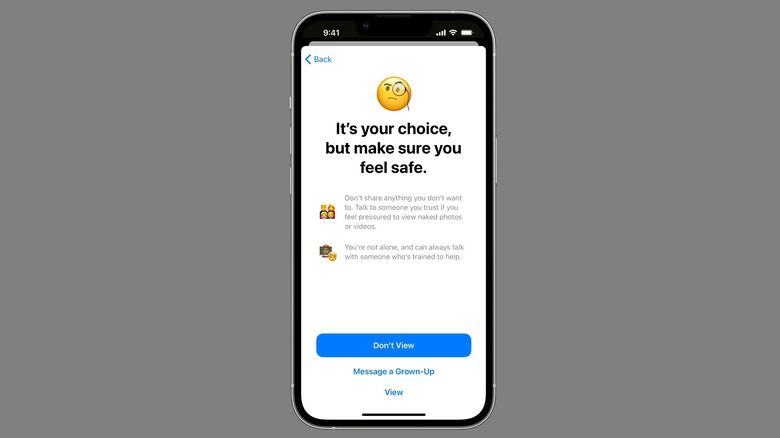

Communication Safety in Messages scans images received in the Messages app, and if it detects nudity, it automatically blurs the image preview. It then asks the user whether they really want to view the image and points them to resources for dealing with this kind of content. The same warning appears when the user tries to send an image that appears to contain nudity. The feature is not on by default: it has to be enabled manually in Screen Time settings, and the child's device must first be part of a Family Sharing group.

Same safety measures, no parental alerts

Available as a toggle called "Check for Sensitive Photos" in the Communication Safety section, the feature offers three options when a problematic image is sent or received. The first is "Message Someone," which lets the child reach out to a trusted adult for help.

The second option blocks the other person in the conversation with a single tap. The third, an "Other Ways to Get Help" button, leads to online safety resources that offer guidance for such sensitive situations. Siri, Spotlight, and Safari Search also surface additional resources when children search for topics related to child exploitation.

When Apple originally announced its plans last year, the system could also notify parents when their child sent or received images depicting nudity. Experts raised concerns that these alerts could out at-risk LGBTQ children. Apple subsequently dropped the alert functionality, but the rest of the system remains intact.

All image scanning happens locally on the device, and Apple cannot access the photos any user sends or receives. Apple had also planned a similar system for detecting CSAM (Child Sexual Abuse Material) in photos stored in iCloud, but after strong pushback over privacy concerns, those plans were delayed.