USB: What It Stands For And How It Revolutionized The Way We Use Computers

A computer, at its simplest, is a sophisticated input and output machine. But there is only so much it can do. It can't print a document on a piece of paper; there's a dedicated machine for that. A computer can, however, talk to a printer and send it the digital material that is then printed on paper. This information exchange between a computer and a printer requires a channel. A USB port provides that channel, connecting the two devices via a cable.

USB, short for Universal Serial Bus, is the port you will find on most of the devices around you. From boxy electric cars to those risk-prone public charging spots, this is the port standard you will come across on a daily basis. Modern computers are increasingly shifting to the USB Type-C connector. USB can transfer power to charge devices, link up with external storage, connect to audio gear, accept input from peripherals like a cool mechanical keyboard, and drive an external monitor, among other chores.

But it was not always this way. The history of USB goes back nearly three decades, and the standard has gone through multiple design iterations in that span. Here is the most surprising part: no personal fortune was made off this game-changing innovation, even though it was developed by a computing pioneer. We're talking about Intel here, and this is a history of the humble USB standard that changed computing forever.

Why was USB ever needed?

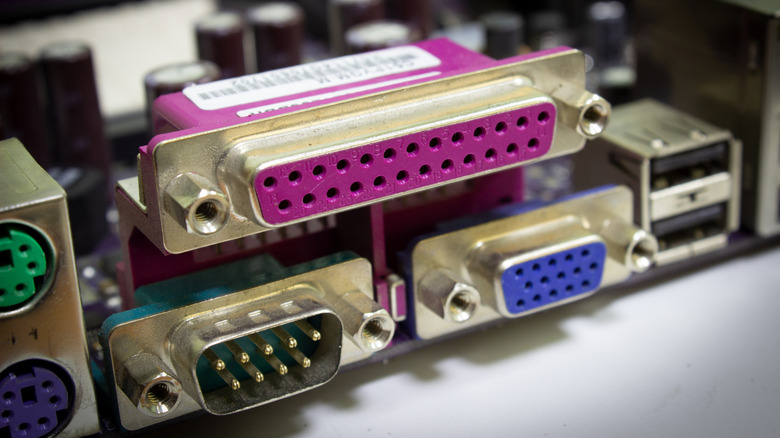

The biggest problem USB solved was the standardization of ports. To give you an idea of how cluttered and messy the situation was not too long ago, consider these connector names. Mice required a PS/2 connector or a serial port. The situation for keyboards was not much different. For fans of a certain fruit-themed brand, the Apple Desktop Bus (ADB) or a DIN connector did the job. Printers and other peripherals relied on bulky parallel ports. Custom game ports and dedicated SCSI connectors were also common.

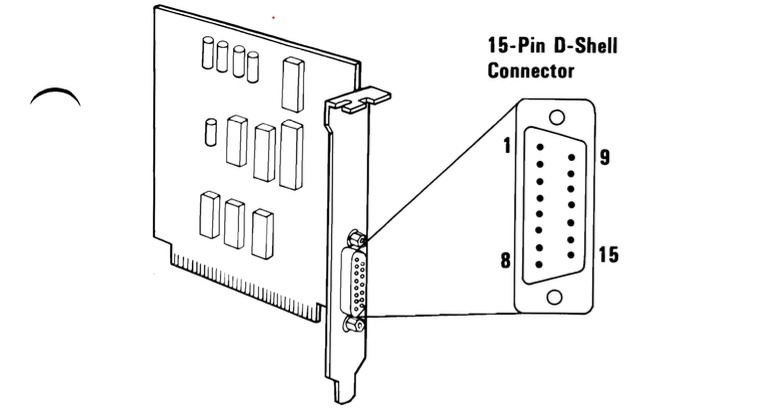

What you see in the picture above is the IBM Game Control Adapter, which came with a 15-pin D-shell connector. It supported a maximum of two joysticks or four paddles per system. And let's not forget the clutter of adapters and splitters of all kinds. Furthermore, troubleshooting and configuring these ports was another hassle. With the arrival of the USB standard, most of these ports eventually vanished, as USB data rates kept climbing and its power delivery limits kept rising.

A few interfaces tried to challenge the rise of USB but ultimately failed. Among them was the Apple-backed FireWire interface. It often outperformed USB 2.0 at the time and went through multiple iterations, but it was eventually superseded by what the world now knows as the Thunderbolt standard. Interestingly, Apple was one of the first major commercial brands to popularize USB, and it did the same for Thunderbolt in the modern age.

How USB came to be

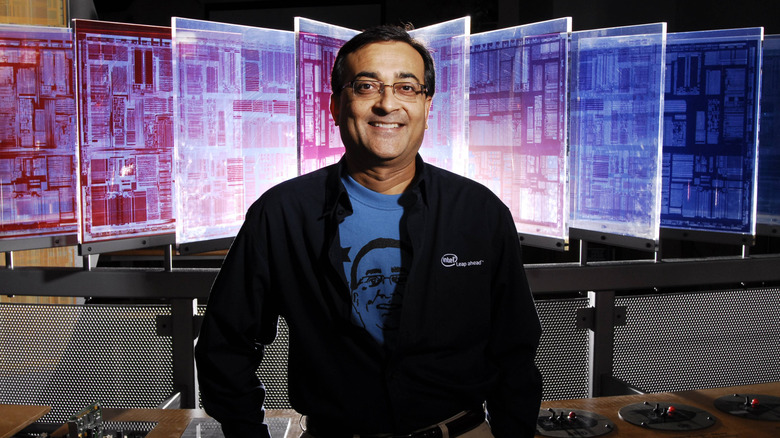

It is evident that the USB standard was developed to simplify things. In an interview with CNN, Ajay Bhatt, an Intel veteran and co-inventor of USB, remarked that the situation with ports and connectors was more complex than it needed to be. "All the technology at that point was developed for technologists by technologists," he told the outlet. Bhatt first pitched the idea of a plug-and-play approach to connectivity in the early 1990s, and in 1992, he met with fellow engineers from companies such as Compaq, IBM, and Microsoft, among others, at a conference. The group looked at all the formats available at the time and eventually settled on a slender connector with four wires.

The overarching goal was to create something user-friendly, power-ready, generous in transfer bandwidth, and as inexpensive as possible. The initial design had an A-connector that plugged into the computer and a B-connector that plugged into the peripheral. And since USB also supplied power, the hassle of connecting even small peripherals to a separate power outlet was solved in one go. As for the name, "bus" and "universal" were easy choices.

A bus carries people from point A to point B, just as a data bus carries information between devices, while "universal" signified the all-encompassing ambition of the revolutionary port standard. The sum of those ideas entered the commercial lexicon as USB, aka Universal Serial Bus. The first version, USB 1.0, was released in January 1996, offering a data transfer rate of up to 12 megabits per second.

The start of mass adoption

The preparations to push the idea into the mainstream began with the creation of the USB Implementers Forum (USB-IF), established in 1995 to speed up market adoption. But what the companies needed was some serious flexing of technical muscle. Intel really wanted to sell USB as a kind of computing redeemer, so it did what so many consumer tech brands do these days: it pulled a stunt at a tech show. At COMDEX 1998, Intel held a live event and set a record by connecting over 100 peripherals to a single computer.

Back then, all the peripherals came from brands that had joined the USB-IF consortium, which had over 500 signatories at the time. Intel, Microsoft, Philips, and Kodak were among those USB-ready partners. "The USB IF estimates the average user will connect six to eight peripherals at one time," the company said in a press release nearly three decades ago. It was a fantastic showcase, steeped in practical utility. The world of computing was ready, and brands were all aboard the USB bus.

It was a monumental year from both the software and hardware perspectives. Microsoft released Windows 98 that year, the company's first consumer operating system with full, built-in USB support. The same year, Apple put out the revolutionary iMac, which went all-in on the new connection format with a pair of USB ports based on the then-new v1.1 standard.

How USB made it beyond computing

A recurring theme in the early days of USB evolution was flashy marketing, but one with a practical edge. One such showcase involved a meeting with camera brands in Japan, led by Jim Pappas, who was a part of the USB development team at Intel. Here's an anecdote from Intel's own retelling (PDF), showcasing the sheer impact of brilliant ideas:

"One trip took them to Japan, to persuade the leading camera manufacturers to adopt the new interface. At the time, digital photography was one of the most important potential applications for PCs, along with digital music. Pappas brought with him a digital camera that had a serial port for connecting to a PC, and a PowerPoint slide that compared the amount of time it would take to upload photos using the serial port (about two hours) versus USB (about 12 minutes). The slide also noted that traditional film could be processed at a one-hour facility in half the time it took to upload from a digital version from the serial port. 'It was a pretty high-impact slide,' says Pappas, and a year or so later, all of the Japanese manufacturers began rolling out new USB-enabled cameras."

The biggest impact, however, came from releasing the USB specifications and the adopters agreement openly, as a free download worldwide. More specifically, USB is offered under a RAND-Z (reasonable and non-discriminatory, zero royalty) licensing obligation. The consortium also standardized device classes for software drivers, so that one generic driver could serve, say, every USB keyboard, paving the way for a plug-and-play future.

The road to a power-ready future

In the computing world, Full Speed USB (version 1.1) made a solid impact with its 12 megabit per second transfer rate. But then came the idea of using USB for storage devices. In the words of Bob Dunstan, an architect at Intel, "painfully slow would be an understatement" to describe relying on USB 1.1 for such data transfers. To fill that gap, USB 2.0 (Hi-Speed) was released in 2000, delivering a massive 40x jump to 480 Mbps. Charging was added to the list of USB priorities soon after.
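To make that 40x figure concrete, here is a small Python sketch that turns the two nominal signaling rates into best-case transfer times. It assumes decimal megabytes (1 MB = 8 megabits), ignores protocol overhead (real-world USB throughput is noticeably lower than the signaling rate), and uses a hypothetical 700 MB file purely for illustration.

```python
# Nominal signaling rates for USB 1.1 Full Speed and USB 2.0 Hi-Speed, in megabits per second.
# These are best-case figures; actual throughput is lower due to protocol overhead.
NOMINAL_RATES_MBPS = {
    "USB 1.1 (Full Speed)": 12,
    "USB 2.0 (Hi-Speed)": 480,
}


def transfer_seconds(file_size_mb: float, rate_mbps: float) -> float:
    """Best-case time to move file_size_mb (decimal) megabytes at rate_mbps megabits per second."""
    megabits = file_size_mb * 8  # 1 byte = 8 bits, so megabytes -> megabits
    return megabits / rate_mbps


if __name__ == "__main__":
    speedup = NOMINAL_RATES_MBPS["USB 2.0 (Hi-Speed)"] / NOMINAL_RATES_MBPS["USB 1.1 (Full Speed)"]
    print(f"Nominal speedup: {speedup:.0f}x")
    # A hypothetical 700 MB file, chosen only to illustrate the gap.
    for name, rate in NOMINAL_RATES_MBPS.items():
        print(f"{name}: {transfer_seconds(700, rate):.1f} s for a 700 MB file")
```

Run as a script, it reports a 40x speedup, with the hypothetical file taking about 466.7 seconds at Full Speed versus about 11.7 seconds at Hi-Speed, in theory.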

In 2007, the USB Battery Charging Specification was released, built atop the v2.0 standard. This marked the beginning of a unified standard on phones for charging as well as data transfer, and the slow beginning of the end for proprietary chargers. South Korea developed the first mobile charging standard in 2001, and in 2007, China standardized USB for mobile charging. The GSM Association gave its backing two years later, and that same year the European Commission brokered a Memorandum of Understanding (MoU) that pushed smartphone makers to adopt the Micro-USB connector, with the first compliant phones arriving in 2011.

Meanwhile, the USB Power Delivery (PD) specification was released in 2012, raising the power a USB connection could carry to as much as 100 watts, enough to run notebooks and even monitors. A year later, the now-mainstream USB-C connector was announced alongside the USB 3.1 specification, and the first products with USB-C ports landed on the market in 2015. Our laptop is currently connected to a USB-C Power Delivery cable on one side and to a massive monitor on the other. Looking at pictures of computers from the early 2000s, we'd say we now live in computing bliss, thanks to the birth of USB-C.