3 New iOS 18 Features Every iPhone User Should Know About

Apple announced its artificial intelligence (AI) ambitions at its annual developers' conference in June 2024, but users had to wait a long time because of Apple's cautiously staggered approach to rolling out anything AI-related. After a lengthy beta testing phase, the first wave of Apple Intelligence features came with the iOS 18.1 update, first for developers, and then for public beta testers.

Soon after, Apple released the iOS 18.2 beta update for developers — and that software build is what Apple Intelligence fans had been waiting for. It introduces three of the most interesting AI features that Apple showcased at the conference.

The first one is a supercharged Siri courtesy of direct ChatGPT integration, making it capable of answering queries that would otherwise require an internet search. Next, we have Visual Intelligence, which is essentially Apple's own take on Google Lens, riding atop OpenAI's multi-modal AI smarts. Finally, the update also introduces Image Playground, a standalone app for creating fun images. Do keep in mind that all the features described below are still in the beta testing phase, and according to Bloomberg, will only become fully available in December 2024.

ChatGPT integration

Apple hopes to inject new life into the Siri experience with iOS 18. To that end, the company has upgraded the underlying language understanding, updated the virtual assistant's local knowledge bank, and even overhauled the visual interaction interface. However, Siri is still a virtual assistant at heart, and that means it still can't accomplish what chatbots like Gemini or ChatGPT can pull off. At least not on its own.

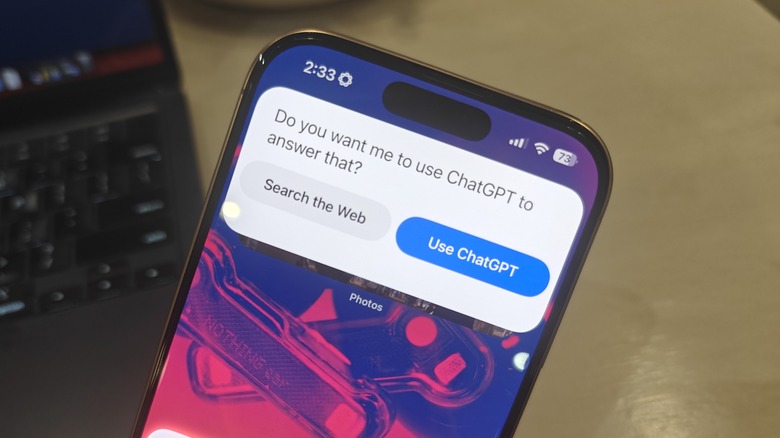

That's where Apple's partnership with OpenAI comes into the picture. The result of that collaboration is integration with ChatGPT, and an opt-in system that seamlessly offloads queries from Siri to ChatGPT if the former can't answer them. "Apple users are asked before any questions are sent to ChatGPT, along with any documents or photos, and Siri then presents the answer directly," assures OpenAI.

Because ChatGPT pairs the knowledge baked into its training data with natural language and internet trawling capabilities, Siri can hand such queries over to OpenAI's chatbot without making users jump through any technical hoops.

The biggest draw here is that users don't have to jump between apps, or even have to launch any app, for that matter. All they have to do is invoke Siri via text or voice, and get the answer they seek neatly curated in a window at the top of the screen, in a conversational style.

Visual Intelligence

Visual Intelligence is essentially Apple's take on the concept of Google Lens, but with Siri in the lead, and some assistance from ChatGPT. To launch it, long-press the Camera Control button on the right edge of your iPhone, which opens the Visual Intelligence interface in portrait mode. Then point the camera, press the shutter button, and pose your query.

In its current form, Visual Intelligence handles queries in two formats. If the follow-up question is in text format, Siri handles it. If the query is out of Siri's reach, users are asked whether their question can be offloaded to ChatGPT. Once they agree, Siri processes the input and provides a response accordingly.

If the query is about analyzing the frame and then looking it up on the internet, the default option is Google Search. If you've used the Circle to Search functionality that has appeared on the recent crop of Google Pixel and Samsung Galaxy smartphones, Visual Intelligence aims to replicate that, more or less, but using the camera.

As of the iOS 18.2 beta update, the range of what Visual Intelligence can accomplish is pretty limited compared to what tools like Gemini are capable of. Moreover, the responses can be pretty haphazard, or downright gibberish. When I captured an image of an article and asked Visual Intelligence to summarize the text content, the AI instead presented me with a summarized version of my app notifications.

Image Playground

Image Playground is a standalone app that lets users create fun images using text descriptions. The app also borrows some inspiration from the iPhone's Camera app to apply stylistic effects to images, and uses the Emoji Kitchen formula to mix multiple effects in the same image. The app is still in the beta stage, so the results are not nearly as accurate as one would get from text-to-image tools like OpenAI's DALL-E or Google's Imagen engine.

Another crucial distinction is that Image Playground won't generate photorealistic images. When you first launch the app, you will see a pill-shaped text box at the bottom where you can describe what kind of image you want to create. Right above it is a carousel showing five add-on effects suggested as the best fit for the image generated from your text input.

As you swipe on it, you'll see a Themes dashboard where you can pick between presets such as Summer, Party, and Fantasy, to change the image accordingly. Then there are separate effect selections under the Costumes, Accessories, and Places categories, all of which work with a one-tap approach. In the bottom right corner is a "+" icon that lets you pick a custom image from your iPhone's Photos app and give it a fun twist. The focus here is more on creating playful images than on realism or lifelike accuracy.