The Easy Way To Run An AI Chatbot Locally On Your Laptop

People now use all kinds of AI-powered applications in their daily lives. Running a large language model (LLM) locally on your computer, instead of through a hosted web interface such as HuggingFace, has several benefits. For one, your prompts and the model's replies never leave your machine, which gives you more privacy. Most web services that offer LLMs remind users not to submit sensitive information, because conversations may be logged and reviewed by the provider's staff.

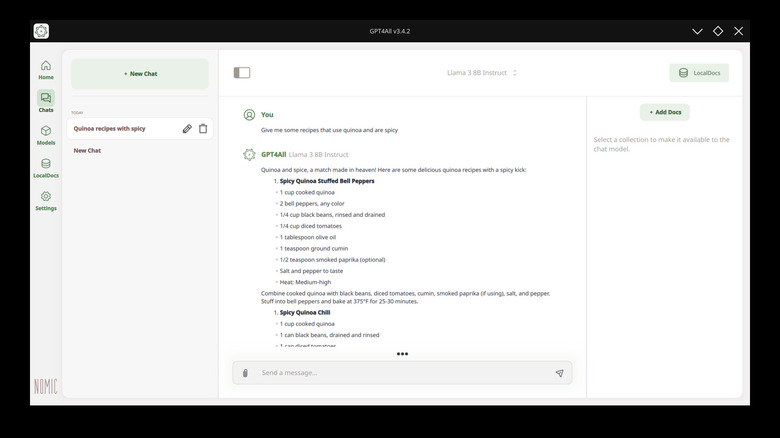

Some people are more protective of their data than others and would prefer to try these services in a more private setting where they aren't being monitored. Local LLM interfaces like GPT4ALL let you run a model without sending your prompts and replies through a company's servers, and they give you greater freedom when testing and customizing the model. Here's how to install GPT4ALL and a local LLM on any supported device.

What is GPT4ALL?

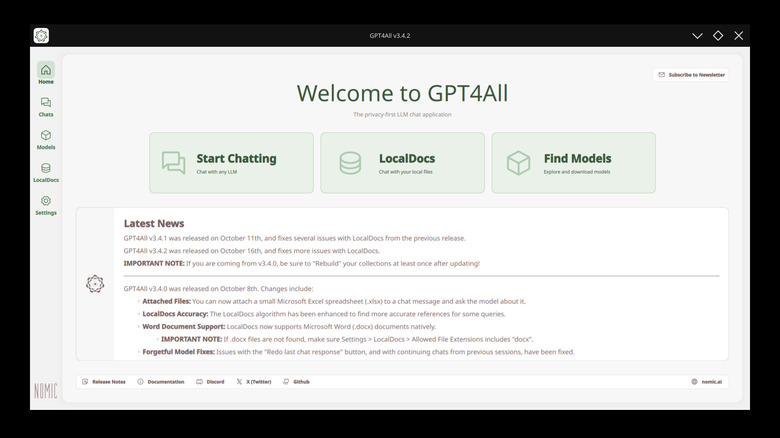

GPT4ALL is an open-source application developed by Nomic AI that lets you run your chosen LLM locally through its built-in chat interface. It works with many popular openly available model families, such as Llama and Mistral, and it can also connect to hosted models such as OpenAI's GPT models if you supply an API key. It even has a search feature that allows you to look for LLM implementations and document sets to install to GPT4ALL directly in the interface.

You can install, manage, and connect to various LLMs, including user libraries for different models, right from inside the GPT4ALL application. Additionally, GPT4ALL runs on your computer's own resources and, unlike some other local implementations, doesn't require a powerful GPU. This is because the models offered through GPT4ALL are quantized: their weights are stored at lower numeric precision, which shrinks the model files and reduces the memory and processing power needed to run them. The goal of GPT4ALL is to improve public access to artificial intelligence tools and language models.
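To get a feel for why quantization matters, a back-of-the-envelope estimate helps. The sketch below assumes that the weights dominate a model's size and ignores runtime overhead such as the context cache and quantization metadata. The function name and the 7-billion-parameter example are illustrative assumptions, not figures published by GPT4ALL.

```python
def approx_weight_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in gigabytes (1 GB = 1e9 bytes).

    Assumption: size is parameters * bits per weight; real files carry
    some extra metadata, so treat the result as a lower bound.
    """
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    n_params = 7e9  # hypothetical 7-billion-parameter model
    for bits in (16, 8, 4):
        size = approx_weight_size_gb(n_params, bits)
        print(f"{bits:>2}-bit weights: about {size:.1f} GB")
```

Under these assumptions, storing weights in 4 bits instead of 16 cuts the footprint to a quarter, which is often the difference between a model that fits in a laptop's RAM and one that doesn't.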

How to install GPT4ALL

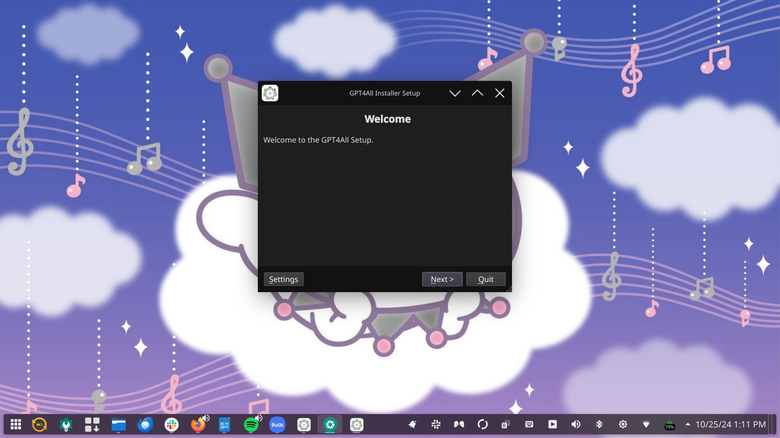

The graphical user interface (GUI) for GPT4ALL is officially supported for Windows, macOS, and Ubuntu. Nomic provides installation files compiled for the different operating systems. However, Linux users of non-Ubuntu distributions might need to complete additional steps to get the program running. To install the application on Windows or macOS, simply download and run the installer files. Then, follow the instructions. Once the application is installed, you can run it, and it will open the chat interface, which is designed to look like other LLM interfaces.

Linux users can use the Ubuntu installer if it is compatible with their distribution. Alternatively, Nomic publishes a Python package that lets you load and run models from your own scripts. Note that this installs the Python bindings rather than the desktop chat application. To install the package with pip, run the following command in the terminal:

pip install gpt4all
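Once the package is installed, a model can be loaded and queried from a short Python script. The following is a minimal sketch, not an official recipe: the helper name `ask_local_model` is made up for this example, and the model file name is only an assumed example that may not match what the current catalog offers, so substitute a file listed in the application. On first use the library downloads the model file if it isn't already on disk, which can take a while.

```python
def ask_local_model(prompt: str,
                    model_name: str = "orca-mini-3b-gguf2-q4_0.gguf",
                    max_tokens: int = 200) -> str:
    """Send one prompt to a locally run model and return its reply.

    The default model_name is an assumed example file name.
    """
    # Imported here so the script can be loaded even if the package is missing.
    from gpt4all import GPT4All

    # Loads the model file, downloading it first if it is not present locally.
    model = GPT4All(model_name)

    # chat_session() applies the model's chat template for this exchange.
    with model.chat_session():
        return model.generate(prompt, max_tokens=max_tokens)


if __name__ == "__main__":
    print(ask_local_model("Explain in one sentence what a quantized model is."))
```

Everything in this script runs on your own machine after the initial download, so the prompt and the reply stay local.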

How to install LLMs on GPT4ALL

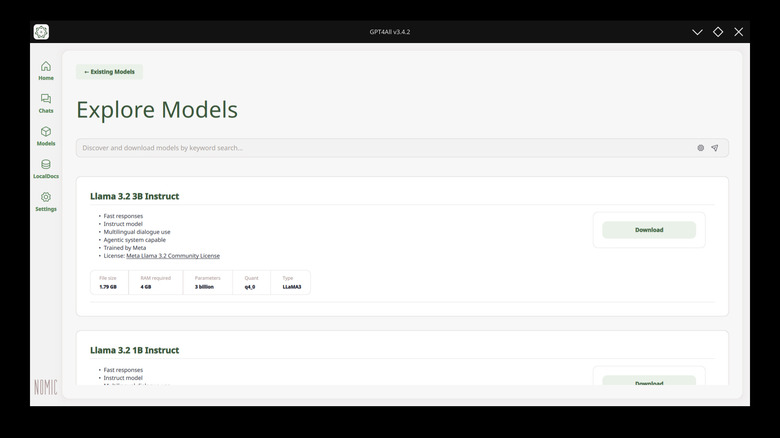

Installing LLMs on GPT4ALL is a snap. The application has a list of compatible LLMs that you can search through and install directly in the interface. The list even helps you sort by how much RAM you need to run each model, what the model is based on, the size of the model, and other parameters that might be determining factors for the user. All of this information is readily available in GPT4ALL's interface, allowing users to choose the models that best suit their specific needs.
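The same kind of filtering can be expressed in a few lines of code. The sketch below is only an illustration of the idea: the catalog entries, their field names (`name`, `ram_gb`, `file_gb`), and the numbers are all hypothetical and do not reflect GPT4ALL's actual model list or data format.

```python
from typing import Dict, List

# Hypothetical catalog entries; names and numbers are made up for illustration.
SAMPLE_CATALOG: List[Dict] = [
    {"name": "small-chat-3b", "ram_gb": 4, "file_gb": 1.8},
    {"name": "general-7b", "ram_gb": 8, "file_gb": 3.8},
    {"name": "large-13b", "ram_gb": 16, "file_gb": 7.3},
]


def models_that_fit(catalog: List[Dict], available_ram_gb: float) -> List[Dict]:
    """Return models whose RAM requirement fits, most demanding first."""
    fits = [m for m in catalog if m["ram_gb"] <= available_ram_gb]
    return sorted(fits, key=lambda m: m["ram_gb"], reverse=True)


if __name__ == "__main__":
    for model in models_that_fit(SAMPLE_CATALOG, available_ram_gb=8):
        print(f'{model["name"]}: needs {model["ram_gb"]} GB RAM, '
              f'{model["file_gb"]} GB download')
```

Sorting the most demanding model first reflects a common rule of thumb: pick the largest model your machine can comfortably hold, then step down if responses are too slow.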

Some of the models that can be used through GPT4ALL require API keys to access. That requirement isn't set by GPT4ALL's team. Rather, it comes from the company that designed and trained the model. Hosted models like GPT-4 and some of the official Mistral offerings require an API key whether you reach them through GPT4ALL or through a web interface. These models also don't run on your own hardware: GPT4ALL passes your prompts to the provider's servers, so the privacy benefits of a local model don't apply to them. So, unfortunately, using GPT4ALL isn't a way to get around paying for OpenAI keys if that's a service you want to use.