14 Supercomputers That Once Held The Title Of Most Powerful In The World

Computers now dominate our everyday lives. Everyone reading this will be doing so on some form of computer, whether it is a desktop PC, laptop, tablet, or even smartphone. From the first computers to modern-day machines, computing has revolutionized the way we communicate, share information, and keep ourselves entertained. While early computers were used for more basic functions, today's machines can do everything from playing video games to streaming all types of content over the internet.

One type of computer that most people rarely get the chance to interact with is the supercomputer. These are essentially larger and far more powerful machines, able to execute vastly more calculations than an everyday MacBook Pro. They play an essential role in modern science, allowing researchers to run complex simulations and solve problems that would otherwise be out of reach. Supercomputers drive forward our understanding of the world and help shape the future of tech.

SlashGear looked back at the supercomputers that have held the title of most powerful in the world. To do that, it drew on facts and figures from the Top 500, an internationally recognized list that ranks supercomputers based on how fast they can carry out specific benchmark tests.

Numerical Wind Tunnel

The Numerical Wind Tunnel is a Japanese-built supercomputer that was installed in 1993. It was a joint effort between Fujitsu and the National Aerospace Laboratory of Japan. After the two organizations developed a vector parallel architecture system together earlier in the 1990s, they teamed up again to use that system as the basis of a supercomputer. The result was the Numerical Wind Tunnel, which became the most powerful computer in the world in 1993.

At that point, the Numerical Wind Tunnel had 140 vector processors and, when tested with the Linpack benchmark, achieved a performance of 124.2 gigaflops. It was surpassed a short while later by the Intel XP/S 140 Paragon but soon took back the crown when an upgrade added 27 more vector processors. This raised its performance to 170 gigaflops, and it could sustain 100 gigaflops during long fluid dynamics calculations modeling airflow over aircraft. It was ultimately involved in the design of experimental craft such as HOPE-X and NEXST.

Intel XP/S 140 Paragon

The Intel XP/S 140 Paragon was one of several Paragon supercomputers that Intel developed in the early 1990s. This particular model took the number one spot on the Top 500 list in June 1994. It was purchased by and installed at Sandia National Laboratories, a research and development center for the National Nuclear Security Administration in the United States. The machine cost Sandia $9 million; by 1998 it had been deemed obsolete, and it was controversially sold to a third party.

Derived from a prototype system known as the Touchstone Delta, the XP/S 140 Paragon used a parallel design made up of 3,680 processors spread across more than 1,870 computing nodes, and it had a maximum performance of 143.4 gigaflops. The computer was used for a number of purposes while at Sandia, with funding provided by the Defense Advanced Research Projects Agency and the U.S. Air Force. These ranged from simulating plasma physics and 3D seismic imaging to modeling chemical catalysis and processing complex data.

[Featured image by Carlo Nardone via Wikimedia Commons | Cropped and scaled | CC BY-SA 2.0]

Hitachi SR2201 & CP-PACS

Hitachi established itself as a manufacturer of powerful supercomputers in the mid-to-late 1990s, with two machines that went on to take the top spot. The first of these was the SR2201, a distributed memory parallel system that was officially unveiled in March 1996. Installed at the University of Tokyo, it used 150 MHz HARP-1E processors based on the PA-RISC architecture, which Hitachi's first generation of these computers had also used. Thanks to its 3D crossbar network, it could reach a high peak performance, and it achieved 232.4 gigaflops on the Linpack test.

The CP-PACS followed. Essentially a specialized version of the SR2201, this parallel computer was designed for the Center for Computational Science at the University of Tsukuba in Japan and was intended to help tackle difficult computational physics problems. The original design was modified so that CP-PACS could meet those demands, including increasing the number of processors to 2,048. It took the number one spot on the Top 500 list in November 1996 after achieving 368.2 gigaflops on the Linpack benchmark.

ASCI Red & White

ASCI Red and ASCI White were two supercomputers created as part of the Accelerated Strategic Computing Initiative, a U.S. government program intended to develop computer systems capable of fully simulating nuclear weapons tests. ASCI Red came first and holds the distinction of being the first supercomputer to exceed 1 teraflop on the Linpack benchmark. The most powerful supercomputer in the world for some three years and remarkably reliable, it was a joint effort between Sandia National Laboratories and Intel, and it served the U.S. Department of Energy for almost a decade.

ASCI White surpassed ASCI Red when it was installed and made operational in November 2000. Located at the Lawrence Livermore National Laboratory, it was a huge machine that covered an area equivalent to two basketball courts, with 200 cabinets housing 512 nodes of 16 IBM processors each. Costing $110 million to build, it exceeded expectations: its 8,192 CPUs delivered 4.9 teraflops on the Linpack benchmark.

[Featured image by US Government: Lawrence Livermore National Laboratory via Wikimedia Commons | Cropped and scaled | Public Domain]

IBM Blue Gene/L & Blue Gene/Q

Among the many supercomputers that have been built over the years, Blue Gene might be one of the most recognizable names. IBM's Blue Gene project aimed to create the first supercomputers able to perform at the petaflop level. It was formally announced in 1999, with IBM confirming that the five-year project would study complex problems, including protein folding.

Blue Gene/L was the first of three supercomputer systems developed under the Blue Gene project and was initially capable of reaching 70.72 teraflops on the Linpack benchmark. Over the next few years, it was upgraded several times until it was able to reach up to 478.2 teraflops of performance.

IBM followed Blue Gene/L with Blue Gene/P and ultimately Blue Gene/Q. A Blue Gene/Q system known as Sequoia took back the crown as the world's fastest and most powerful supercomputer in 2012. Installed at the Lawrence Livermore National Laboratory for the Department of Energy, it achieved 16.32 petaflops thanks to its 1.6 million cores and 98,000 compute nodes.

[Featured image by Argonne National Laboratory via Wikimedia Commons | Cropped and scaled | CC BY-SA 2.0]

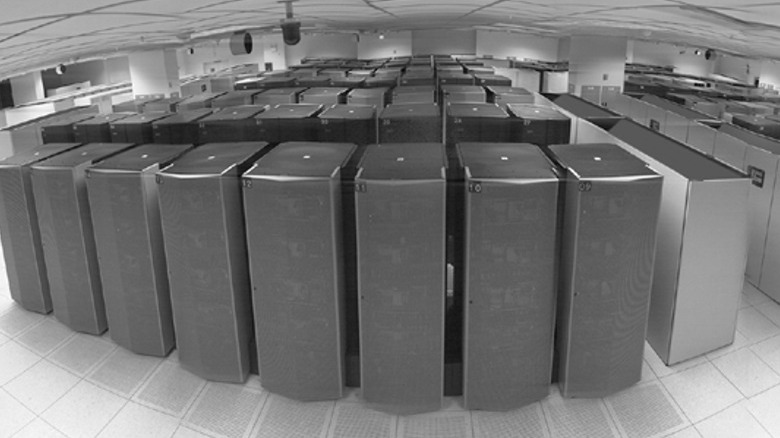

Cray Jaguar

The Jaguar supercomputer, sometimes known as OLCF-2, was a system developed by Cray Inc. in 2005. However, it didn't become the world's most powerful supercomputer until some time after it was first switched on. In fact, it took several upgrades before Jaguar was able to dethrone IBM's Roadrunner, reaching a Linpack performance of 1.759 petaflops, the equivalent of roughly 1.76 quadrillion calculations per second. When it claimed the number one spot, it was only the second supercomputer ever to reach performance in the petaflop range.
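Because "flops" already stands for floating-point operations per second, converting between units comes down to the standard SI prefixes (giga = 10^9, tera = 10^12, peta = 10^15). Here is a quick worked conversion of Jaguar's Linpack figure:

```latex
1.759\ \text{petaflops} = 1.759 \times 10^{15}\ \text{FLOP/s} = 1{,}759\ \text{teraflops} \approx 1.76\ \text{quadrillion operations per second}
```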

Installed at the Oak Ridge National Laboratory, which is sponsored by the United States Department of Energy, Jaguar was intended to assist studies in a range of energy and climate-related fields, including renewable energy and fusion power. The record-breaking version of Jaguar was effectively created by joining together two separate systems.

[Featured image by Daderot via Wikimedia Commons | Cropped and scaled | Public Domain]

NUDT Tianhe-1A

The Tianhe-1, created as part of a National University of Defense Technology project, is a Chinese supercomputer that first went active in 2009. At that time, it was not the most powerful supercomputer in the world. It reached only fifth place in the Top 500 rankings, although it was officially the fastest ever produced in China. Installed at the National Supercomputing Center in Tianjin, it was built to study aircraft design and aid petroleum exploration, as well as to support other fields such as climate research and even animation.

An upgraded version of the supercomputer was unveiled in 2010 under the name Tianhe-1A. This new model immediately took the top spot with a Linpack performance of 2.57 petaflops. Powering it were more than 20,000 Intel CPUs and NVIDIA GPUs, along with an interconnect twice as fast as the commonly used InfiniBand. Tianhe-1A remained the fastest computer for around seven months until it was overtaken by the K computer.

[Featured image by Nakrut via Wikimedia Commons | Cropped and scaled | CC BY-SA 4.0]

Fujitsu K computer

The K computer, developed by Fujitsu and the Riken Advanced Institute for Computational Science, was a supercomputer project that aimed to be the first capable of performing 10 quadrillion calculations every second (10 petaflops). When it was first activated in 2011, it jumped straight to the top of the rankings, although it initially fell short of that goal, achieving 8.16 petaflops in the Linpack benchmark test.

This version of the system was made up of 672 computer cabinets housing more than 68,000 CPUs, giving the K computer 548,352 cores in total. An upgrade later raised that figure to over 700,000 cores, allowing the supercomputer to reach 10.51 petaflops and finally pass its 10-petaflop goal.
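The core counts can be reconciled with a little arithmetic. This rests on a few assumptions not stated in this article: each of the K computer's SPARC64 VIIIfx processors has eight cores, the CPUs were spread evenly across the cabinets, and the upgraded system grew to 864 cabinets with the same layout.

```latex
\begin{aligned}
548{,}352\ \text{cores} \div 8\ \text{cores/CPU} &= 68{,}544\ \text{CPUs} \\
68{,}544\ \text{CPUs} \div 672\ \text{cabinets} &= 102\ \text{CPUs per cabinet} \\
864\ \text{cabinets} \times 102\ \text{CPUs/cabinet} \times 8\ \text{cores/CPU} &= 705{,}024\ \text{cores (after the upgrade)}
\end{aligned}
```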

The computer was so large and powerful that it consumed as much energy as 10,000 homes and cost up to $10 million a year to operate. However, it was put to good use helping to solve problems associated with healthcare, renewable energy, and climate change.

[Featured image by Toshihiro Matsui via Wikimedia Commons | Cropped and scaled | CC BY 2.0]

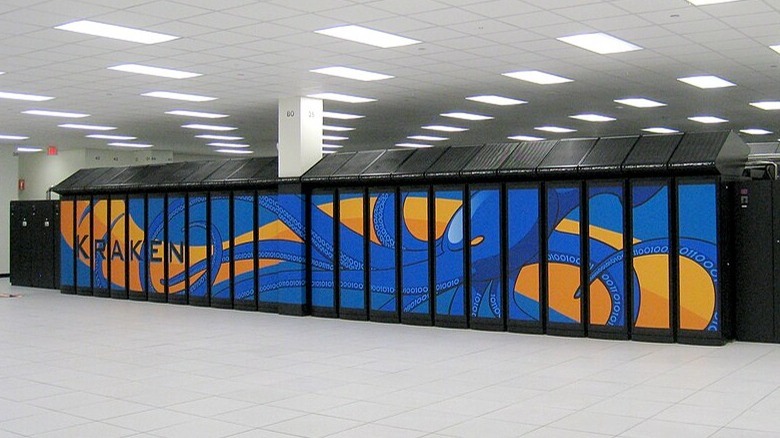

Cray Titan

After a period in which supercomputers from outside the U.S. held the number one spot, the balance shifted back in favor of the United States from 2012 onwards. IBM's Sequoia was briefly at the top before Cray Inc.'s Titan took the crown. Like its predecessor, the Jaguar supercomputer, Titan was installed at the Oak Ridge National Laboratory. The new system essentially took the basic structure of Jaguar and upgraded it, using a hybrid architecture that combined CPUs and GPUs.

A major advantage of accelerating CPUs with GPUs is better energy efficiency, which reduces electricity costs and helps shrink the carbon footprint of supercomputers. According to Oak Ridge National Laboratory, Titan was 10 times faster than Jaguar but used only slightly more energy. It paired AMD Opteron CPUs with NVIDIA Tesla GPUs, and the upgrade cost an estimated $60 million. On the Linpack benchmark, Titan achieved a performance of 17.6 petaflops.

[Featured image by OLCF at ORNL via Wikimedia Commons | Cropped and scaled | CC BY 2.0]

NUDT Tianhe-2

Having seen the Tianhe-1A overtaken by Japanese and U.S. supercomputers, China returned to the number one spot of the Top 500 ranking with the Tianhe-2. Developed once again by the National University of Defense Technology, this time together with Inspur, the Tianhe-2 went active in June 2013 and was judged the fastest in the world, with a performance of 33.86 petaflops on the Linpack benchmark.

Building the Tianhe-2 involved a team of more than 1,300 people and cost an estimated $390 million. It is made up of some 16,000 nodes and a then-record 3.12 million cores. According to news reports from the time, the team behind the Tianhe-2 was surprised to beat the other supercomputers on the Top 500 list, as they hadn't expected the system to be fully ready until 2015. Created as part of the 863 High Technology Program, the supercomputer was part of an effort to make China less reliant on foreign technology and to expand the nation's research and development capacity.

[Featured image by O01326 via Wikimedia Commons | Cropped and scaled | CC BY-SA 4.0]

Sunway TaihuLight

China had planned to upgrade the Tianhe-2 supercomputer in 2015, but the plan was shelved after the U.S. government blocked a deal that would have seen Intel export thousands of CPU chips to the country. Instead, China switched its focus to domestically produced chips for its next supercomputer, the Sunway TaihuLight. Located at the National Supercomputing Center in Wuxi, it was created by the National Research Center of Parallel Computer Engineering & Technology and has been used to simulate the Big Bang and the earliest moments of the universe.

Featuring more than 10 million cores across 40,960 nodes, the Sunway TaihuLight uses the domestically designed SW26010 chip, which packs 260 cores, far more than the 16-core SW1600 used in China's earlier Sunway BlueLight supercomputer. The system achieved 93 petaflops on the Linpack test, with an estimated peak performance of around 125 petaflops. That's more than twice as fast as the Tianhe-2, and it established China as a major force in supercomputing.
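Comparisons like "more than twice as fast" are easiest to check once every Linpack figure is converted to plain FLOPS. The sketch below is a hypothetical helper; the names `LINPACK`, `to_flops`, and `speedup` are illustrative and do not come from any library. It uses only the figures quoted in this article rather than data pulled from the Top 500 site.

```python
# Hypothetical helper: normalize the Linpack figures quoted in this article to
# FLOP/s so machines can be compared directly. Values come from the article text,
# not from the official Top 500 database.
UNIT_SCALE = {
    "gigaflops": 1e9,
    "teraflops": 1e12,
    "petaflops": 1e15,
    "exaflops": 1e18,
}

# (Linpack result, unit) for a few of the record holders discussed in the article.
LINPACK = {
    "Numerical Wind Tunnel": (124.2, "gigaflops"),
    "ASCI White": (4.9, "teraflops"),
    "Jaguar": (1.759, "petaflops"),
    "Tianhe-2": (33.86, "petaflops"),
    "Sunway TaihuLight": (93.0, "petaflops"),
    "Frontier": (1.102, "exaflops"),
}


def to_flops(value: float, unit: str) -> float:
    """Convert a Linpack figure such as (1.759, "petaflops") to FLOP/s."""
    return value * UNIT_SCALE[unit]


def speedup(faster: str, slower: str) -> float:
    """Return how many times faster one machine's Linpack result is than another's."""
    return to_flops(*LINPACK[faster]) / to_flops(*LINPACK[slower])


if __name__ == "__main__":
    ratio = speedup("Sunway TaihuLight", "Tianhe-2")
    print(f"Sunway TaihuLight vs Tianhe-2: {ratio:.2f}x")
    growth = speedup("Sunway TaihuLight", "Numerical Wind Tunnel")
    print(f"Sunway TaihuLight vs Numerical Wind Tunnel: {growth:,.0f}x")
```

Running it gives a TaihuLight-to-Tianhe-2 ratio of about 2.75, which is consistent with the "more than twice as fast" claim. It also shows a gap of well over 700,000-fold between TaihuLight and the 1993 Numerical Wind Tunnel.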

Summit

IBM's Summit is another U.S. supercomputer that took the top spot as the fastest supercomputer in the world. Like several other American record holders, it is based at the Oak Ridge National Laboratory, where it is operated by the Oak Ridge Leadership Computing Facility. It was intended to help research in medicine and cosmology, and to look at ways of tackling climate change. The project emerged after the United States Department of Energy agreed to pay IBM and several partners, such as chipmaker NVIDIA, a sum of $325 million to construct two supercomputers.

After running the High Performance Linpack benchmark, Summit reached a performance of 122.3 petaflops, putting it in the top spot in June 2018. Like many modern supercomputers, it uses GPUs to accelerate its CPUs. In Summit's case, IBM POWER9 CPUs work alongside NVIDIA Tesla V100 GPUs, and the nodes are linked by a dual-rail EDR InfiniBand network. It is also one of the most environmentally friendly supercomputers in the world, ranking fifth for energy efficiency on the Green 500 list.

[Featured image by Carlos Jones/ORNL via Wikimedia Commons | Cropped and scaled | CC BY 2.0]

Fugaku

Fugaku is a Japanese supercomputer that essentially acts as the successor to the earlier K computer. In fact, the K computer was officially decommissioned in 2019, with its work set to be transferred to Fugaku once the new machine became fully operational in 2021. Developed at an estimated cost of $1 billion, the supercomputer was designed to tackle a wide range of problems and aid research in many areas. For example, Fugaku was used to study the effectiveness of face masks during the COVID-19 pandemic.

Located at the Riken Center for Computational Science in Kobe, Fugaku was a joint effort between Fujitsu and Riken to create a shared research resource. Built around Fujitsu's 48-core A64FX, an Arm-based system-on-chip, Fugaku achieved a score of 415.53 petaflops on the Linpack benchmark. Fujitsu is planning an upgrade that will link the supercomputer with an experimental quantum computer, in the hope of increasing its performance and allowing it to work on more complicated problems, including artificial intelligence systems.

[Featured image by Raysonho @ Open Grid Scheduler / Scalable Grid Engine via Wikimedia Commons | Cropped and scaled | CC BY-SA 4.0]

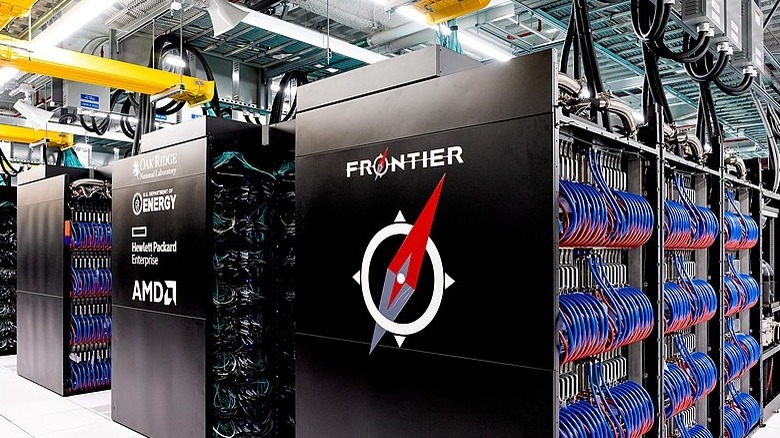

Frontier

At the time of writing, Frontier is the fastest and most powerful supercomputer in the world. Located in Tennessee at the Oak Ridge Leadership Computing Facility, it was created through a collaboration between Oak Ridge National Laboratory and the Department of Energy. Frontier was developed by Hewlett Packard Enterprise and its subsidiary Cray, alongside chipmaker AMD, at a cost of some $600 million. It went online in 2022 and has held the top spot ever since.

Recognized as the world's first exascale supercomputer, it achieved a Linpack score of 1.102 exaflops. That's equivalent to 1,102 petaflops, or more than a quintillion calculations per second, and more than double the score of the previous record holder, Fugaku. That makes it roughly a million times more powerful than a typical PC or laptop. Powering the supercomputer are more than 9,400 nodes, each containing a single CPU and four GPUs supplied by AMD. The end result is some 8.7 million cores that consume in excess of 22 megawatts of power.
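The exascale comparison works out as follows, taking Fugaku's Linpack result as roughly 416 petaflops (rounded for simplicity):

```latex
\begin{aligned}
1.102\ \text{exaflops} &= 1.102 \times 10^{18}\ \text{FLOP/s} = 1{,}102\ \text{petaflops} \\
\frac{1{,}102\ \text{petaflops}}{416\ \text{petaflops}} &\approx 2.65
\end{aligned}
```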

[Featured image by OLCF at ORNL via Wikimedia Commons | Cropped and scaled | CC BY 2.0]