6 iPhone 16 Features That Android Phones Have Had For Years

The iPhone 16 and iPhone 16 Pro had a flashy launch in September, as Tim Cook and company showed off Apple's latest and greatest smartphones. These upgraded models bring some eyebrow-raising improvements over last year's iPhone 15 series, including beefy new chips, forthcoming AI features, and a camera shutter button called Camera Control. But as everyone knows, the best artists take plenty of inspiration from others, and so, too, does the iPhone.

As someone who's been documenting Android's big changes over its lifespan, I can see clearly that several features new to the iPhone lineup this year will feel rather familiar to long-time Android users. Though it might be tempting for Android fans to score some points against iPhone fans in Reddit debates by pointing this out, it's more useful to look at how Apple has implemented the features pioneered by Android phones. Apple may have a habit of copying features, but it tends to do so with a considered, thoughtful approach.

In fact, both the iPhone and various Android phones have always taken some degree of inspiration from one another. To name but a single example of this symbiotic relationship, Apple got rid of the home button on the iPhone X a few years after Android introduced on-screen navigation with Ice Cream Sandwich. Android then added gesture controls in Android 10 to iterate on Apple's buttonless navigation.

The iPhone 16 continues in this tradition of cyclical innovation, taking inspiration from more than a few features that Android phones have adopted over the years. As usual, Apple may be late to the party, but it showed up with flowers; many of the features the iPhone 16 cribs from its competition are more refined than the originals, proving that being first isn't everything. Here are six iPhone 16 features that Android phones have had for years.

Camera buttons were once popular on Android phones

One of the most exciting iPhone 16 features is a single hardware button Apple calls the Camera Control. This combination capacitive and physical button sits on the side of the phone and can be used to interact with the camera (and certain other apps) in unique ways. Users can adjust zoom and other settings, half-press to focus the camera, and more. But while it may be one of the most refined implementations of a camera button that we've seen in recent memory, it's not exactly new to those who know how common camera buttons used to be on Android phones.

For instance, my first Android smartphone was an LG Optimus V that I bought prepaid on Virgin Mobile. Despite it being a rather entry-level device with a 3.2MP camera released all the way back in 2011, it came with a camera button planted proudly on its side. While that button couldn't do some of the fancy, haptic tricks Apple's 2024 implementation of the feature can, it was handy for snapping quick shots of my high school adventures, and it still works today. On the other end of the spectrum were devices like the Samsung Galaxy Camera, which was essentially a camera strapped onto an Android phone. Suffice to say: it had a camera button.

Camera buttons remained standard on many Android phones for quite some time, though they fell out of fashion in the mid-2010s. Today, it's extremely rare to see one, which is a shame. Thankfully, many Android manufacturers tend to take cues from the iPhone, so perhaps Apple will lead the way in repopularizing this feature and making it better than ever.

Apple is a bit late to AI features

Like it or not, the tech industry has gone gonzo for AI, with seemingly every company trying to get in on the arms race to stuff your phone full of features that range from useful to perplexing to downright broken. As it has many times before, Android has served as the testing ground for these new technologies, and Apple is now stepping in to deliver a (hopefully) more refined experience with its forthcoming Apple Intelligence features.

Google's Pixel line of smartphones, in particular, has led the AI arms race for a few years now. This was the impetus behind the search giant's development of Tensor, its own custom line of mobile silicon, which is built for machine learning and AI. In fact, features like the Magic Eraser, which lets Pixel users (and, more recently, all Google Photos users) remove objects from photos, debuted on the Pixel 6, which was the first phone to feature the Tensor chip. That's but one of many Pixel AI features, and over the past couple of years, we've seen other large Android manufacturers, most notably Samsung, create their own suites of AI features.

Some of these have raised concern, as Android phones continue to blur the line between photography and fiction with reality-bending camera and photo editing features. Others, like live translation and note transcription, are transformatively helpful. When it comes to Apple Intelligence, Cupertino is taking a more measured approach, and the iPhone 16 rejects the worst of the trend. For instance, its AI will offer helpful advice on setting up a camera shot, but the camera app won't bear false witness by adding people who weren't there or inventing a smile for someone who failed to say, "Cheese."

4K, 120 FPS video predates the iPhone 16 Pro

The iPhone 16 Pro and Pro Max launched with some incredibly exciting camera features, most notably the ability to shoot 4K video at 120 frames per second. That's a game-changer for iPhone cinematography, as footage with that frame rate can be slowed down to 24 or 30 fps for movie-quality slo-mo. Even more casual users, like the parent filming their kid's high school football game, will benefit from that capability. But while it's exciting to see 4K at 120 fps come to the iPhone, it's not the first phone to feature that capability.

Released back in June 2022, the Sony Xperia 1 IV came equipped with a 12 MP, 1/3.5-inch Exmor RS sensor that had physical zoom capabilities and, like the iPhone 16 Pro, shot HDR (high dynamic range) video in 4K at 120 fps. Since it had a 4K OLED display running at 120Hz, it could then play those videos back at full resolution, something the iPhone 16 Pro will not be able to do on its 1206 x 2622 OLED display after shooting similar footage. However, the frame rate will remain intact on its 120Hz panel.

But although it's not the first to shoot video in 4K at 120 fps, the iPhone 16 Pro remains among a select few smartphones with that capability. The Samsung Galaxy S24 Ultra was among the rare few to beat it to the punch this year, sliding into home plate mere inches from being tagged out. Given the popularity of the iPhone, it will put that capability into more hands than any other smartphone. Moreover, the iPhone is well-known to outclass the vast majority of Android shooters when it comes to video performance, so it will be exciting to see what people do with it.

Enormous displays are bigger on Android

Apple is currently touting the iPhone 16 Pro Max's massive 6.9-inch display as the largest ever on an iPhone. That's true, of course, but it's not the largest display on any smartphone. Not only has the world of Android seen some truly massive phones over the years, but the iPhone would arguably not have expanded in size over the years were it not for Android phones that proved there's a large consumer demand for big screens. The world's biggest smartphones are all Android phones.

Today, Android foldables dwarf their inflexible peers, but before screens could be bent in half, Android phones led the industry in engorged screen sizes. Samsung was arguably the most influential with its Galaxy Note series, which debuted in the fall of 2011 with a 5.3-inch display and proved popular at a time when the iPhone's display was still a quaint 3.5 inches. Although Steve Jobs had famously believed no one would buy big phones, it wasn't long before Apple caved to consumer demand, first expanding the iPhone a year after Jobs's death as the iPhone 5 crept up to 4 inches. Soon thereafter, the iPhone 6 Plus finally took the Galaxy Note on directly with its 5.5-inch IPS LCD panel.

If you sent even the regular iPhone 16 back in time to 2011 with its 6.1-inch display, Steve Jobs would probably throw it into his aquarium. But large phones remain popular, as evidenced by current-day Apple's boasts about the iPhone 16 Pro Max's super-sized screen. Android retains the lead when it comes to screen size, although these days that's due to book-style foldables like the 2024 Pixel 9 Pro Fold, which fits the power of an 8-inch display in the palm of your hand.

The iPhone Action Button refines an old idea

This year, the base iPhone 16 model inherits the Action Button that was exclusive to last year's Pro models. Replacing the mute switch found on older iPhones, the Action Button is a user-mappable button that can be used to put the phone in silent or Focus modes, open the camera, turn on the flashlight, start a voice memo, and much more. It's the first customizable hardware button on an iPhone, but it's far from the first on any smartphone.

Android phones have sported remappable buttons and other ways of accessing shortcuts for close to the entire lifespan of the operating system. The prime example of this is the Bixby Button Samsung shipped on the Galaxy S8 and Galaxy S9 phones. While it was initially a button to summon Samsung's voice assistant, the company later opened it up to remapping so it could be used to launch other apps (but you couldn't remap it to Google Assistant without a lot of extra steps). Later on, the company dropped the extra hardware button, but those with newer Galaxy phones can still remap a double press or long press of the power button to do nearly whatever they please.

On Android, remappable hardware shortcuts didn't always take the form of boring buttons. The 2017 HTC U11, for instance, had a feature called Edge Sense that let users squeeze the phone like a sponge to trigger a remappable shortcut. The Motorola Moto X, released back in 2013, got even weirder by using motion-sensing shortcuts. Users could make a chopping motion with the phone to activate the flashlight, or a twisting motion to activate the camera.

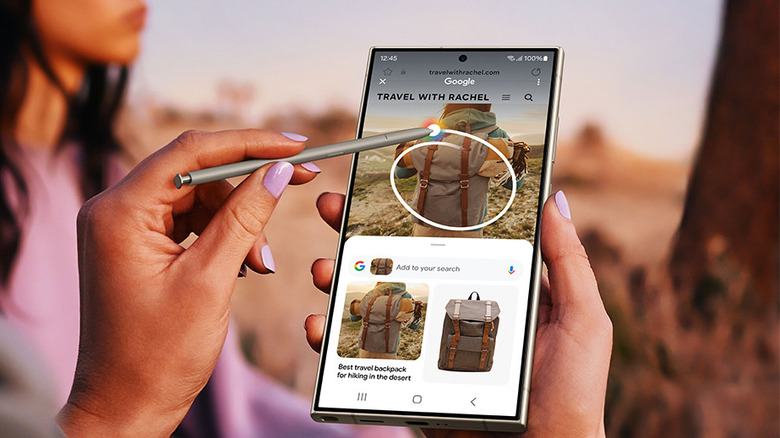

Apple's Photographic Styles seem familiar

Apple loves to make old features feel new with a bit of creative branding. So, when it came time to add camera filters to the iPhone 13, a feature Android phones had touted for ages, they were rebranded as Photographic Styles. But while the iPhone 16 brings a massive upgrade to Photographic Styles that allows users to play around with filters even after the shot has been snapped, even that upgrade was heralded by Android phones from the previous decade.

Since Android manufacturers tend not to treat every feature as worthy of celebration in the same way Apple does, it's hard to know exactly when the first Android camera filters arrived. But at least as far back as the HTC One M8, released in 2014, users could edit aspects of photos after they were taken, either adding color and tone filters or even changing which part of the photo was in focus. I dusted mine off for this article, and it's still fun to play around with. I also dusted off my Galaxy S9, whose My Filters feature lets users not only apply custom filters but also use photos they've taken as the baseline for a new filter. This feature has only improved over the years, and on my Galaxy S23 Ultra from early 2023, the filters can be removed or edited after the fact, just like on the iPhone 16.

Although Android beat Apple to market with these sorts of features, the iPhone 16's implementation of them in iOS 18 might be the best yet. While reviews of the new skin undertone filters have been mixed, the iPhone's D-pad-style controls for adjusting different aspects of a filter are refreshingly simple.