We Tried Apple Intelligence. Here Are The Nine Best Features So Far

Apple's foray into generative AI began with the introduction of iOS 18 at WWDC 2024. In usual fashion, the company gave its endeavor a special name: Apple Intelligence. What came as a surprise was that Apple limited it to only a couple of smartphones (the iPhone 15 Pro duo) and iPads with M-series silicon. The company attributes that to the raw firepower needed to handle these demanding AI workloads.

I have extensively tested Apple Intelligence features on my iPhone 15 Pro Max and an iPad Pro with the M4 processor, and so far, the experience has been fairly smooth. Apple's approach is noticeably tamer and slower-paced than the ambitious ideas you will encounter with ChatGPT or Microsoft's Copilot. Moreover, quite a few of the biggest Apple Intelligence features have yet to make an appearance.

Following is a distilled version of my experience using Apple Intelligence features, alongside the improvements that Apple can make down the road. It's also worth pointing out that Apple hasn't committed to a "forever free" approach for its next-gen AI features, and it's plausible that iPhone users will have to cough up extra cash to use them in the near future.

Writing tools

When Copilot and Gemini started making their way into mainstream productivity tools like Docs, Gmail, and PowerPoint, I was convinced that generative AI had finally found a purpose that appealed to the masses. Apple missed that early train but has caught up with the arrival of Apple Intelligence. Dubbed Writing Tools, it's a kit that lets you proofread a document, make it sound friendlier, give it a professional polish, or even rewrite the whole darn thing. If you want to absorb the gist of a long article, there's a dedicated "concise" tool that does just that.

For those swamped by assignments or academic presentations, there are dedicated one-click controls that turn paragraphs into bullet points, arrange them into lists, or even organize them into a table. The results aren't bad, especially the proofreading output and the conversion into a professional or friendly tone. However, compared to ChatGPT or Claude, Writing Tools are noticeably slower.

What I love most is the system's ability to run offline. This is a major win because you no longer have to worry about staying connected to the internet. It's also the safer approach, as your data is not processed or saved on a cloud server. You don't have to lose sleep while working on sensitive files, since all the editing and format conversion happens on-device, unlike with typical cloud-based tools such as ChatGPT.

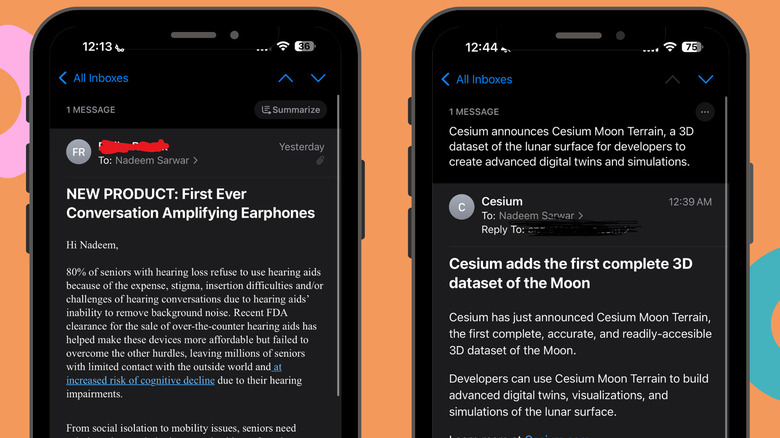

Email summaries

For over a year, I have been using an excellent app called Shortwave. Powered by OpenAI's latest GPT-4o model, this app can perform some cool tricks, but the best one is email summarization, which works for both standalone emails and threaded conversations.

Naturally, when iOS 18's latest developer beta arrived with Apple Intelligence and a summarizer for Mail, I immediately installed it and tried the new feature. It works fairly well, as long as your definition of "well" is aggressively concise. For example, with a press release about a brand launching low-alcohol wine, the AI retained a few key details but missed some important elements, such as the promise of low to zero sugar content, the price of the wine bottles, and the launch date.

The summarizer is good at comprehending the details, but it also has a habit of flubbing them. In one case, the summary described me sending a message when it was actually the other way around. In another, an email sent to a professor requesting an interview was turned into an internship request. For now, the Mail summarizer is a neat tool for sifting through tedious marketing messages, but it could use some refinement. My honest advice: don't rely on it for important stuff like work emails, academic matters, or anything where missing a crucial detail could put your career on the line.

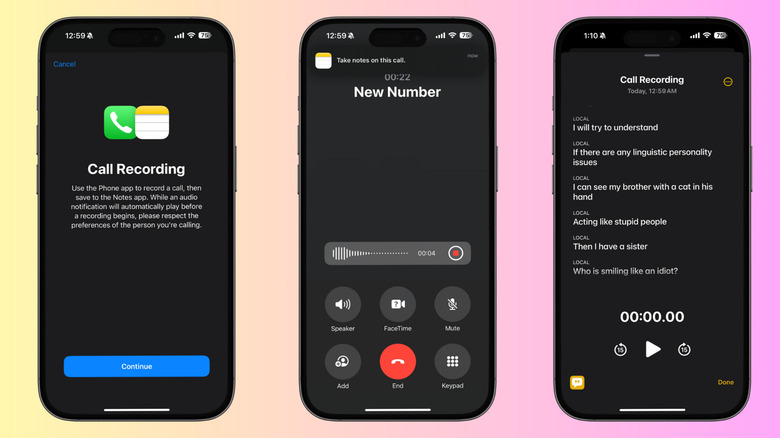

Call recordings and transcriptions

I always carry a Google Pixel phone because of its in-house Recorder app, which does an excellent job of transcription. The built-in recorder has also been a lifesaver on occasions when I only needed an excerpt of a call rather than a transcript of the whole interview. The iPhone just couldn't do any of that natively.

iOS 18, in one fell swoop, solved all those pain points thanks to a native call recorder that can transcribe the conversation and create a summary. So far, it has proven to be a reliable companion, as long as you're speaking with an American English accent. I'd still rather have it than not, partly because I can spot where the AI made a mistake. For example, within the first minute of one call, it misheard "tonality" as "personality" in the summary.

Such mistakes are easy to spot if you understand the context of the conversation. For now, the only major inconvenience is that recordings are not saved in the Phone app. Instead, they are saved as a separate note in the Notes app with a generic name such as "Call with XYZ." On the positive side, especially for someone like me who conducts interviews for a living, these calls are transcribed with ease, and their automatic import into Notes makes them easy to fold into a project.

Photo search with natural language

Until now, the Photos app on iPhones has offered only rudimentary search. But thanks to a native AI implementation, it can now return relevant results for hyper-specific queries written in natural language. My iPhone 15 Pro Max is home to a few thousand product shots, pet pictures, and everything in between. I simply typed "person drawing on a tablet" in the app's search field, and it picked up photos of me sketching on a Wacom slate, an iPad Pro, or an Android tablet. It was not 100% accurate, as a few of the images depicted only a stylus and a tablet rather than an actual person or their hand.

Even so, it's one of the best implementations of a search feature on a phone, and it's still in testing. By the time Apple releases it widely later this year, I expect a lot of the rough edges in object recognition to be polished and search accuracy to improve.

To further help with discovery, the Photos app now automatically recognizes media and groups it into categories such as trips, pets, people, and more. At the bottom of the Photos carousel, users will also find filters for showing only certain types of content, such as videos, selfies, portraits, and Live Photos. Duplicates and recently deleted images also get their own one-tap sections, alongside hidden media.

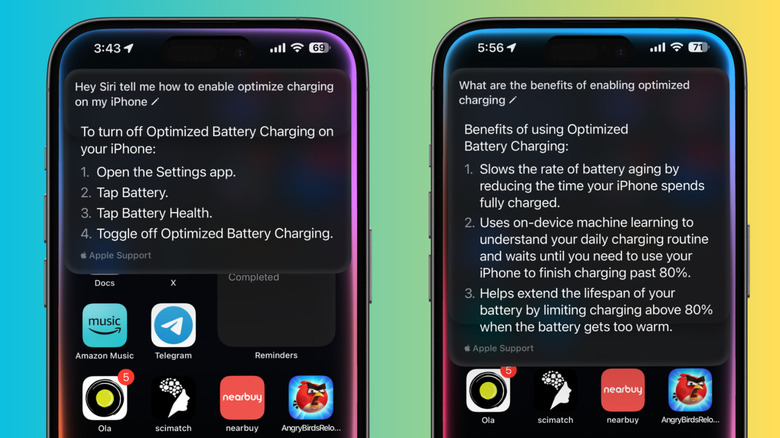

Siri as tech support

It goes without saying that Siri has been a laggard in the virtual assistant race for a while now. At a time when Google Assistant was evolving into its Gemini era, riding atop the generative AI foundations, Siri was still frustratingly ill-equipped. With the arrival of Apple Intelligence, Siri is getting a massive makeover that essentially makes it the master of your entire iPhone and the connected Apple ecosystem.

One of the best tricks of this new Siri experience is its mastery of Apple's user guides. Say you want to know how to change Siri's voice. It now lists the steps alongside a quick button that takes you straight to the relevant Settings page where you can make the change. This is a significant convenience, especially from an accessibility perspective, and for people who aren't particularly tech-savvy and rely on folks around them to troubleshoot basic iPhone issues or find the right tools.

Depending on your query, the Siri conversation window will also show an "Apple Support" link that takes you to a support page with more information. In some cases, the link redirects you to the relevant section of Apple's official iPhone user guide. And the best part is that you don't have to speak in a robotic tone. You can ask questions in natural language, and the assistant will do its best, with room for back-and-forth conversation.

A more conversational Siri

Apple Intelligence has yet to take its final shape, as some of the more promising features will only arrive later this year or even in 2025. However, Siri has already received a massive visual and functional overhaul. While that overhaul is a welcome change, the bigger shift is a deeper understanding of natural language commands. For example, you can ask "What are the benefits of optimized charging on an iPhone?" and it will answer with a summarized explanation. Depending on your query, it will either draw on Apple's own local training data or pull answers from web articles, Quora, or other relevant sources, presented as hyperlinked text.

Interestingly, you can now follow up your original query with another voice command without saying the "Hey Siri" wake phrase. Voice commands can now also trigger actions, although full support for third-party apps will take some time to materialize.

In its current iteration, Siri can take consecutive voice inputs for tasks like setting an alarm, adding a calendar entry, and even emailing a reminder about it. Depending on the task at hand, it may occasionally ask for a few additional confirmations, but the drudgery of repeating the wake phrase is gone, and conversations feel more responsive.

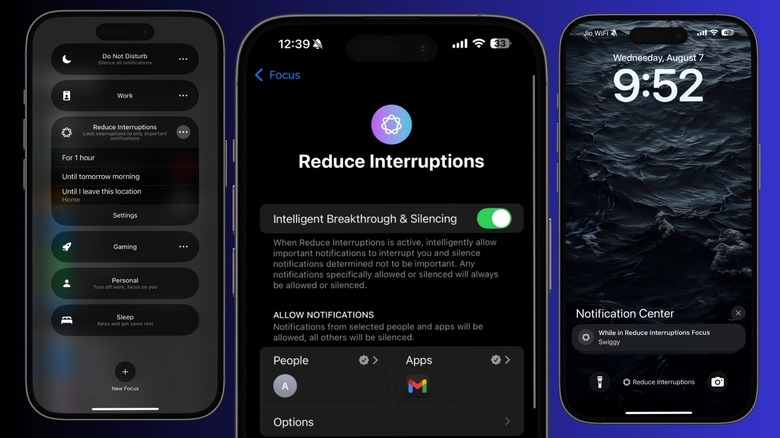

An intelligent Focus Mode

The ability to customize a no-distraction mode is not exactly an alien concept on Android phones or iPhones. However, iOS 18 introduces a fresh take on the idea that Apple calls Reduce Interruptions. It only lets notifications from a select few apps pass through while silencing the rest. The AI-fueled mode kicks into action once you enable the "Intelligent Breakthrough & Silencing" toggle. If you are concerned about missing notifications from important apps such as Gmail, Slack, or WhatsApp, you can create exceptions for them. For more granular control without allowing an entire communication app through, you can create similar exceptions for people in your contacts list.

You can also make the Reduce Interruptions Focus stand out on the iPhone in your hand as well as a connected Apple Watch by picking a custom Lock Screen, Home Screen page, and watch face that signal the feature is active. On a few occasions, some marketing or promotional notifications from certain apps slipped through, so there's still scope for improvement.

I got the best experience by creating an activation schedule and then adding a few Focus filters for apps like Messages and Mail, while also customizing behaviors such as turning on Low Power Mode, disabling the Always-On display, activating Dark Mode, and enabling Silent Mode for a total distraction-free zen.

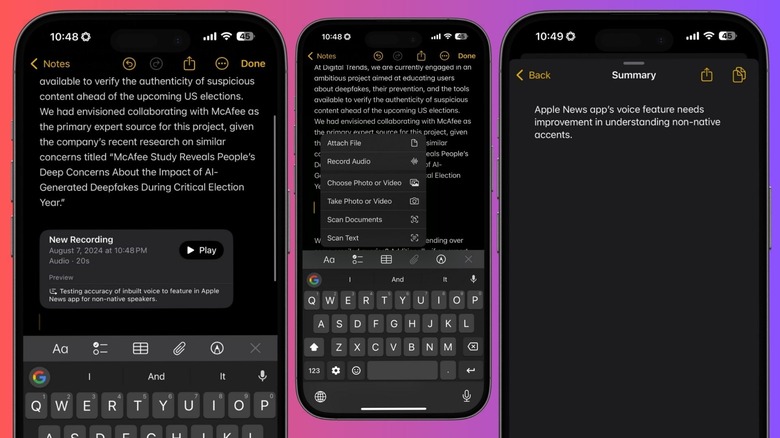

Voice notes get a boost

Apple's Notes app is not the most compelling option out there, to put it mildly. I only use it because it syncs across all my Apple devices, can be used for real-time chats, and lets me share notes with my siblings or fellow reporters with ease. In 2024, the Notes app is getting its fair share of upgrades, such as automatic math calculations, collapsible sections, and highlights. The one that stands out most for me is the app's automatic transcription and summarization of voice recordings.

You can now tap the insert option, record an audio clip, and have it inserted as a card between lines of text. A single tap opens the playback screen, where a transcript is already waiting, alongside a one-click summarization tool. It's one of the most productive upgrades I've come across so far in iOS 18. The transcription accuracy is good, barring some flubs with recognizing accents. I'm really hoping Apple adds support for more accents.

It would be a crucial upgrade because when the onboard AI fails to pick up certain words and pauses correctly, the error carries over into the summary. For example, it heard "U.S. English speakers" as "Jewish English speakers" and missed the entire context. Depending on how different your English pronunciation sounds from a native American English speaker's, you might find glaring errors, or none at all, if you can fake a good accent.

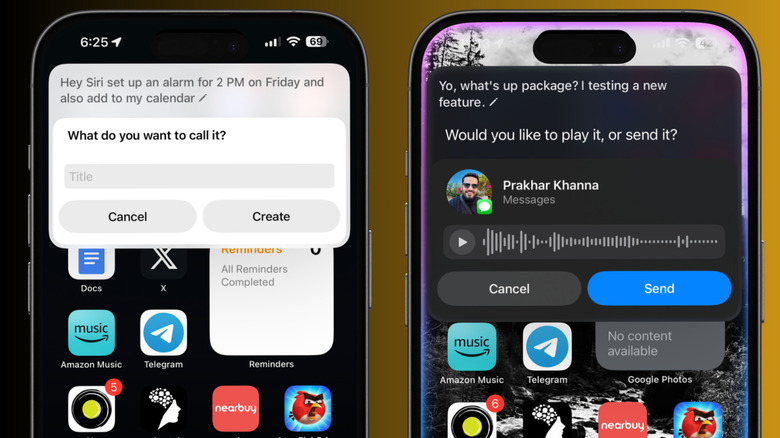

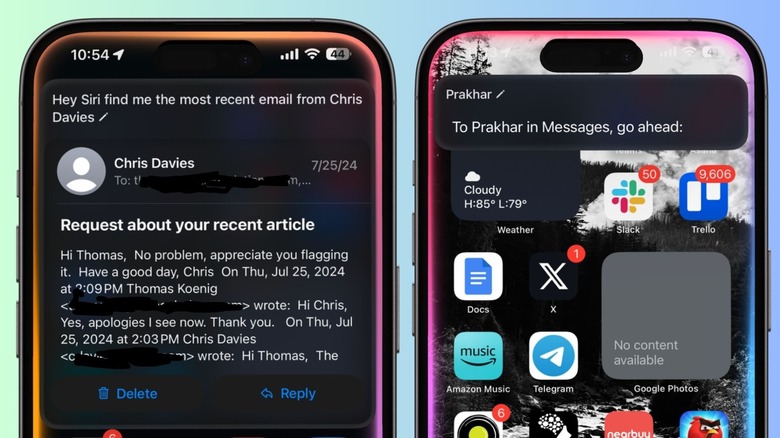

An integrated Siri

One of the most underrated tricks that Siri landed, alongside natural language and contextual understanding of on-screen activity, was the ability to interact with other apps and perform relevant actions. This is enabled by the "pre-defined and pre-trained App Intents" in the SiriKit frameworks, which let developers integrate advanced Siri capabilities into their apps. Based on the action described in a voice command, Siri can pick the right app to execute the task.

For example, you can ask it to "Send a voice message to X," and Siri will not only pick the right contact and use the Messages app to send it but will do so without ever opening the app. Likewise, you can ask it to open the most recent email from a certain person, and the assistant will oblige. Here too, the email opens in a preview window at the top of the screen, anchored around the Dynamic Island.

Depending on the query, the Siri window is also actionable, which means you can complete tasks either by voice or by touch.

"Siri can understand content from your app and provide users with information from your app from anywhere in the system," promises Apple. Until now, Siri has been held back by a lack of deep app integration. Now that it can communicate with apps, Siri should be able to perform mainline tasks across apps with ease. The ball is now in developers' court.