You Can Now Control Your iPhone Or iPad Using Your Eyes: Here's How

Apple has long placed considerable importance on accessibility features in its products. From controlling your iPhone with your voice to assigning an action to an invisible button on the back, there are plenty of great accessibility features you should be using on your iPhone. Apple's WWDC 2024 event pushed the trend forward, with iOS 18 and iPadOS 18 introducing several new features that cater to people with disabilities or those who have trouble interacting with their iPhones and iPads efficiently.

iPads, especially, are at the forefront of offering innovative features to individuals with diverse requirements, thanks to their large displays, powerful speakers, and approachable interface. Among the slew of new iPadOS 18 features to be excited about is the ability to control your device using nothing but your eyes. Designed to accommodate people with physical disabilities that hinder the use of a touchscreen device, this accessibility feature leverages artificial intelligence and on-device machine learning to unlock a new way of controlling the user interface. Trying eye tracking out for yourself on an iPhone or iPad is easy. Read on to find out how.

How to enable Eye Tracking on iOS 18

Eye Tracking is available starting with the latest developer preview of iOS 18, so you will have to install the beta build first to access it. You can confirm your iPhone or iPad's software version by navigating to Settings > General > Software Update. To enable the feature, follow the steps below:

- On your iPhone or iPad running iOS 18 or iPadOS 18, launch the Settings app.

- Navigate to Accessibility > Eye Tracking under the "Physical and Motor" section.

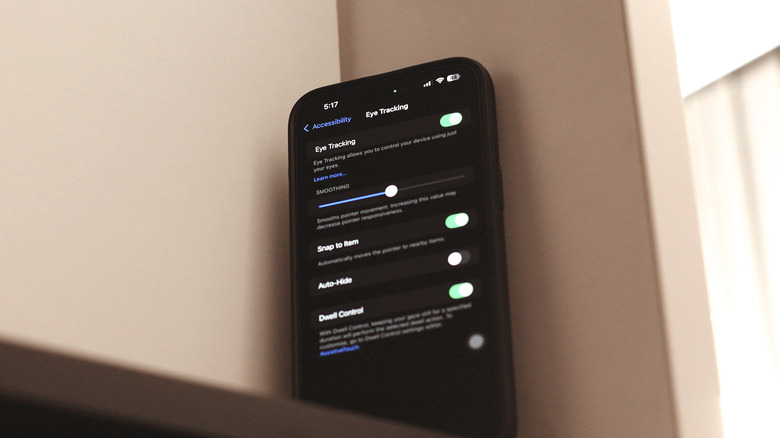

- Flick the "Eye Tracking" toggle on.

- You will then be directed to follow a dot across different parts of the screen using your eyes. Be sure there is ample lighting in your surroundings for the best calibration.

Apple recommends you place your device on a steady surface around 1.5 ft (50 cm) away. Since the eye tracking technology depends on your device's front-facing camera to track your eye movements, make sure nothing is covering it, and clean off any smudges before you begin. Once the calibration process finishes, your device will do its best to determine which parts of the interface you're looking at. You should then be able to see the eye tracking mode in action.

Using your eyes to control your device

Visual indicators, such as a pointer that follows your eye movements and outlines that highlight the control in focus, help you understand where you are looking and what you are about to select. In our experience, the accuracy is quite finicky, especially on the smaller screen of an iPhone. You do get a couple of adjustable options, including a smoothness slider that may help with overall responsiveness. The "Snap to item" toggle does exactly what it says and makes eye tracking easier to use by magnetically snapping the pointer to the closest element on screen.
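For the curious, here is a rough conceptual sketch in Python of what a smoothness setting and a snap-to-item option might do under the hood. It is not Apple's implementation: the function names, the averaging formula, and the item coordinates are all assumptions made purely for illustration.

```python
from math import hypot


def smooth(previous, raw, smoothness):
    """Blend the last pointer position with a new raw gaze sample.

    Hypothetical model: smoothness is in [0, 1). Higher values make the
    pointer steadier but slower to follow the eyes.
    """
    alpha = 1.0 - smoothness
    return (
        previous[0] + alpha * (raw[0] - previous[0]),
        previous[1] + alpha * (raw[1] - previous[1]),
    )


def snap_to_item(point, items):
    """Return the name of the item whose center is closest to the pointer.

    items maps a made-up control name to its (x, y) center on screen.
    """
    return min(
        items,
        key=lambda name: hypot(items[name][0] - point[0], items[name][1] - point[1]),
    )


# Illustrative values only: two controls and one jittery gaze sample.
controls = {"Back": (10, 10), "Share": (100, 10)}
pointer = smooth((0, 0), (24, 16), 0.5)
focused = snap_to_item(pointer, controls)
```

In this toy model, a higher smoothness value trades responsiveness for stability, which is one plausible reason the slider can change how the feature feels in use.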

Using Dwell Control, you can perform a specified action by holding your gaze on an item for a set amount of time. Eye Tracking on iOS 18 also works hand-in-hand with AssistiveTouch to allow for slightly more complex controls. Examples include scrolling, triggering your device's hardware button actions, opening Notification Center or Control Center, activating Siri, and temporarily pausing Dwell Control to avoid accidentally registering actions while you watch a video or read long text.
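The idea behind dwell-based selection can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions, not how iOS implements it: the `DwellTimer` class, its one-second default, and the `paused` flag are hypothetical.

```python
class DwellTimer:
    """Fire an action once the gaze has rested on one item long enough."""

    def __init__(self, dwell_seconds=1.0):
        self.dwell_seconds = dwell_seconds  # assumed default, not Apple's
        self.target = None
        self.started_at = None
        self.paused = False  # mimics temporarily pausing dwell

    def update(self, target, now):
        """Take the item currently in focus and a timestamp in seconds.

        Returns the item to activate, or None if nothing should happen yet.
        """
        if self.paused or target is None:
            self.target = None
            self.started_at = None
            return None
        if target != self.target:
            # Gaze moved to a new item, so restart the countdown.
            self.target = target
            self.started_at = now
            return None
        if now - self.started_at >= self.dwell_seconds:
            # Restart the countdown so the action does not fire every frame.
            self.started_at = now
            return target
        return None


timer = DwellTimer(dwell_seconds=1.0)
results = [timer.update("Play", t) for t in (0.0, 0.5, 1.0, 1.2)]
```

Pausing the timer in this sketch simply ignores every gaze sample, which mirrors why pausing dwell is handy while watching a video or reading.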

Given the nature of prerelease beta builds, we expect the eye tracking functionality to improve with time. It's also difficult to determine just how much of a toll enabling this accessibility feature will take on your device's battery. On the plus side, despite using the selfie camera to track your eyes, Apple claims that all the data is processed and stored securely on-device.